DEW #128 - AI Detection Engineering Uncertainty, 3D Threat Hunting and Salesloft Drift Shenanigans

and the Bills win season opener #gobills

Welcome to Issue #128 of Detection Engineering Weekly!

✍️ Musings from the life of Zack in the last week

I’ve had to cancel work travel to Datadog’s Paris Office due to an Air Traffic Controller strike

I’m very close to canceling ChatGPT and using Claude exclusively. It’s an excellent threat research and engineering co-pilot

You’ll read more about this below, but I’m getting more freaked out about developers being targeted by threat actors. Attacks against dev tooling ecosystems and macOS are increasing, and both of these target sets are a mainstay for many firms’ operations

⏪ Check out last week’s issue if you’ve missed it!

This Week’s Sponsor: Material Security

Fortify Your Google Workspace, from Gmail to Drive.

Protect the email, files, and accounts within Google Workspace from every angle. Material Security unifies advanced threat detection, data loss prevention, and rapid response within a single automated platform so your lean team can do more with less. Deploy in minutes, integrate with your SIEM, and let “set-it-and-forget-it” automation run 24/7.

Gain enterprise-grade security without enterprise overhead.

💎 Detection Engineering Gem 💎

The experience of the analyst in an AI-powered present by Julien Vehent

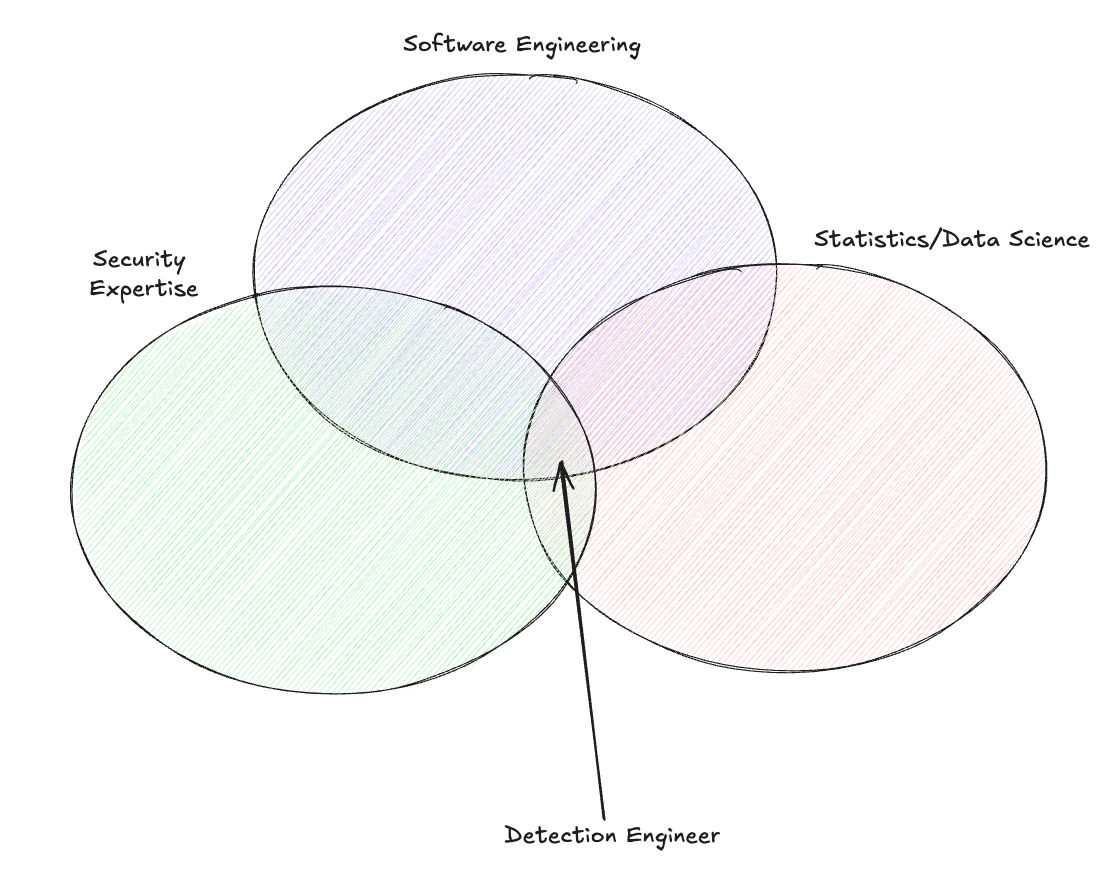

When I think of detection engineers, I think of three disciplines: software engineering, security analysis, and statistics. I wrote about the first two of these disciplines in Field Manual #1, and started touching on statistics in Field Manual #3. The Detection Engineering Mix is born of evolution and the necessity of a business. With limited human capacity paired with rapidly developing technology, you’ll need to adjust these three knobs in different configurations to prevent ticket queueing and alert fatigue.

What happens when you add a fourth circle to the Detection Engineering Mix? It would seem overwhelming and infeasible because the expectations of software engineering, security expertise, and statistics set the bar reasonably high. This is where the AI element comes into play. If you had told me it was a necessity two years ago, I would have told you to kick rocks. Now I’m not so sure.

In this post, Vehent offers his commentary on the uncertainty surrounding the integration of AI engineering and automation into our collective approach to threat detection. His issue with the mix, AI or not, is that new security engineers overemphasize aspects other than security expertise. This makes sense in some ways because software engineering is how we are supposed to “scale”, and it’s a hard requirement for security positions in more modern organizations.

He argues that AI will move us further away from the security expertise circle above, and we’ll lose the analytical rigor along with it. Detection and response teams should have their work cut out for them to triage and investigate, as it helps them experience the pain that software engineering, data science, and now AI can help solve. If they don’t feel the pain, how can we know when a security problem is solved?

🔬 State of the Art

Can't Hide in 3D by Certis Foster

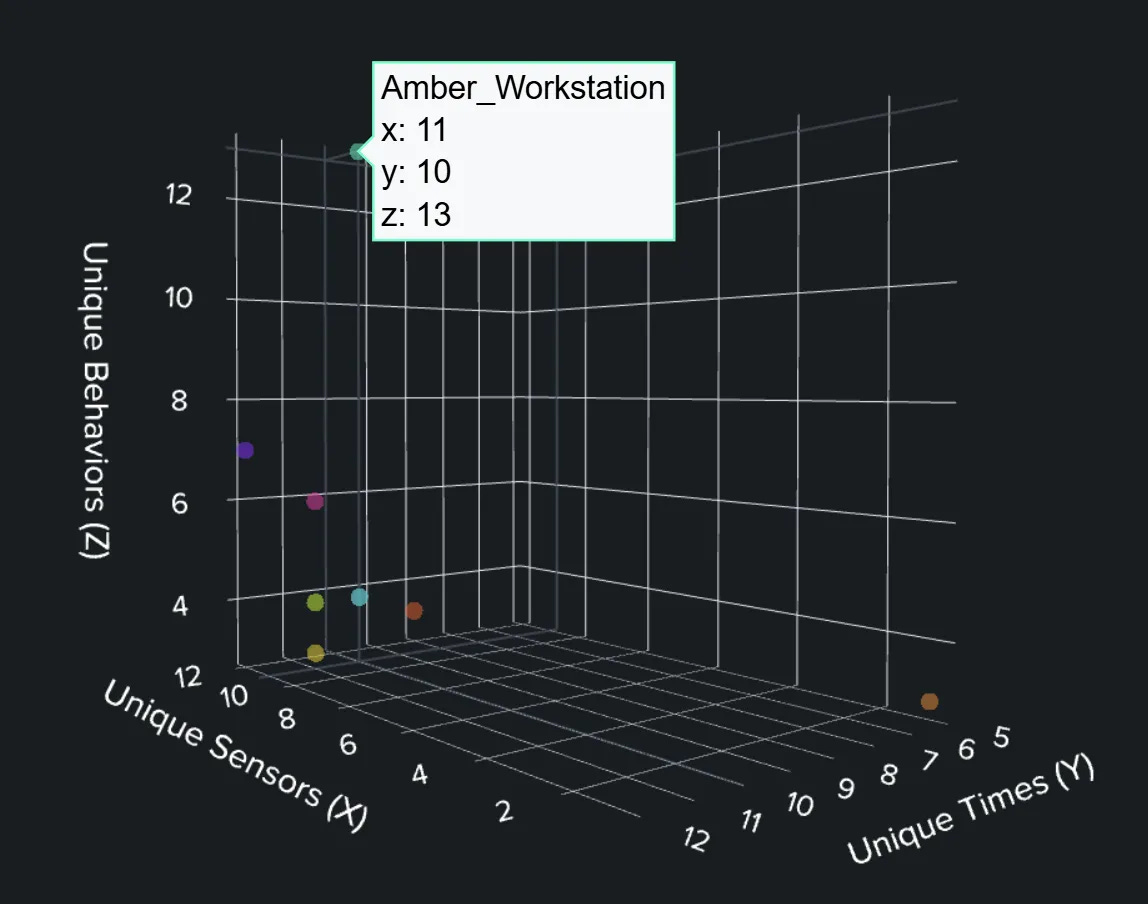

Time-Terrain-Behavior is a threat detection modeling framework developed by MITRE that examines how three types of metadata from security telemetry can help pinpoint compromises. It’s a neat paper, so if you have time, get a cup of tea/coffee/drink and some other hygge items and check it out. These dimensions combine three alerting capabilities that we typically use in an atomic sense. Time is the baseline for when the machine is active. Terrain examines the security tooling that generates observations on data from the machine. Behavior baselines benign workstation activity.

Foster’s approach here is to apply the concept of TTB to a real dataset and assess its performance. They took Splunk’s Boss of the SOC (BOTS) Frothly dataset, loaded it into a SIEM, and laid out a blueprint to implement and find compromises using TTB. They broke down each TTB component into three sets of labels: Time {Morning, Evening, Weekend}, Terrain {Windows, Network, Cloud}, and Behavior {Authentication, Execution, Access}, all as distinct counts. Each distinct label was categorized even further. They then plotted workstations using some incredible SPL queries and got this:

This identified one station as an outlier, and Amber was the one that was compromised! No detection rules, just some clever labeling, intuition after reading the TTB paper, and security expertise. I’m a big fan of this type of vectorized approach to threat detection, though I see a few problems that Foster also addresses:

They can be cost-prohibitive if you have 1000s to 10000s of assets to protect

They lose specificity when an actor does something during core hours, so you’ll see a larger distance on sensor hits, but it may underfit on other components like time

Sensor hits here can also mess up specificity if your tooling is inadequate

I do love this mathy approach!
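To make the "distinct counts plus distance" idea concrete, here is a minimal Python sketch. It is not Foster's SPL and not the MITRE paper's method: the event tuples, host names, and the choice of Euclidean distance from the fleet centroid are all my own assumptions for illustration. Each host becomes a 3D vector of distinct Time, Terrain, and Behavior labels, and hosts are ranked by how far they sit from the average host.

```python
import math
from statistics import mean


def host_vectors(events):
    """Build {host: (n_time, n_terrain, n_behavior)} from labeled events.

    `events` is an iterable of (host, time_label, terrain_label, behavior_label)
    tuples. This schema is hypothetical, not the BOTS dataset format.
    """
    seen = {}
    for host, time_label, terrain_label, behavior_label in events:
        times, terrains, behaviors = seen.setdefault(host, (set(), set(), set()))
        times.add(time_label)
        terrains.add(terrain_label)
        behaviors.add(behavior_label)
    # Each dimension is the count of distinct labels observed for that host.
    return {host: tuple(len(s) for s in sets) for host, sets in seen.items()}


def rank_by_distance(vectors):
    """Rank hosts by Euclidean distance from the fleet centroid, farthest first."""
    centroid = [mean(v[i] for v in vectors.values()) for i in range(3)]
    scored = [(host, math.dist(v, centroid)) for host, v in vectors.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up events: four quiet workstations and one that touches every label.
EVENTS = [(f"ws{i}", "morning", "windows", "authentication") for i in range(1, 5)]
EVENTS += [
    ("ws5", "morning", "windows", "authentication"),
    ("ws5", "evening", "network", "execution"),
    ("ws5", "weekend", "cloud", "access"),
]

if __name__ == "__main__":
    for host, distance in rank_by_distance(host_vectors(EVENTS)):
        print(f"{host}: {distance:.2f}")
```

The farthest host is only a lead for a human to investigate. As the caveats above note, an actor working during core hours on well-covered terrain may not move far from the centroid at all.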

AWS CloudTrail Event Cheatsheet: A Detection Engineer’s Guide to Critical API Calls — Part 1 by Muh. Fani (Rama) Akbar

This piece contains a comprehensive compendium (alliteration, woo!) of security and detection-relevant AWS API calls for folks who want to learn more about AWS detection engineering. The most critical and cost-effective way to do it is via AWS CloudTrail. CloudTrail is the control plane log source for AWS, and control plane events refer to administrative actions in this context. Administrator activity, Role creation, and Secret and Key creation are all examples of administrative actions.

So, Akbar took this a step further and mapped out as many security-relevant CloudTrail logs as possible across the MITRE ATT&CK chain. Each MITRE section contains the key events, attacker activities using the AWS CLI, and the relevant SQL detection rule. The reason I love these types of blogs is not just for the educational content, but for the provable elements of the detection. You can run the attack commands on the red team side and observe how they log on the blue team side.
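As a rough illustration of what a control-plane detection looks like, here is a small Python sketch that filters CloudTrail records for a few administrative API calls. This is not one of Akbar's SQL rules. The watchlist is my own short pick based on the examples above (role, key, and secret creation), and the sample records are made up. The field names (`eventSource`, `eventName`, `userIdentity.arn`, `sourceIPAddress`, `eventTime`) follow the standard CloudTrail record format.

```python
# Assumed watchlist of (eventSource, eventName) pairs. Illustrative, not exhaustive.
WATCHLIST = {
    ("iam.amazonaws.com", "CreateRole"): "role creation",
    ("iam.amazonaws.com", "CreateAccessKey"): "access key creation",
    ("secretsmanager.amazonaws.com", "CreateSecret"): "secret creation",
}


def match_events(records):
    """Return a summary dict for every record whose API call is on the watchlist."""
    hits = []
    for record in records:
        key = (record.get("eventSource"), record.get("eventName"))
        if key not in WATCHLIST:
            continue
        hits.append(
            {
                "label": WATCHLIST[key],
                "eventName": record.get("eventName"),
                "actor": record.get("userIdentity", {}).get("arn"),
                "sourceIPAddress": record.get("sourceIPAddress"),
                "eventTime": record.get("eventTime"),
            }
        )
    return hits


# Made-up records shaped like the "Records" array of a CloudTrail log file.
SAMPLE_RECORDS = [
    {
        "eventTime": "2025-01-01T00:00:00Z",
        "eventSource": "iam.amazonaws.com",
        "eventName": "CreateAccessKey",
        "sourceIPAddress": "203.0.113.10",
        "userIdentity": {"arn": "arn:aws:iam::111122223333:user/example"},
    },
    {
        "eventTime": "2025-01-01T00:01:00Z",
        "eventSource": "s3.amazonaws.com",
        "eventName": "ListBuckets",
        "sourceIPAddress": "203.0.113.10",
        "userIdentity": {"arn": "arn:aws:iam::111122223333:user/example"},
    },
]

if __name__ == "__main__":
    for hit in match_events(SAMPLE_RECORDS):
        print(hit)
```

In the spirit of the post, you could trigger one of these calls with the AWS CLI in a test account and confirm the matching record shows up on the blue team side.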

Leveraging Raw Disk Reads to Bypass EDR by Christopher Ellis, Andrew Steinberg and Austin Munsch

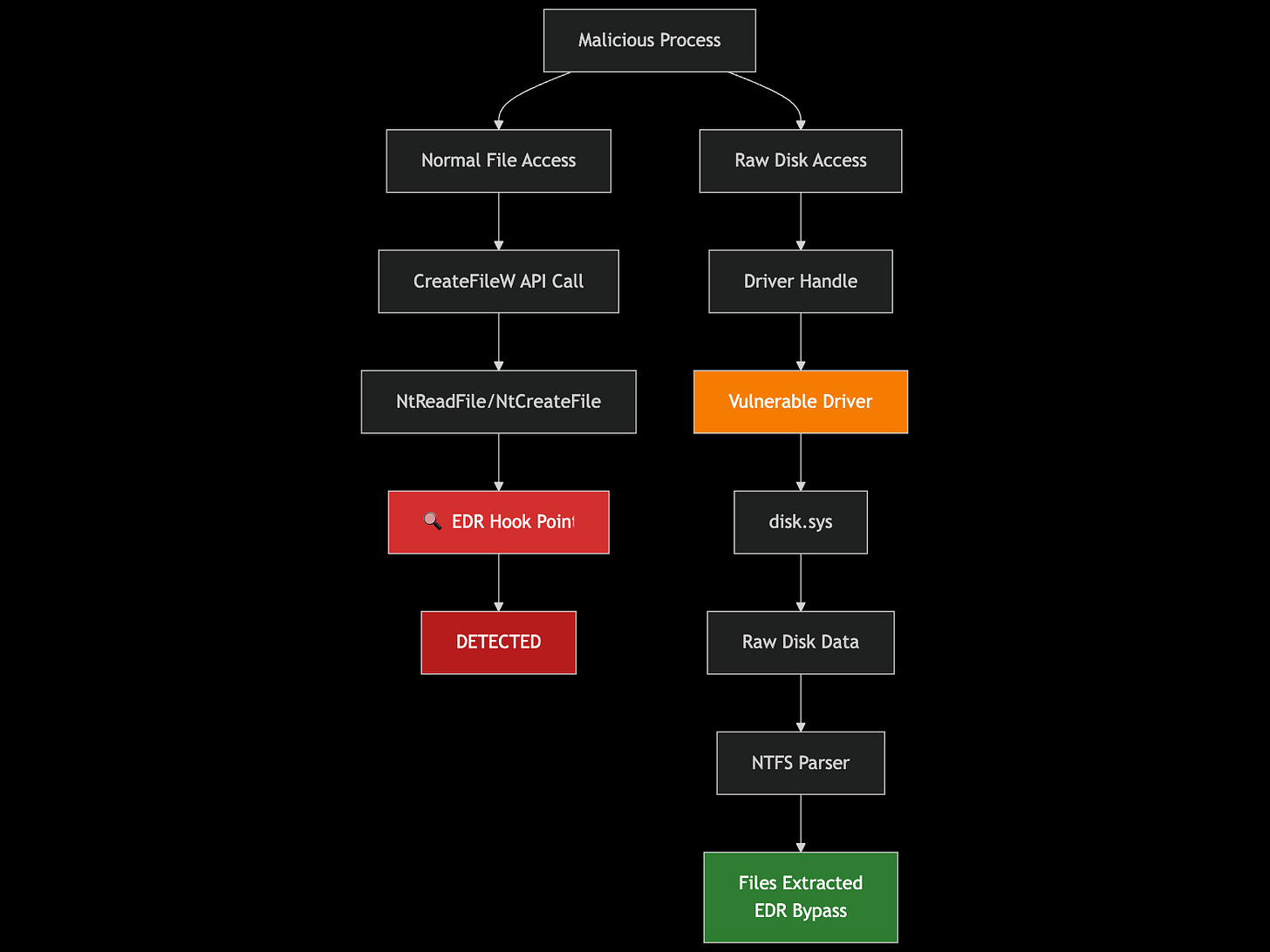

This piece by the offensive security team at Workday exposes how to leverage raw disk reads to the hard drive to bypass controls and monitoring from a modern EDR. The most interesting aspect of this research is that it extends beyond the Windows Operating System (OS) and directly into the process of building your own driver to interact with physical devices. Windows contains several layers of APIs in user-land and kernel space. Some of these layers are a result of compatibility, which Microsoft obsesses over, so that you can ship code and have interoperability with their ecosystem.

These APIs are what EDRs monitor. Function hooking via Kernel callbacks, ETW monitoring, and system filter drivers provide EDR vendors with several ways to detect malicious activity in a process, files, or a user session. But if you can connect to a driver directly, you can avoid these mechanisms and, in this case, read data from sensitive files.

Ellis, Steinberg, and Munsch did just that. If you have a BYOVD or a user with permissions to access the driver, you can run their PoC, which performs the raw disk read and then parses and searches for something like the Windows SAM file. The result is a fileless read that doesn’t trigger an EDR. Here’s a mermaid diagram I created with Claude to visualize the attack flow:

Software Development Nuggets for Security Analysts Part 2: The Browser by David Burkett

This is a continuation of David’s Part 1, released almost three years ago. I love the framing of trying to understand a security concept by first understanding why a software developer, or in this case, a critical piece of software like a web browser, would implement their code in a particular manner. In this case, Burkett studies why browsers are an attractive target for threat actors. But, to understand why they’re appealing, he first breaks down the threat model of their architecture.

The threat model revolves around how a browser stores and protects sensitive data, such as cookies, sessions, and other browser artifacts like extensions that store this type of data. These have to be stored somewhere, so he uses Velociraptor, a forensics tool, to analyze file directories and file names where a threat actor may attempt to read and exfiltrate that data.
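Here is a hedged sketch of how that file-name knowledge can turn into a detection idea: flag any non-browser process that reads a well-known browser credential or cookie store. This is not Burkett's Velociraptor workflow. The event schema (`process`, `path`), the process allowlist, and the sample events are hypothetical. The file names are common Chromium and Firefox profile artifacts that I picked myself, not a list from the post.

```python
# Common artifact file names (lowercased): Chromium-family and Firefox profiles.
SENSITIVE_FILENAMES = {
    "cookies",         # Chromium cookie store
    "login data",      # Chromium saved logins
    "web data",        # Chromium autofill data
    "cookies.sqlite",  # Firefox cookie store
    "logins.json",     # Firefox saved logins
    "key4.db",         # Firefox key database
}

# Assumed allowlist of browser process names. Extend it for your own fleet.
BROWSER_PROCESSES = {"chrome.exe", "msedge.exe", "firefox.exe", "chrome", "firefox"}


def basename(path):
    """Lowercased final path component, accepting both / and \\ separators."""
    return path.replace("\\", "/").rsplit("/", 1)[-1].lower()


def suspicious_reads(events):
    """Return file-read events where a non-browser process touches a sensitive file.

    `events` is an iterable of dicts with 'process' and 'path' keys
    (a hypothetical schema, not any specific EDR's format).
    """
    return [
        event
        for event in events
        if basename(event["path"]) in SENSITIVE_FILENAMES
        and basename(event["process"]) not in BROWSER_PROCESSES
    ]


# Made-up file-read events for illustration.
SAMPLE_EVENTS = [
    {
        "process": r"C:\Program Files\Google\Chrome\Application\chrome.exe",
        "path": r"C:\Users\a\AppData\Local\Google\Chrome\User Data\Default\Network\Cookies",
    },
    {
        "process": r"C:\Users\a\Downloads\updater.exe",
        "path": r"C:\Users\a\AppData\Local\Google\Chrome\User Data\Default\Login Data",
    },
    {
        "process": "/usr/bin/python3",
        "path": "/home/a/.mozilla/firefox/abcd.default/cookies.sqlite",
    },
]

if __name__ == "__main__":
    for event in suspicious_reads(SAMPLE_EVENTS):
        print(event["process"], "->", event["path"])
```

Matching on file name alone will be noisy (backup agents and sync tools read these files too), so treat this as a starting point for a backlog item, not a finished rule.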

☣️ Threat Landscape

When interesting threat landscape news emerges that warrants a deeper discussion, I typically post it under Threat Landscape. Still, I minimize the amount of analysis I provide due to space constraints. I’m going to try something a little different here and go deep on stories from time to time.

⚡ Emerging Spotlight: Salesloft Breach and the Victimology of Developer Tooling and Environments

Update on Mandiant Drift and Salesloft Application Investigations by Salesloft

I, like many other detection and threat intel folks, have availability bias. We base our analysis of news stories and incident details on the information available to us, which, in detection-speak, is whatever falls within our expertise and the sources we typically build detections around. Without news stories or internal incident data, it’s challenging for us to determine whether something is worth defending.

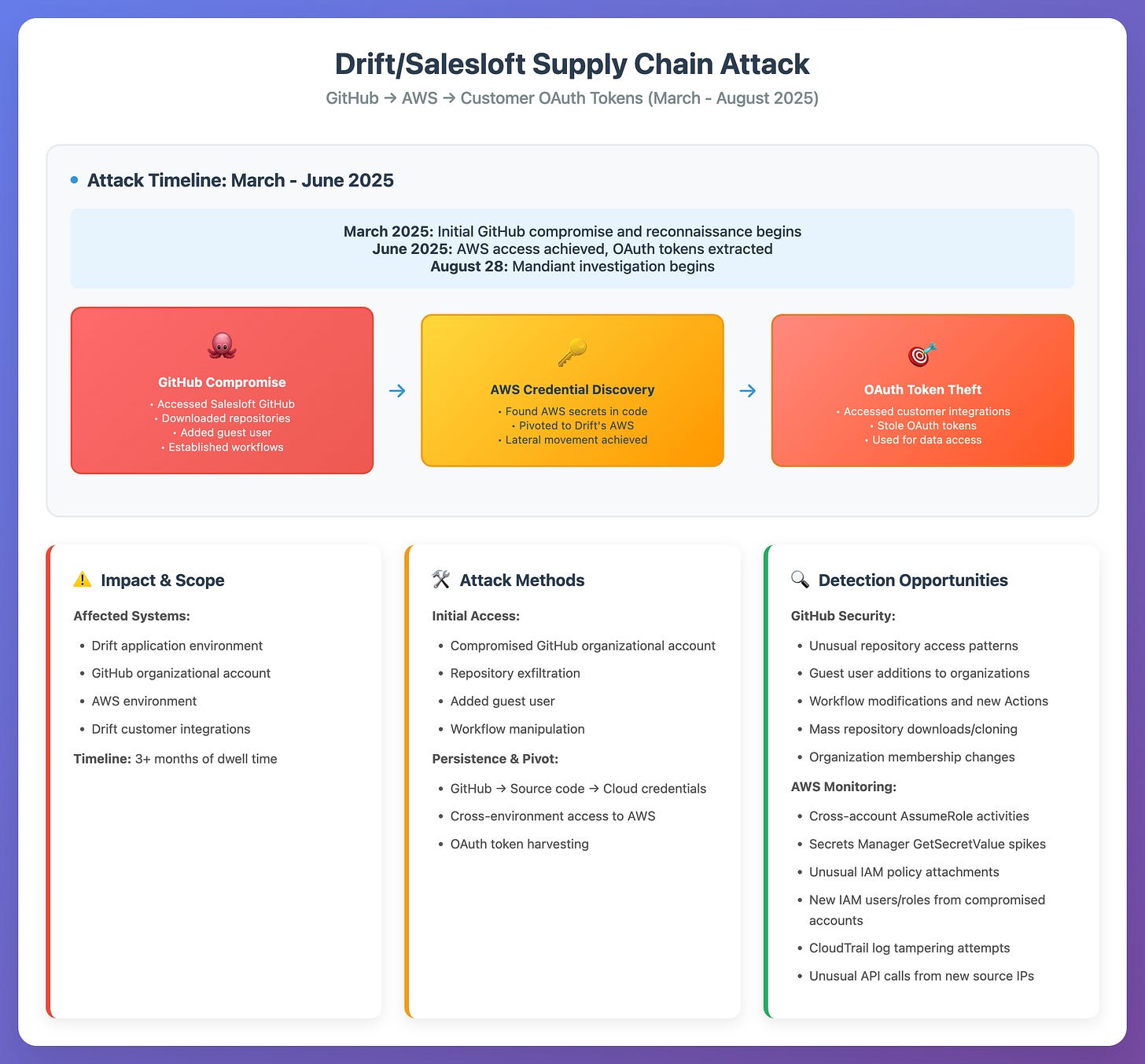

I posted the Drift compromise details in my last issue, but what I’ve linked here is their investigative notes on the breach timeline. I said this on my LinkedIn post, but it reads like a modern red team report. The initial access is unknown, but the actor gained access to Drift’s GitHub organization, performed reconnaissance, and established persistence. They exfiltrated their codebase and then pivoted to their AWS environment, where they extracted OAuth secrets from their customers with the Drift integration. This is where the threat actor began accessing Drift customers and extracting additional data, including Salesforce information, extra secrets, and customer data.

My availability bias here is that this is the first time this has ever occurred, with pivoting techniques affecting GitHub, AWS, and an Application during the compromise chain. I’m amazed and terrified because it’s showcasing that the new victimology, which threat actors target more and more, is developers and their tools.

Below is the breach diagram I made with Claude and posted on social media.

⚡ Quick Hits

NPM debug and chalk packages compromised (HackerNews post)

A single NPM maintainer had their NPM account compromised, resulting in the backdooring of 18 packages with over 2 billion weekly downloads. This is the HackerNews post (his reply is the first one), and it reaffirms my point in the above spotlight post: Threat actors are targeting Developers and their tools.

Contagious Interview | North Korean Threat Actors Reveal Plans and Ops by Abusing Cyber Intel Platforms by Aleksandar Milenkoski, Sreekar Madabushi (Validin) and Kenneth Kinion (Validin)

SentinelOne and researchers from Validin identified a cluster of DPRK actors leveraging Validin to hunt for their command and control (C2) infrastructure in Internet-wide scan data. The amusing part here is that as they set up monitoring and queried these platforms to identify their infrastructure, they in turn provided Validin and SentinelOne with the queries to locate their infrastructure.

s1ngularity's Aftermath: AI, TTPs, and Impact in the Nx Supply Chain Attack by Rami McCarthy

Something is in the air regarding open-source software supply chain attacks. McCarthy does an excellent job summarizing the s1ngularity security incident, which involved the theft of an npm publishing token via a malicious pull request on GitHub. Users who downloaded the latest version had their secrets stolen; many had their private repositories uploaded to GitHub as public, and a peculiar technique of using AI to identify interesting files was employed.

🔗 Open Source

andrewkolagit/DetectPack-Forge

Neat full-stack application that uses Gemini and n8n to turn natural language into Detection rules. You’ll see many companies offering this type of service, but it’s impressive to see something implemented and shared on GitHub.

Yet another “for educational use” post-exploitation and C2 framework. Their docs site looks promising in terms of how it works, so it’d be good to add this to detection backlogs to check for telemetry.

DeepSpaceHarbor/Awesome-AI-Security

Another awesome-* list, this time for AI Security resources. This list is much more academically focused, but you’ll find a few blogs and code examples in there too.

Unix-like Artifact Collector (uac) is a forensic tool designed to collect breach artifacts across various flavors of Unix and Linux. It’s modular, allowing you to add artifact collections via configurable YAML files. It’s no install, meaning the core functionality is a big ol’ shell file, which means it can run on everything from NAS boxes to IoT devices.
