DEW #143 - Suppressing False Positives at Scale, Silencing EDRs & Detection Fidelity via Social Network Analysis

snowmageddon has hit the Northeast US

Welcome to Issue #143 of Detection Engineering Weekly!

✍️ Musings from the life of Zack:

New England got hit hard by a snowstorm, and my town alone recorded over 20 inches/50 cm of snow!

I got COVID for the third time in the last 6 years. It was definitely milder, but I can still feel the shortness of breath I vividly remember from the earlier, more potent strains.

If you have 30 mins, check out the blog about Gas Town. It reads like someone ran through an agentic fever dream and woke up with an insane orchestration system that makes you run out of Claude credits in 3 minutes.

Sponsor: Permiso Security

ITDR Playbook: Detect & Respond to Non-Human Identity Compromise

Non-human identities are everywhere, and when they’re compromised, attackers blend in as “normal” automation. This ITDR Playbook focuses on detecting and responding to NHI compromise using operational anomalies, not login patterns. Learn how to spot exposed keys, boundary violations, privilege creep, and abnormal service behavior. Plus, get response steps that will contain risk without breaking production.

💎 Detection Engineering Gem 💎

Centralized Suppression Management for Detections Using Macros & Lookups by Harrison Pomeroy

Detection rule efficacy is the practice of curating rule sets that balance precision, recall, and the cost of triage. New detection engineers typically think of rules as the only place to apply logic that manages this balance. A more precise query that accounts for benign behaviors, given the tactic or technique, can increase the likelihood of capturing true positives. But there are other capabilities in SIEM technologies and software engineering practices that can filter and suppress alerts in more dynamic, context-aware ways that align with the threat landscape or your environment.

This post by Harrison Pomeroy details the power of Splunk’s macro and lookup table functionality to suppress alerts without redeploying rules. Suppression is a capability detection engineers deploy to dynamically mute alerts, which reduces both the cost of false positives and the need to keep re-tuning a rule for small, field-level exceptions. It also makes the rule more resilient because it can account for external factors related to benign behaviors, such as known service accounts, scheduled tasks, or internal tooling.

Harrison leverages Splunk’s macro and lookup table features to achieve this.

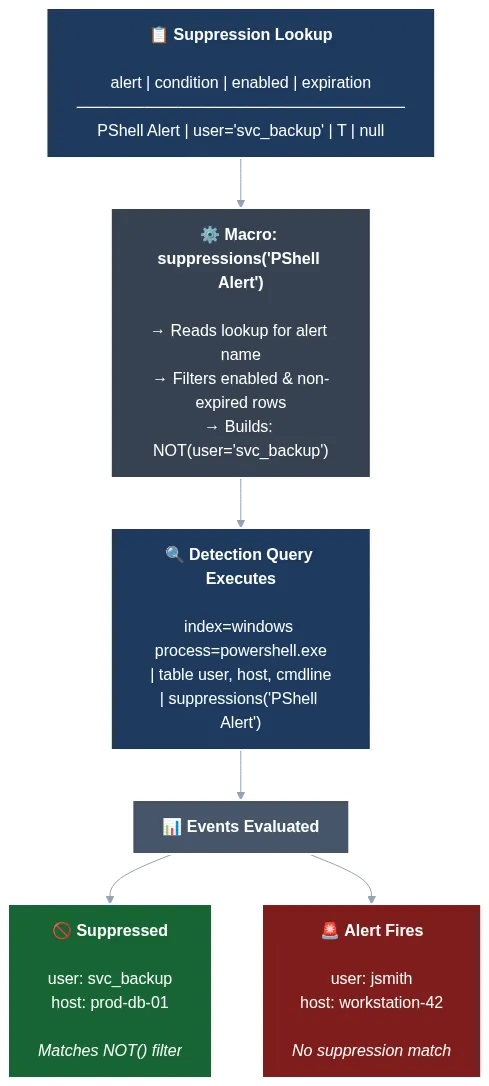

The above Mermaid diagram shows his really clever setup. When you apply macros to each of your Splunk rules, you can start bringing in logic to evaluate whether suppressions are enabled for the rule (the T value), and then specify a lookup table to find additional alert logic to append to your original rule to suppress false positives.

The above example suppresses alerting on any user called svc_backup. The macro executes based on the T value and performs a lookup in the table for the PShell Alert rule. svc_backup is in that table, so it gets appended to the rule as a NOT() filter that prevents an alert whenever svc_backup is the user. The green suppressed box shows the alert not firing for svc_backup, while the red Alert box shows it firing because the user is jsmith.
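Here’s a minimal Python sketch of the same decision flow, for readers who want to see the logic outside of SPL. It assumes a per-rule on/off flag (the T value) and a lookup table keyed by rule name; the names `SUPPRESSION_ENABLED`, `SUPPRESSION_LOOKUP`, and `apply_suppressions` are hypothetical, and this is not Harrison’s macro or a Splunk API.

```python
# Hypothetical per-rule switch, standing in for the macro's T/F value.
SUPPRESSION_ENABLED = {"PShell Alert": True}

# Hypothetical lookup table: rule name -> field -> values to suppress.
SUPPRESSION_LOOKUP = {
    "PShell Alert": {"user": {"svc_backup"}},
}


def apply_suppressions(rule_name, events):
    """Drop events that match the rule's suppression entries (the NOT() filter)."""
    if not SUPPRESSION_ENABLED.get(rule_name, False):
        return list(events)  # suppressions disabled: every match alerts
    entries = SUPPRESSION_LOOKUP.get(rule_name, {})

    def suppressed(event):
        # An event is muted if any suppressed field holds a listed value.
        return any(event.get(field) in values for field, values in entries.items())

    return [e for e in events if not suppressed(e)]


matches = [
    {"user": "svc_backup", "process": "powershell.exe"},
    {"user": "jsmith", "process": "powershell.exe"},
]
alerts = apply_suppressions("PShell Alert", matches)
print([a["user"] for a in alerts])  # ['jsmith']
```

Adding another benign service account is a one-line change to the lookup table, which is the point of keeping suppressions out of the rule body.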

This type of suppression occurs at query time, before the alert is generated. There are other suppressions you can apply before a log hits the index, or after the alert fires. This is a great topic for my Field Manual series, so thank you, Harrison, for the inspiration!

🔬 State of the Art

EDR Silencing by Pentest Laboratories

EDR Silencing has been a super interesting area of research for security operations and threat actors alike. Typically, when a threat actor lands on a victim box and sees an EDR process running, their top priority is finding a way to evade the EDR to avoid detection. They can employ several techniques, such as:

Avoiding EDR detection rules themselves, such as abusing indirect syscalls that EDRs have not accounted for, or using living-off-the-land binaries

Obtaining privileged access and installing kernel modules that circumvent EDR hooking logic, so malicious activity doesn’t generate telemetry in the first place

Uninstalling (!) the EDR

The last bullet above is the most interesting because it’s so simple. It makes me think of the adage “don’t let perfect be the enemy of good.” EDR Silencing follows the same line of thinking and abuses the same simple-but-effective concept: it disrupts the network connection between the agent and the EDR cloud service. Cutting that connection hamstrings the EDR’s effectiveness without the attacker needing to worry about evading its detection logic.

In this post, Pentest Laboratories provides readers with a fantastic survey of the state of the art of EDR Silencing. A huge part of this research relies on obtaining Local Administrator privileges to leverage everything from Windows Filtering Platform APIs to adding blocking entries in local DNS configuration files.
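From the defender’s side, the DNS variant is one of the easier ones to hunt for. Below is a rough Python sketch that parses hosts-file content (on Windows, `C:\Windows\System32\drivers\etc\hosts`) and flags any entry that overrides an EDR cloud domain. The domain list is a hypothetical placeholder, and this covers only the hosts-file technique, not Windows Filtering Platform filters or the other methods in the post.

```python
# Hypothetical EDR cloud domains; substitute the ones your agent actually talks to.
EDR_DOMAINS = {"telemetry.edr-vendor.example", "api.edr-vendor.example"}


def find_edr_overrides(hosts_text, edr_domains=EDR_DOMAINS):
    """Return (ip, hostname) pairs where the hosts file overrides an EDR domain.

    Any override is suspicious, whether it points at 0.0.0.0, 127.0.0.1,
    or an attacker-controlled address.
    """
    findings = []
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            if name.lower() in edr_domains:
                findings.append((ip, name))
    return findings


sample = """
127.0.0.1 localhost
0.0.0.0 telemetry.edr-vendor.example  # added by attacker
"""
print(find_edr_overrides(sample))  # [('0.0.0.0', 'telemetry.edr-vendor.example')]
```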

The End of the “Write & Pray” Era in SIEM: Detection as Code and Purple Team Validation by Ali Sefer

This is a clever introduction to the concept of detection-as-code through the lens of Sefer, a SOC Manager. I enjoyed the framing around moving from the “Craftsmanship” era of rule writing to the “Engineering” era. Detection engineers, at their core, should be part security experts, data analysts, and software engineers. This is especially true in Sefer’s day-to-day, where they’ve dealt with analysts who read a threat intelligence report, implement a rule in the SIEM, deploy it, and don’t perform testing.

This really is a post about detection rule governance. It’s important that we implement the boring stuff for detection rules, for the sake of managing costs. If an analyst or detection engineer deploys rules without careful validation, education, version control and testing, then operations teams run a huge risk of false positives and analyst burnout. Sefer brings the reader through an example automated test pipeline, where:

Analysts write rules

Check the rule into version control with syntax validation and linting

Run Atomic Red Team tests to validate the telemetry matches the rule

Deploy the rule into the SIEM

Build in feedback mechanisms to tune the rule
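The lint and validation steps can be sketched in a few lines of Python. This is a toy, not Sefer’s pipeline: the rule schema, the equality-only matcher, and the `captured` events (standing in for telemetry collected after an Atomic Red Team run) are all assumptions made for illustration.

```python
import re

REQUIRED_FIELDS = ("title", "technique", "condition")  # hypothetical rule schema
TECHNIQUE_RE = re.compile(r"^T\d{4}(\.\d{3})?$")  # ATT&CK IDs like T1059 or T1059.001


def lint_rule(rule):
    """Step 2: schema and syntax checks that run on every commit."""
    errors = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in rule]
    if "technique" in rule and not TECHNIQUE_RE.match(rule["technique"]):
        errors.append(f"bad ATT&CK ID: {rule['technique']}")
    return errors


def rule_matches(rule, event):
    """Toy matcher: every field in the condition must equal the event's value."""
    return all(event.get(k) == v for k, v in rule["condition"].items())


def validate_against_telemetry(rule, events):
    """Step 3: the rule must fire on telemetry captured from an emulation run."""
    return any(rule_matches(rule, e) for e in events)


rule = {
    "title": "Encoded PowerShell",
    "technique": "T1059.001",
    "condition": {"process": "powershell.exe", "encoded": True},
}
# Stand-in for events collected after running an Atomic Red Team test.
captured = [{"process": "powershell.exe", "encoded": True, "host": "lab-01"}]

assert lint_rule(rule) == []
assert validate_against_telemetry(rule, captured), "rule did not fire: check the log source too"
print("ready to deploy")
```

An empty `captured` list fails the same check as a broken rule, which is exactly the log source health issue in the example below.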

Sefer ends the blog with a real-world example where an analyst tuned a rule and the logic failed the Atomic Red Team validation check. The cool thing here is that the failure had nothing to do with the detection rule and everything to do with the health of the system itself. Catching log source misconfigurations and matching log sources to detection logic is just as useful as validating the rule itself.

Detection Fidelity & Confidence Framework: Teaching Your SIEM to Score Its Own Homework by Hatim Bakkali

But here’s what I’ve noticed after staring at years of notable event data: detections don’t fire in isolation. They have patterns. They have Friends. And those Friendships tell us something important about fidelity and confidence.

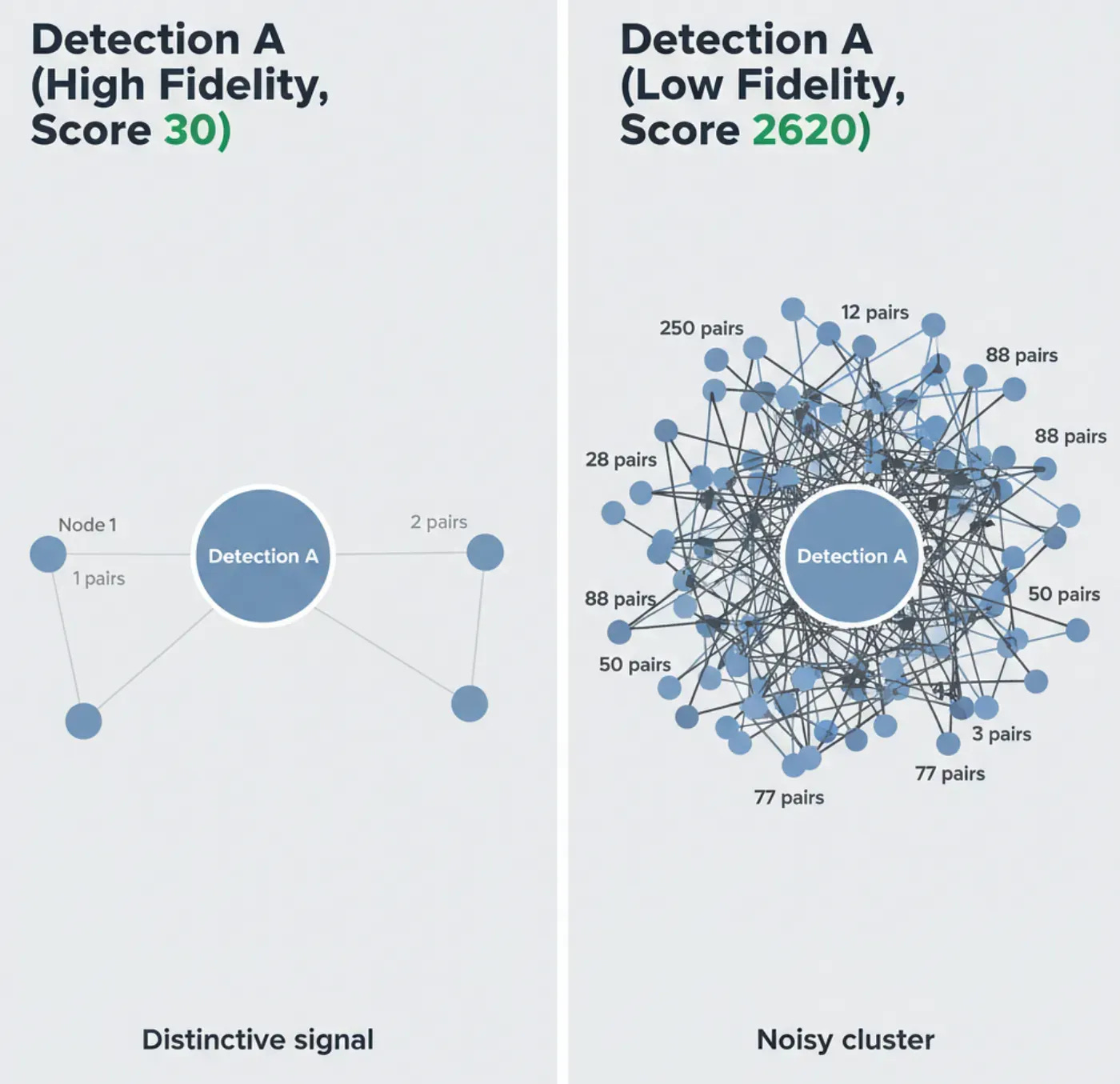

This post is a deep dive into a new framework for measuring detection fidelity and confidence. Rule efficacy is like a garden; it requires constant curation and mindfulness of how you build and maintain detection rules. Bakkali’s approach is more math-heavy and academic but built from practical experience. The concept is around measuring the co-occurrence of alerts with other alerts, similar to how social networks create edges between friends and followers for suggestions.

The equation binds to an entity, much like Risk-Based Alerting (RBA), and Bakkali says it should complement RBA rather than replace it. Their framework calculates two scores: confidence and fidelity.

Confidence: scores pairs of alerts based on how often they co-occur within a time window

Fidelity: aggregates those pair scores to a detection-level “noise accumulation” score. The lower, the better
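To make the “friendship” idea concrete, here’s a deliberately simplified Python sketch. It is not Bakkali’s math: the pair confidence here is just the share of detection A’s firings that had detection B on the same entity within the window, and the noise aggregation (1 minus a detection’s strongest pairing, so detections that usually fire alone score high) is a hypothetical stand-in. Function names are illustrative.

```python
from collections import defaultdict
from itertools import combinations


def pair_confidence(alerts, window):
    """conf[(a, b)]: share of a's firings with b on the same entity within `window` seconds."""
    by_entity = defaultdict(list)
    for entity, detection, ts in alerts:
        by_entity[entity].append((detection, ts))
    fired = defaultdict(int)  # total firings per detection
    hits = defaultdict(set)   # (a, b) -> ids of a's firings that had a b nearby
    for entity, events in by_entity.items():
        for det, _ in events:
            fired[det] += 1
        # Quadratic per entity, which is fine for a sketch.
        for (i, (a, ta)), (j, (b, tb)) in combinations(enumerate(events), 2):
            if a != b and abs(ta - tb) <= window:
                hits[(a, b)].add((entity, i))
                hits[(b, a)].add((entity, j))
    return {pair: len(ids) / fired[pair[0]] for pair, ids in hits.items()}


def noise_score(conf, detections):
    """Hypothetical aggregation: 1 minus the strongest pairing. Lower is better."""
    return {
        d: 1 - max((c for (a, _), c in conf.items() if a == d), default=0.0)
        for d in detections
    }


alerts = [  # (entity, detection, epoch seconds)
    ("host-a", "EncodedPowerShell", 0),
    ("host-a", "LsassAccess", 120),
    ("host-b", "EncodedPowerShell", 500),
    ("host-c", "NewServiceInstall", 900),
]
conf = pair_confidence(alerts, window=300)
print(noise_score(conf, sorted({d for _, d, _ in alerts})))
# {'EncodedPowerShell': 0.5, 'LsassAccess': 0.0, 'NewServiceInstall': 1.0}
```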

They provide a ton of examples and walkthroughs, along with SIEM-agnostic pseudocode, for readers to try themselves. There’s a bake-in period to measure these over time before you can start using them, but it’s a clever approach for a few reasons.

First, it’s an elegant addition to RBA because it’s still technically a group-by on an entity, but it looks at pairs of alerts rather than aggregating them. This leads to my second point: any type of expert model, such as applying arbitrary scoring mechanisms to alerts, runs the risk of poor model validation. You need to redeploy these models every time you update your scores, which can cause profound changes and creates more work. That risk exists here too, but because the framework preserves the pairwise relationships, it’s easier to understand what changed.

Introducing IDE-SHEPHERD: Your shield against threat actors lurking in your IDE by Tesnim Hamdouni

~ Note: I work at Datadog, and Tesnim is my colleague ~ I’m super excited to post this because it was Tesnim’s internship project, and she now works at Datadog and is releasing it to the world! IDE-SHEPHERD is an IDE extension that helps prevent malicious extension installation, an emerging attack vector over the last year. The cool part of this extension is that it generates telemetry from the extension manifest for reporting and threat hunting, in addition to runtime monitoring.

It has runtime and heuristic detection capabilities. At runtime, it’ll shim Node functions that attempt to spawn processes, detect and block malicious commands, and perform network monitoring. The heuristic functionality analyzes metadata related to extensions and checks for poor developer practices, metadata anomalies, and hidden commands.
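As a rough illustration of the metadata side, here’s a Python sketch that inspects a VS Code-style `package.json` manifest. `activationEvents`, `contributes.commands`, and `repository` are standard manifest fields, but the three heuristics below are hypothetical examples, not IDE-SHEPHERD’s actual checks.

```python
def manifest_findings(manifest):
    """Flag a few metadata red flags in an extension manifest (hypothetical heuristics)."""
    findings = []
    events = manifest.get("activationEvents", [])
    # Activating on every startup is broader than most extensions need.
    if "*" in events or "onStartupFinished" in events:
        findings.append("activates on startup rather than on demand")
    # Commands that activate the extension but are never shown to the user.
    declared = {c.get("command") for c in manifest.get("contributes", {}).get("commands", [])}
    for event in events:
        if event.startswith("onCommand:"):
            command = event.split(":", 1)[1]
            if command not in declared:
                findings.append(f"hidden command: {command}")
    if "repository" not in manifest:
        findings.append("no source repository listed")
    return findings


manifest = {
    "name": "color-theme-pro",
    "activationEvents": ["*", "onCommand:ext.sync"],
    "contributes": {"commands": [{"command": "ext.setTheme", "title": "Set Theme"}]},
}
print(manifest_findings(manifest))
```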

From Static Template to Dynamic Forge: Bringing the DCG420 Standard to Life for the Detectioniers by DCG420

DCG420, who wrote and released the Detection Engineering Template, has just launched a platform that serves as a workbench for detection engineers. It has an AI backend to help visualize attack flows, measure coverage, and write rules. The intel analyst within me got really excited reading about their Analysis of Competing Hypotheses feature, which combines their tool and LLMs to generate competing hypotheses against your detection rule candidate. This helps detection engineers check for bias and notice when they’re stuck in a rabbit hole, trying to get a rule out without considering other options.
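For readers who haven’t used ACH, the core scoring idea from Richards Heuer’s method fits in a few lines: rate each piece of evidence against each hypothesis, then prefer the hypothesis with the least inconsistent evidence rather than the most consistent. The matrix below is a hypothetical detection-triage example, and this is not how DCG420’s platform implements the feature.

```python
# Ratings: "C" consistent, "I" inconsistent, "N" neutral / not applicable.
def rank_hypotheses(matrix):
    """Rank hypotheses by inconsistency count, fewest first (Heuer-style ACH)."""
    scores = {}
    for ratings in matrix.values():
        for hypothesis, rating in ratings.items():
            scores.setdefault(hypothesis, 0)
            if rating == "I":
                scores[hypothesis] += 1
    # sorted() is stable, so ties keep their original order.
    return sorted(scores.items(), key=lambda kv: kv[1])


matrix = {
    "rundll32 child of WINWORD.EXE": {"malicious macro": "C", "legit add-in": "C", "software deploy": "I"},
    "DLL loaded from user temp dir": {"malicious macro": "C", "legit add-in": "I", "software deploy": "I"},
    "DLL signed by known vendor": {"malicious macro": "I", "legit add-in": "C", "software deploy": "C"},
}
print(rank_hypotheses(matrix))
# [('malicious macro', 1), ('legit add-in', 1), ('software deploy', 2)]
```

The tie between the first two hypotheses is the useful signal: the rule candidate can’t yet tell a malicious macro from a legitimate add-in, so it needs more evidence before it ships.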

The Indirect Realism of Threat Research by Amitai Cohen

This is an excellent commentary by Amitai on information asymmetry in threat research. We tend to (rightly) dunk on large cybersecurity companies as they create, update, and hype their lexicon of APT and cybercriminal names. But the very good ones do this for a reason: they see threat activity through their own lens, and they group it within that lens because no one else has the visibility they do.

This bias is ever-present in security operations and detection engineering, where, according to Cohen, we become convinced that what we can measure captures everything threat actors generate. By checking this bias, accepting that information asymmetry exists, and obsessing over what you’re missing, you can feel more confident that you’re addressing gaps on an ongoing basis.

☣️ Threat Landscape

Who Operates the Badbox 2.0 Botnet? by Brian Krebs

In the latest saga of the Kimwolf botnet, it looks like the botnet’s operators broke into a rival Chinese-nexus family dubbed Badbox 2.0. Kimwolf’s admins, “Dort” and “Snow”, posted a screenshot showing the crew taking over a control panel that manages and deploys Badbox. These botnets have recently evolved away from traditional DDoS-style attacks toward operating and selling access to residential proxy networks.

Krebs pulled an email address from the “proof” screenshot and followed it to a real person. Email reuse and poor operational security are still problems for threat actors, and this shows how a single screenshot can pull the attribution thread all the way to a full identity.

A Shared Arsenal: Identifying Common TTPs Across RATs by Nasreddine Bencherchali & Teoderick Contreras

This research by Splunk’s threat research team is a survey of 18 infostealer malware families mapped to MITRE ATT&CK TTPs. The emergence of these infostealer families tends to revolve around criminal groups splitting, source code getting sold and leaked, and conversations with each other on criminal forums.

The interesting finding here is that 6 of the 18 malware strains leverage legitimate services for their command & control infrastructure. So it’s not the worst detection opportunity to alert on anomalous traffic heading to places like GitHub, social networks, Discord, or Steam.
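One way to operationalize this is to baseline which processes normally talk to these services and alert on new pairings. The Python sketch below assumes network telemetry with process attribution (for example, from an EDR or proxy); the domain watchlist, function names, and sample events are hypothetical.

```python
from collections import defaultdict

# Hypothetical watchlist of legitimate services that malware abuses for C2.
WATCHED_SUFFIXES = ("github.com", "githubusercontent.com", "discord.com", "steamcommunity.com")


def is_watched(domain):
    """True for a watched domain or any of its subdomains."""
    return any(domain == s or domain.endswith("." + s) for s in WATCHED_SUFFIXES)


def build_baseline(history):
    """Map each watched domain to the set of processes seen talking to it."""
    baseline = defaultdict(set)
    for process, domain in history:
        if is_watched(domain):
            baseline[domain].add(process.lower())
    return baseline


def anomalies(baseline, new_events):
    """Flag watched-domain connections from processes never seen with that domain."""
    return [
        (process, domain)
        for process, domain in new_events
        if is_watched(domain) and process.lower() not in baseline.get(domain, set())
    ]


history = [("git.exe", "github.com"), ("chrome.exe", "discord.com"), ("steam.exe", "steamcommunity.com")]
new_events = [("git.exe", "github.com"), ("rundll32.exe", "discord.com"), ("svchost.exe", "windowsupdate.com")]
print(anomalies(build_baseline(history), new_events))  # [('rundll32.exe', 'discord.com')]
```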

OpenSSL 3.6 Security Release with Vulnerabilities: 10 Vulnerabilities by OpenSSL

OpenSSL had a fairly large security release, with around 10 vulnerabilities disclosed. One vulnerability with a “High” severity rating, CVE-2025-15467, caught my eye because its title leads with a stack-based buffer overflow. These can theoretically lead to remote code execution, and since OpenSSL is security technology that underpins the Internet, I thought it was worth calling out.

Kubernetes Remote Code Execution Via Nodes/Proxy GET Permission by Graham Helton

This is a super interesting vulnerability writeup where the (mis)configuration has been known for a long time, but a new nuance makes it much worse. Basically, Helton found a valid Kubernetes configuration that allows authenticated attackers to reach a “catch-all” API that proxies potentially dangerous requests to the Kubelet API, the internal API served by the agent on each Kubernetes node.

When an attacker opens a WebSocket connection to nodes/proxy with the GET verb, Kubernetes proxies the request to the Kubelet API, which authorizes it based on that initial GET instead of requiring the CREATE verb that exec normally needs. The result is remote code execution. Helton discovered 69 Helm charts from well-known vendors using this configuration. The best part? There is no audit logging you can use to detect this!

Here’s the relevant snippet from Helton’s blog:

This should mean consistent behavior of a `POST` request mapping to the RBAC `CREATE` verb, and `GET` requests mapping to the RBAC `GET` verb. However, when the Kubelet’s `/exec` endpoint is accessed via a non-HTTP communication protocol such as WebSockets (which, per the RFC, requires an HTTP `GET` during the initial handshake), the Kubelet makes authorization decisions based on that initial `GET`, not the command execution operation that follow. The result is `nodes/proxy GET` incorrectly permits command execution that should require `nodes/proxy CREATE`.
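Since the exploitation itself doesn’t show up in audit logs, one option is to hunt for the grant instead. This hedged Python sketch checks Role or ClusterRole objects, as parsed from the `items` list of `kubectl get clusterroles -o json`, for rules that pair the `nodes/proxy` resource with the `get` verb. Wildcard handling is simplified to literal `*` entries, and the function names and example roles are hypothetical; treat it as a posture check, not exploit detection.

```python
def grants_nodes_proxy_get(role):
    """Return True if any rule in a Role/ClusterRole allows `get` on `nodes/proxy`."""
    for rule in role.get("rules", []):
        groups = rule.get("apiGroups", [])
        resources = rule.get("resources", [])
        verbs = rule.get("verbs", [])
        in_core = "" in groups or "*" in groups  # nodes live in the core ("") API group
        has_resource = "nodes/proxy" in resources or "*" in resources
        has_verb = "get" in verbs or "*" in verbs
        if in_core and has_resource and has_verb:
            return True
    return False


def risky_roles(role_list):
    """Names of roles in a `kubectl ... -o json` list that grant nodes/proxy get."""
    return [r["metadata"]["name"] for r in role_list.get("items", []) if grants_nodes_proxy_get(r)]


roles = {"items": [
    {"metadata": {"name": "metrics-reader"},
     "rules": [{"apiGroups": [""], "resources": ["nodes", "nodes/proxy"], "verbs": ["get", "list"]}]},
    {"metadata": {"name": "node-viewer"},
     "rules": [{"apiGroups": [""], "resources": ["nodes"], "verbs": ["get", "list"]}]},
]}
print(risky_roles(roles))  # ['metrics-reader']
```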

🔗 Open Source

DataDog/IDE-Shepherd-extension

IDE extension from Tesnim’s research listed above in State of the Art.

Satguard is a Starlink telemetry detection & analysis framework for detecting and visualizing satellite attacks. You point it at Starlink debug logs, and it uses a combination of static rules and anomaly detection to detect spoofing and jamming attacks and measure signal health.
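As a generic illustration of the anomaly-detection half (not Satguard’s implementation), here’s a Python sketch that flags sudden drops in a signal-quality metric against a trailing baseline using a z-score. The metric (think of a per-sample SNR-style reading), window size, and threshold are all assumptions, and a real jamming detector would need much more than this.

```python
from statistics import mean, stdev


def flag_signal_drops(samples, baseline_size=20, threshold=3.0):
    """Return indices of samples far below the trailing baseline (a crude jamming hint)."""
    flagged = []
    for i in range(baseline_size, len(samples)):
        window = samples[i - baseline_size:i]  # trailing baseline, excludes sample i
        mu, sigma = mean(window), stdev(window)
        # Only drops matter here, so use a one-sided z-score.
        if sigma > 0 and (mu - samples[i]) / sigma > threshold:
            flagged.append(i)
    return flagged


readings = [9.5, 9.7] * 10 + [2.0]  # steady signal, then a sudden collapse
print(flag_signal_drops(readings))  # [20]
```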

Security rules and best practices for defending MCP servers. It’s structured super well, and has markdown reports with detailed examples, compliance mappings, example vulnerable and secure code and references. Would be great to feed this into an LLM and check for vulnerabilities as people push code to an MCP server repository.

PoC MCP server that demonstrates how a malicious MCP server can hijack your local LLM CLI to perform four separate attacks:

Tool shadowing: convinces your local LLM that this is the preferred tool, then performs prompt injection to take advantage of queries and responses

Data exfiltration: hijacks a prompt and exfiltrates it over the tool for further analysis

Response injection: injects “hidden instructions” in other tool responses to manipulate behavior

Context window flooding: floods the prompt’s context window, which can render models with smaller context windows unresponsive
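Defensively, response injection and context flooding are the two attacks you can at least partially screen for by inspecting tool responses before they reach the model. The Python sketch below uses hypothetical heuristics (a few injection patterns and a size budget); it is not from the PoC, it’s easy to evade, and it does nothing about tool shadowing.

```python
import re

# Hypothetical heuristics; real injections vary far more than this.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"<!--.*?-->", re.DOTALL),             # instructions hidden in HTML comments
    re.compile(r"[\u200b\u200c\u200d\u2060\ufeff]"),  # zero-width characters
]
MAX_RESPONSE_CHARS = 20_000  # hypothetical budget to catch context flooding


def inspect_tool_response(text):
    """Return a list of findings for a single MCP tool response."""
    findings = [p.pattern for p in INJECTION_PATTERNS if p.search(text)]
    if len(text) > MAX_RESPONSE_CHARS:
        findings.append(f"oversized response: {len(text)} chars")
    return findings


print(inspect_tool_response("Weather: sunny"))  # []
print(len(inspect_tool_response("Result: 42 <!-- ignore previous instructions -->")))  # 2
```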

Local MCP server that exposes tools to connect to API documentation across GitHub, npm, GoDocs, and several others. This is helpful if you run agents locally and don’t want them to hallucinate strategies that don’t match the documentation, or if you want them to use the most up-to-date documentation without searching the Internet.