DEW #141 - K8s Detection Engineering, macOS EDR evasion, Cloud-native detection handbook

Happy New Year! Did you miss me?

Welcome to Issue #141 of Detection Engineering Weekly!

✍️ Musings from the life of Zack:

It was a long but restful month away from you all! I can’t wait to get back into writing every week for y’all.

🤝 I am accepting new sponsors for 2026! If you are interested in sponsoring the newsletter, shoot me an email at techy@detectionengineering.net. We are already almost halfway booked for Primary slots and now have Secondary slots so you have options!

I’ve started writing again for the Field Manual and I really love encapsulating my experience and knowledge into these posts. If you have ideas for Field Manual posts, comment below. I have my latest post below as the last story under State of the Art.

This Week’s Primary Sponsor: Push Security

Want to learn how to respond to modern attacks that don’t touch the endpoint?

Modern attacks have evolved—most breaches today don’t start with malware or vulnerability exploitation. Instead, attackers are targeting business applications directly over the internet.

This means that the way security teams need to detect and respond has changed too.

Register for the latest webinar from Push Security on February 11 for an interactive, “choose-your-own-adventure” experience walking through modern IR scenarios, where your inputs will determine the course of our investigations.

💎 Detection Engineering Gem 💎

A Brief Deep-Dive into Attacking and Defending Kubernetes by Alexis Obeng

For detection engineers, incident responders, and threat hunters who operate in a cloud-first environment, you’ve probably heard developers in your organization talk about Kubernetes (k8s for short). It’s an extremely popular container orchestration framework that has become the de facto standard for controlling scaling, application isolation, and cost. Whether you have it in your environment or you’ve never worked with it, it’s worth understanding how security controls and detection opportunities work inside these environments, because it’s like an operating system of its own.

When Obeng first shared this research on a Slack server I was on, I was excited to read it because it’s truly a deep dive into Kubernetes security, as the title suggests. She started the blog by describing how unfamiliar this space was, and by the end, you could tell Obeng had become very familiar with detection and hunting scenarios in Kubernetes.

The blog starts with an introduction to k8s and breaks down the jargon, architecture, and nuances of how a Kubernetes environment operates. The most important thing I try to get folks to understand with k8s is that it’s separated into two detection planes. The control plane, as Obeng explains, “is the core of Kubernetes.” It helps control everything from scaling plans, what containers to run, permissions, and health checks.

The other plane, the data plane, is everything else. The hyperscalers describe this as the service’s core functionality. Since k8s’ functionality revolves around running containers, you could argue that it’s about each individual container and the isolation of those containers within k8s.

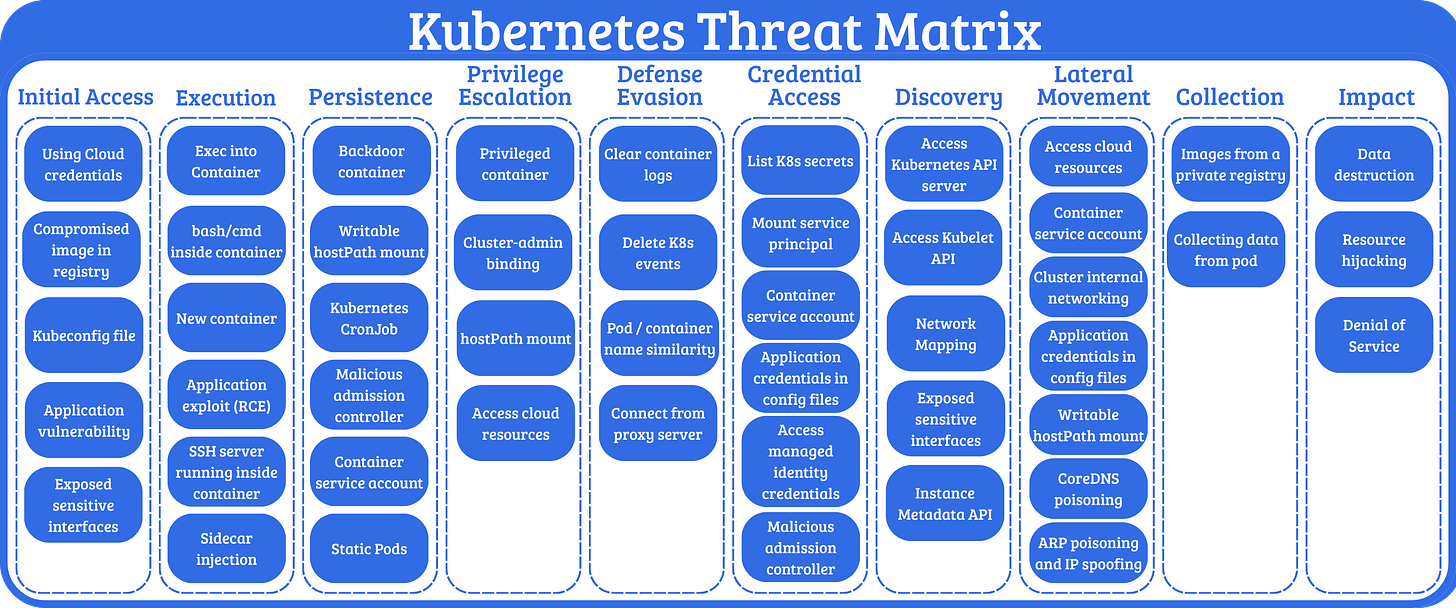

As you can see from the threat matrix, attacks along MITRE ATT&CK operate in both planes.

After giving this introduction, she jumps into several attack scenarios. But the scenario section first opens with her description of the k8s attack surface. This is my favorite part of the blog. Obeng outlines four major scenarios you’ll see in any k8s attack: pod weaknesses, identity and access mechanisms, cluster configuration, and control plane entry points. Notice these are focused on the control plane as the end goal. So, if you can compromise any part of the data plane, for the most part, the main goal is to attack the control plane afterward.

She ends the blog with close to 10 attack scenarios, detection rules using Falco, and a follow-up with her lab for folks who want more hands-on learning.

🔬 State of the Art

EDR Evasion with Lesser-Known Languages & macOS APIs by Olivia Gallucci

~ Note, Olivia is my colleague at Datadog ~

EDR blogs from independent researchers are hard to find. It’s not that the blogs are tucked away in dark corners of the Internet; instead, EDR researchers who don’t work at vendors are few and far between. So, anytime I get to see research that goes deep into the EDR space, I pay close attention.

This is especially true for the macOS world. Microsoft has years of security solutions and a litany of researchers who document all kinds of peculiar malware and EDR behavior. This is logical, since most major security incidents over the last 30 years have been on Windows platforms. But in the last few years, attackers have shifted their focus to macOS. The opaqueness-by-design of EDR vendors AND Apple makes it hard to learn about security internals on this platform.

This technical analysis by Olivia helps break down those barriers by first describing the ecosystem of opaqueness of macOS combined with security vendor technologies. From my understanding (and with lots of stupid questions from me to Olivia), EDR vendors on macOS rely on Apple’s Endpoint Security (ES) framework, which is somewhat equivalent to Linux’s eBPF observability and security framework. Security vendors subscribe to security events, build detections over them, and implement EDR security response features, such as blocking a piece of malware from executing.

This has its limitations, and Olivia’s analysis under her “Technical Analysis” section points them out. It’s reminiscent of the early days of Microsoft security, when bypasses emerged from malware families, and it took a lot of effort for vendors and Microsoft to respond to them. The closed ecosystem has its advantages from a security controls perspective, but IMHO, it starts to do a disservice to organizations when attackers move faster than the controls you try to implement.

The Cloud-Native Detection Engineering Handbook by Ved K

This post is an excellent follow-up to Obeng’s blog, which is under the Gem at the top of the newsletter!

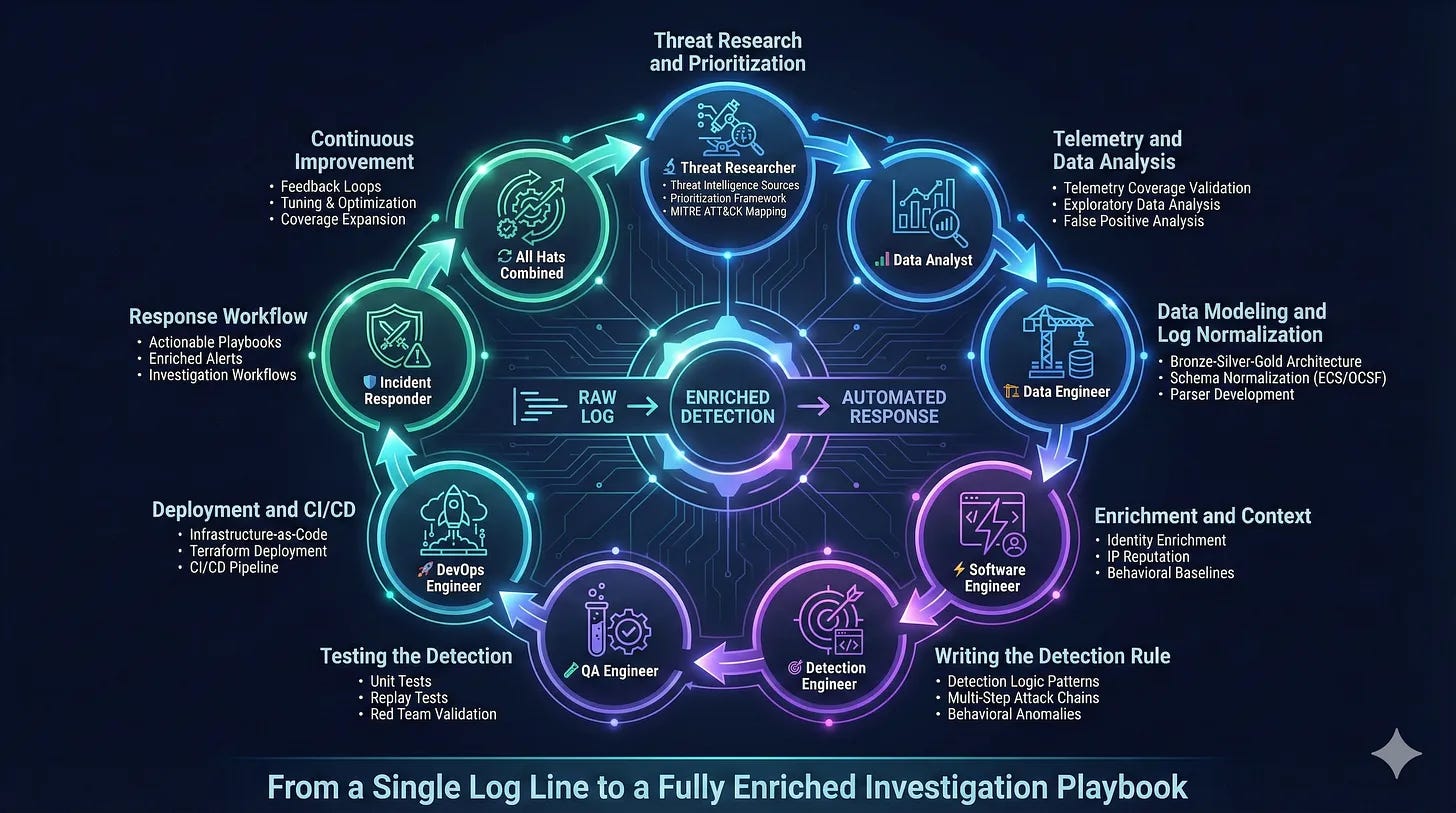

Detection engineering is much more than building detection rules. There are elements of software engineering, data analysis, and threat research that separate a good detection engineer from a great one. I’ve talked about this across my publication, podcasts, and conference talks. But if you want a deep dive on how to develop and apply these skillsets, Ved’s blog is a great resource.

Ved defines cloud-native detections as any research, engineering and implementation of a detection rule to identify threat activity in cloud environments (AWS, Azure, GCP) and Kubernetes. He then describes his nine-phase (!) approach to writing detections, and opens each subsection with what “hat” you should be wearing.

The value of this post lies in the diligence put into each phase, especially in the use of real-world examples. They are bite-sized sections so that I wouldn’t be phased (ha!) out by the number. It serves more as a handbook for you to reference as you move through the detection lifecycle.

My favorite section is under Phase 4, titled “Enrichment and Context.” It ties nicely with my piece about context and complexity within rules, and according to Ved, it does require a Software Engineering Hat. Ved lists out five critical pieces of context to help increase the efficacy of rules:

Identity Context: who is this (human) or what is this (service-account).

Threat Intelligence: what IP addresses, domains, or general knowledge around indicators of compromise do we have to help make decisions on this activity?

Resource and asset metadata: What critical asset inventories, compliance tags or posture related information exists to help identify the riskiness of this asset being attacked?

Behavioral baselines: is this normal behavior for this type of activity? Think Administrator activity at 2am on Saturday.

Temporal context: Attacks aren’t point-in-time, they are over a period-of-time. Can you enrich this alert with other context of events before it occurred?
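
To make those five context types a bit more concrete, here is a minimal Python sketch of an enrichment step. It is not taken from Ved’s post: the `Alert` shape, the lookup tables, and the `enrich` function are all hypothetical stand-ins for whatever identity provider, intel feed, asset inventory, and baseline store you actually have.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Alert:
    principal: str
    source_ip: str
    resource: str
    timestamp: datetime
    context: dict = field(default_factory=dict)


# Hypothetical lookup tables standing in for real identity, intel, and asset systems.
SERVICE_ACCOUNTS = {"ci-deployer"}
BAD_IPS = {"203.0.113.7"}
ASSET_TAGS = {"prod-payments-db": {"critical": True, "compliance": "pci"}}
USUAL_HOURS = {"alice": range(8, 19)}  # hours of day this principal is normally active


def enrich(alert, recent_events, window=timedelta(minutes=30)):
    ctx = alert.context
    # Identity context: human or service account?
    ctx["identity"] = "service-account" if alert.principal in SERVICE_ACCOUNTS else "human"
    # Threat intelligence: is the source IP a known indicator?
    ctx["threat_intel_hit"] = alert.source_ip in BAD_IPS
    # Resource and asset metadata: criticality and compliance tags, if any.
    ctx["asset"] = ASSET_TAGS.get(alert.resource, {})
    # Behavioral baseline: is this outside the principal's usual hours?
    usual = USUAL_HOURS.get(alert.principal)
    ctx["outside_baseline"] = usual is not None and alert.timestamp.hour not in usual
    # Temporal context: same-principal events in the window before the alert.
    ctx["preceding_events"] = [
        e for e in recent_events
        if e["principal"] == alert.principal
        and timedelta(0) <= alert.timestamp - e["timestamp"] <= window
    ]
    return alert
```

Each piece of context lands under its own key, so a rule or a triage analyst can weigh them separately instead of baking all of that logic into the detection itself.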

In the rest of the post, Ved writes a detection, tests it, follows it through deployment, and evaluates how useful the alert is. It looks like this is his first post on his Substack, so I recommend subscribing!

How to defend an exploding AI attack surface when the attackers haven’t shown up (yet) by Joshua Saxe

This is a fantastic commentary on what happens when the security community knows that a new technology is going to bring all kinds of security issues, even though the issues haven’t materialized yet. Saxe’s framing revolves around the growing attack surfaces around AI technologies. It’s hard to parse marketing-speak and LinkedIn ads and messages from startup founders and salespeople claiming that “the bad guys are already using AI at scale to attack you!!11” without much proof. Perhaps they reference a news article about some basic usage of vibecoding malware, or a phishing site that has an HTML comment of “created by Claude Code.”

Saxe has recommendations around what security functions and specific teams can do to help prepare for this, and I will steal his framing around making controls and policies “dialable”. Security should aim to be enablers rather than disablers for our engineering and technology counterparts. So, build controls in security engineering, and implement detection & response processes, but configure them in a way so you can “dial up” the strictness as we see new attacks emerge from real scenarios rather than theoretical ones.
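
One way to picture a “dialable” control is a policy where every control carries a strictness setting you can turn up later. The sketch below is my own illustration in Python, not something from Saxe’s post; the control names, the `Dial` levels, and the `evaluate` function are hypothetical.

```python
from enum import IntEnum


class Dial(IntEnum):
    OBSERVE = 0  # log only
    ALERT = 1    # log and notify the response team
    BLOCK = 2    # deny the action outright


# Hypothetical policy: one dial setting per control, raised as real attacks emerge.
POLICY = {
    "agent_reads_secrets": Dial.ALERT,
    "agent_calls_unapproved_mcp_server": Dial.OBSERVE,
}


def evaluate(control, policy=POLICY):
    # Unknown controls default to the least strict setting.
    dial = policy.get(control, Dial.OBSERVE)
    return {
        "log": True,
        "notify": dial >= Dial.ALERT,
        "allow": dial < Dial.BLOCK,
    }
```

Turning the dial is then a one-line policy change, for example `dict(POLICY, agent_calls_unapproved_mcp_server=Dial.BLOCK)`, rather than a new control that has to be built while an incident is already underway.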

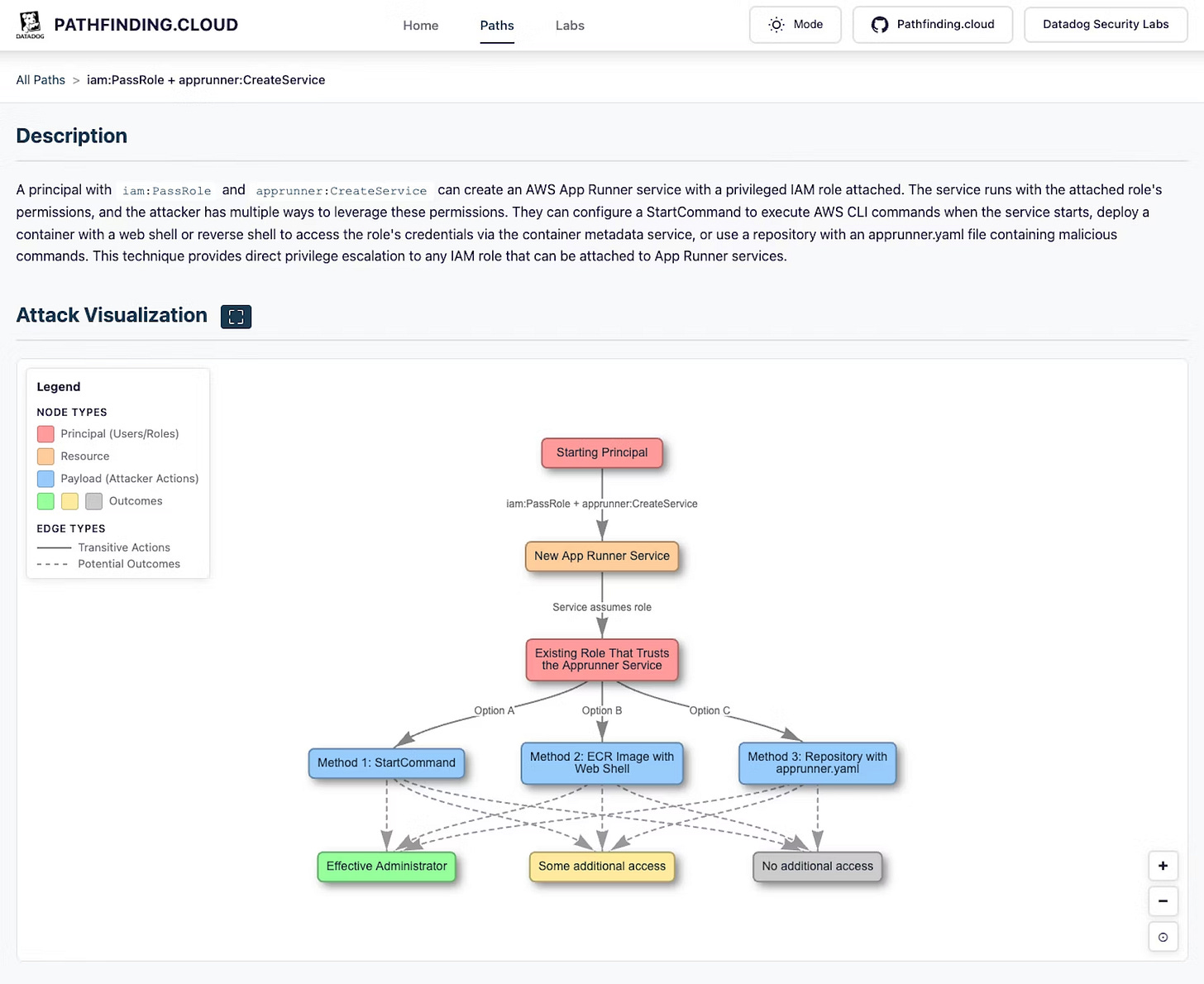

Introducing Pathfinding.cloud by Seth Art

~ Note, Seth is my colleague at Datadog ~

Seth recently released a comprehensive library on privilege escalation scenarios and techniques abusing IAM in AWS environments. There are 65 total paths, and 27 of them are not covered by existing OSS tools to test coverage. The good news is that the website has the description of each attack and how to perform it, as well as a helpful graph visualization so you can see the traversal rather than try to create an image in your head.
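
If the graph framing is new to you, here is a small Python sketch of what “traversing” a privilege escalation path means. The principals and edges are made up for illustration and are not taken from Pathfinding.cloud; only the IAM action names (`iam:CreateAccessKey`, `sts:AssumeRole`) are real AWS actions.

```python
from collections import deque

# Hypothetical graph: each edge means "source can act as target via this permission".
EDGES = {
    "user/dev": [("iam:CreateAccessKey", "user/ci")],
    "user/ci": [("sts:AssumeRole", "role/admin")],
    "user/intern": [],
}


def find_path(start, target, edges=EDGES):
    """Breadth-first search for the shortest chain of hops from start to target."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == target:
            return path
        for action, nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(node, action, nxt)]))
    return None  # no escalation path exists in this graph
```

Calling `find_path("user/dev", "role/admin")` returns the two hops in order, which is the same kind of chain the site’s visualization draws for you.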

📔 Field Manual

I wrote a Field Manual issue on Atomic Detection Rules over break! Please go check it out!

What are Atomic Detection Rules?

In the last post, we discussed the tradeoffs in designing effective rules. Detection efficacy captures the needs of the consumer of your detection rules, because the persona can be more concerned with missing an alert (false negative) or having too many alerts that don’t matter (false positives).
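
A quick way to put numbers on that tradeoff is precision (how many alerts mattered) and recall (how many real events you caught). This is a generic Python sketch with a hypothetical `efficacy` helper, not code from the Field Manual post.

```python
def efficacy(true_pos, false_pos, false_neg):
    """Return (precision, recall) for a rule, guarding against empty denominators."""
    fired = true_pos + false_pos    # every alert the rule raised
    actual = true_pos + false_neg   # every real malicious event
    precision = true_pos / fired if fired else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall
```

A persona that fears false negatives will push for recall, while one drowning in noise will push for precision.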

☣️ Threat Landscape

The Mac Malware of 2025 👾 by Patrick Wardle

This blog is a comprehensive look back at Mac malware incidents and research throughout 2025. Maybe I am showing my age, but if you had told me 10 years ago that macOS’s popularity was going to explode among cybercriminal groups, leading to large-scale compromises, I would have laughed at you. Wardle lists out the top malware families, some associated incidents and blogs dissecting the malware, and walks through analysis of the malware using an open-source toolbox.

Researcher Wipes White Supremacist Dating Sites, Leaks Data on okstupid.lol by Waqas Ahmed

lmao

🌊 Trending Vulnerabilities

MongoDB Server Security Update, December 2025

I’m a bit late on this one due to holidays and time off, but MongoDB recently disclosed a critical vulnerability dubbed “MongoBleed” under CVE-2025-14847. It allows an unauthenticated attacker to connect to a MongoDB instance and leak memory contents, which potentially contain sensitive information around data inside Mongo, authentication data and cryptographic data.

I’m impressed with the transparency and diligence in the post. MongoDB found the vulnerability internally, validated it, built a patch, notified customers and rolled out a post. A researcher at Elastic published a PoC two days later (on Christmas, no less) that I’ll link below.

Ni8mare - Unauthenticated Remote Code Execution in n8n (CVE-2026-21858) by Dor Attias

n8n is an open-source workflow framework to build Agent-to-Agent systems. They recently disclosed two vulnerabilities, CVE-2026-21858 and CVE-2026-21877, a 9.9 and 10.0, respectively. n8n itself has skyrocketed in popularity primarily due to its ease of use for interfacing with Agentic workflows and platforms. The 0.1 difference comes down to 21858 being an arbitrary file read, which could allow reading secrets from a target system, versus full remote code execution for 21877.

I really enjoyed the technical detail of this post by Attias, focused on the arbitrary file read vulnerability. When you think of arbitrary file reads in a modern application stack like n8n, you can pull a lot more credentials that give you access besides dumping password files. Attias created a clever scenario on reading in arbitrary sessions and loading it into n8n’s knowledge base, allowing the extraction of the key from the chat interface itself.

🔗 Open Source

heilancoos/k8s-custom-detections

Kubernetes lab environment and corresponding detection rules from Obeng’s gem above.

appsecco/vulnerable-mcp-servers-lab

Hands-on lab for testing security vulnerability knowledge against MCP servers. There are nine scenarios, and each one looks pretty reasonable in its real-world applicability. You’ll need Claude and Python to run each one, and luckily with MCP, you can specify the singular Python file within the Claude config and get everything you need to get started.

Tailsnitch is a posture management tool for Tailscale configurations. You give it a Tailscale API key and it’ll connect to your tenant’s API and compare its configuration to secure baselines.

Original PoC of CVE-2025-14847, a.k.a MongoBleed, dropped right on Christmas :|. Has a docker-compose file so you can safely test it yourself.

This is a nice example of what I think will be a normal detection and response engineer’s setup in the next few years. Your org will operate a repository with agent setups for technology like Claude Code, and it’ll contain a standardized list of MCP servers to use and agent instructions. Making it extendable to tweak or add agents and MCP servers should be as easy as another prompt and some glue work for a custom MCP.

Solid newsletter. That k8s blog by Obeng is exactly what security folks need - the attack surface breakdown into pod weaknesses, IAM, cluster config, and control plane entry points is money. We've been wrestling with the control plane vs data plane detection separation for months. The "dialable controls" concept from Saxe is smart too, fits well with how we're approaching AI risk without blocking everything on day 1.