DEW #133 - Redefining Security Visibility, TTP-First Hunting & F5 breach

we should define a standard to unite all standards

Welcome to Issue #133 of Detection Engineering Weekly!

✍️ Musings from the life of Zack in the last week:

I did a family road trip for the long weekend to my hometown. I’m happy to report to other parents that I’ve had my first experience of a kid throwing up in the backseat. Do I earn a badge of honor here?

Datadog Detect is BACK for round 2, so please sign up and see some excellent Detection Engineering talks! It’s free, fully remote, and there will be activities (yay!) and labs for conference-goers.

💎 Detection Engineering Gem 💎

What Does “Visibility” Actually Mean When it comes to Cybersecurity? by David Burkett

The most frequent question I get from my boss at Datadog is “Are we covered?” It’s a simple question, but it’s extremely hard to answer. What does covered mean? Are we covered now, before, or in the future? Do you mean MITRE rule mappings, operational maturity, incident readiness, or threat intelligence awareness? It turns out that agreeing on a singular definition of anything in security is difficult!

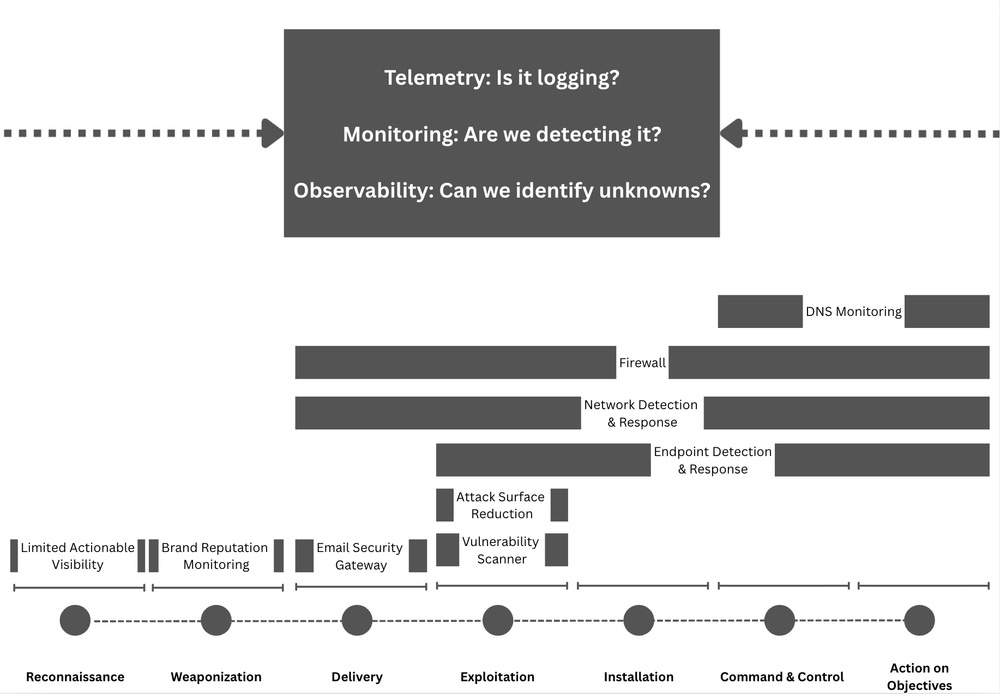

It was nice to read Burkett’s post here discussing the varying definitions of visibility. Like most industry standards, several companies and organizations have attempted to define visibility, but no single standard or definition has emerged as the true winner. David adapted Splunk’s blog on observability into the security operations space, and I think it works beautifully:

Visibility is the holistic state wherein a system generates telemetry, is subject to robust monitoring for known conditions, and possesses observability, enabling deep, exploratory analysis to diagnose novel problems. Full visibility is achieved only when these three elements are cohesively integrated, allowing operators to move fluidly from detecting a known issue (monitoring) to exploring its unknown root cause (observability), all supported by a common foundation of high-quality data (telemetry).

He then fits this mental model into a three-tiered definition based on who is asking about visibility. The tiers appear to be inspired by the levels of threat intelligence: strategic, operational, and tactical. This approach works well because visibility means something different depending on the customer you are talking to.

At the strategic tier, senior leaders typically care about visibility across the whole business, not the individual stages of the ATT&CK chain. At the operational tier, the focus shifts to attack surfaces such as endpoint, network, and SaaS. Each attack surface can have many telemetry sources; think EDR, or a Secure Web Gateway for domain visibility. Lastly, he rounds out the model with tactical visibility, which examines specific telemetry sources, like EDR, and walks through MITRE ATT&CK to assess visibility at each stage.
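To make the three tiers a little more concrete, here is a minimal Python sketch of how one telemetry inventory could be rolled up differently for each audience. Everything in it is a hypothetical illustration, not Burkett’s method: the tactic subset, the source-to-tactic mappings, and the single coverage ratio used for the strategic view are assumptions. It also only models the telemetry leg of the definition, not monitoring or observability.

```python
from dataclasses import dataclass, field

# Illustrative subset of MITRE ATT&CK Enterprise tactics, not the full matrix.
TACTICS = frozenset({
    "Initial Access", "Execution", "Persistence", "Credential Access",
    "Lateral Movement", "Command and Control", "Exfiltration",
})


@dataclass
class TelemetrySource:
    name: str                                   # tactical tier: one concrete source, e.g. "EDR"
    surface: str                                # operational tier: endpoint, network, SaaS, ...
    tactics: set = field(default_factory=set)   # tactics this source is ASSUMED to observe


def tactical_view(source):
    """Tactical tier: what a single source covers and where its gaps are."""
    covered = source.tactics & TACTICS
    return {"covered": sorted(covered), "gaps": sorted(TACTICS - covered)}


def operational_view(sources):
    """Operational tier: combined tactic coverage per attack surface."""
    by_surface = {}
    for s in sources:
        by_surface.setdefault(s.surface, set()).update(s.tactics & TACTICS)
    return {surface: sorted(t) for surface, t in by_surface.items()}


def strategic_view(sources):
    """Strategic tier: one number for leadership, the share of tactics seen by any source."""
    seen = set().union(*(s.tactics for s in sources)) & TACTICS
    return len(seen) / len(TACTICS)


# Hypothetical inventory; real mappings would come from your own data source analysis.
SOURCES = [
    TelemetrySource("EDR", "endpoint", {"Execution", "Persistence", "Credential Access"}),
    TelemetrySource("Secure Web Gateway", "network", {"Command and Control", "Exfiltration"}),
]
```

With this sample inventory, strategic_view(SOURCES) returns 5/7 (about 0.71), which is the kind of single number a leader might ask for. It also hides exactly where the gaps are, which is why the operational and tactical views exist.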

All models are wrong; some are useful. This may not be “perfect” in terms of defining visibility, but in my opinion, it’s a good mental model. It pulls inspiration from SRE concepts like observability and applies them to the health of a security program, framed by the customer who is asking.

🔬 State of the Art

Hunting Beyond Indicators by Sam Hanson

Threat Hunting is the art of managing false positives. The basic idea is that hunting flips the premise of triage. In hunting, you cast a wide net with your queries to find needles in a haystack; in detection engineering, you want as little hay as possible. Maybe I can keep this imagery going and talk about separating wheat from chaff?

Alright, alright, enough farming analogies. I included this post because it shows the tradeoffs of hunting when starting with threat intelligence indicators versus adversary TTPs. When you plan and execute a threat hunt, the expectation is to find many results and have time to sift through them, using down-selection techniques to determine if there is an intrusion. The order of down-selection matters, though. According to Hanson, you want to start with tactics and techniques first (which I agree with), and then filter by other components like threat intelligence indicators.

If you start with threat intelligence indicators, you introduce a selection bias, because indicators are brittle selectors and, by nature, won’t catch unknown IOCs. Focus on TTPs first, down-select to find unknown IOCs, and feel free to use known IOCs afterward for additional enrichment.
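The ordering Hanson argues for can be sketched as a small down-selection pipeline. This is a minimal sketch under stated assumptions, not his implementation: the Event fields, the encoded-PowerShell heuristic, the benign-host allowlist, and the sample domains are all hypothetical, and a real hunt would run as queries in your SIEM rather than over Python lists.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    host: str
    process: str
    command_line: str
    dest_domain: Optional[str] = None


def matches_encoded_powershell(event):
    """Broad behavioral selector for PowerShell (ATT&CK T1059.001) run with an encoded command.

    Hypothetical heuristic for illustration; real coverage would need more variants.
    """
    cmd = event.command_line.lower()
    # " -enc" also matches " -encodedcommand" because it is a prefix of it.
    return event.process.lower() in ("powershell.exe", "pwsh.exe") and " -enc" in cmd


def hunt(events, known_benign_hosts, ioc_domains):
    # Step 1: cast the wide net on behavior (the TTP), not on indicators.
    hits = [e for e in events if matches_encoded_powershell(e)]
    # Step 2: down-select by removing known-good context to shrink the haystack.
    hits = [e for e in hits if e.host not in known_benign_hosts]
    # Step 3: use IOCs only as enrichment; unmatched hits may lead to unknown infrastructure.
    return [(e, e.dest_domain in ioc_domains) for e in hits]


# Hypothetical sample telemetry.
SAMPLE_EVENTS = [
    Event("ws01", "powershell.exe", "powershell.exe -NoP -Enc SQBFAFgA", "known-bad.example"),
    Event("ws02", "pwsh.exe", "pwsh -EncodedCommand ZQBjAGgAbwA=", "unknown.example"),
    Event("build01", "powershell.exe", "powershell.exe -enc AAAA"),
    Event("ws03", "chrome.exe", "chrome.exe --new-window", "known-bad.example"),
]
```

Running hunt(SAMPLE_EVENTS, {"build01"}, {"known-bad.example"}) keeps ws01 (flagged as an IOC match) and ws02 (no IOC match). An IOC-first hunt on known-bad.example would instead have started from ws01 and ws03 and never surfaced ws02, which is the selection bias described above.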

Intuition-Driven Offensive Security by Andy Grant

When I first started working in security, becoming a red-teamer or a pentester felt like a class of jobs reserved only for the most technical experts in the field. There’s something beautiful in deconstructing the assumptions of systems, building tools to probe those assumptions for weaknesses, and then exploiting those weaknesses to achieve an objective. At the time, I was only aware of jobs at consulting firms that had intense interview processes, so I never felt I could make it.

As I progressed in my career, I started to meet and work with red teams. They typically fit into a mold where they run an engagement and produce a report. As a blue teamer, it was hard for me to see the value of a report when the relationship with that team ended after delivery. I suspect many companies felt the same way after engaging a pentesting firm. The hard work started with the findings, not the engagement.

Grant takes on this problem and proposes a better working model for red teamers that he dubs intuition-driven offensive security. The three principles he lays out focus on understanding the risk behind an implementation rather than hunting for and reporting bugs. IMHO, this is a much sounder approach because it forces red teamers to think like security engineers rather than pentesters. If the outcome is risk reduction, the incentive structure rewards knowledge of the engineering behind a service. That knowledge builds empathy for the problems the service solves and serves as a forcing function for closing the security gaps the team finds during an engagement.

Practical Resources for Detection Engineers. || Starters 🕵🏻 and Pro || by Goodness Adediran

I love reading “Introduction to Detection Engineering” posts because you get a good diversity of thought about how to break into the field. Some folks focus on the expertise required, but leave it vague enough that it’s easy to retrofit into your own life situation. Others look at more tactical details, like technologies to learn, such as SIEMs or languages. Adediran took an approach that I first saw from Katie Nickels in her series on self-studying for Threat Intelligence.

This post provides a self-study roadmap for readers who want to break into detection engineering. Adediran starts with foundational blogs on the subject, moves on to studying MITRE ATT&CK to better understand how it maps to rules, and then builds up to specialist subjects across several mediums, like blogs, videos, books, open-source repositories, and podcast episodes.

Purple Team Maturity Model: From Chaos to Controlled Chaos by Silas Potter

I’m a big fan of maturity models, because they set a clear direction and roadmap for a program or function, but leave enough wiggle room to add, remove, or change milestones to fit your business context. In my professional experience, they’ve helped me set a tone for reporting maturity to leadership and provide an excellent north star for folks reporting into my org. So, when a new “maturity” model pops up in my feed, I almost always read it and steal ideas to use for my own purposes :).

Purple Teaming is an excellent way to improve the operational robustness of your detection program, so I was pleased to see Potter’s approach here to quantify how to achieve a well-oiled purple teaming function. Notice that this isn’t about a specific team doing purple teaming; instead, it’s a program across multiple teams, the obvious one being the joining of red and blue teams. I like this approach because it helps unite two teams who may not be talking to each other and showcases the value of both functions by driving detection outcomes rather than churning out rules or red team reports.

☣️ Threat Landscape

K000154696: F5 Security Incident by F5

Network and security appliance vendor F5 posted a harrowing security incident update involving a “highly sophisticated nation-state threat actor.” This threat actor had long-term access to F5’s product development environment, and according to CVEdetails, F5 has close to 300 products. With the ability to download source code and knowledge bases, a well-resourced actor could use that access for product research, for reverse engineering to build competing products in its home country, or to make vulnerability research easier.

Securing the Future: Changes to Internet Explorer Mode in Microsoft Edge by Gareth Evans

The Microsoft Edge security team introduced a new secure-by-default configuration for Internet Explorer Mode in Microsoft Edge. This is the first time I’ve heard of Internet Explorer Mode, and I chuckled reading this because I suspected it had to do with active exploitation of legacy Internet Explorer code shipped inside Edge, and voila!

The team appears to have plugged some of the exploit vectors, and they also switched off certain UI elements by default to limit the blast radius of threat actors abusing the backward-compatible technology. Basically, if you have to use this mode, it now ships with the minimal functionality needed to access the resources you need, and an administrator must turn on any additional functionality.

Rubygems.org AWS Root Access Event – September 2025 by Shan Cureton / Ruby Central

Long-lived access key security incidents strike again! Cureton, the Executive Director of Ruby Central, published a detailed security incident report after a blog post disclosed to the open source community that a former maintainer still had production access to the RubyGems.org AWS account. The blog post included several screenshots and a CLI command that purported to show the former maintainer had retained access via an AWS access key.

In response to the post, the Ruby Central team performed a series of containment actions to remove this access, and did not accuse the maintainer of anything malicious. But the post and this incident report show how hard it is to maintain a governance structure for an open-source non-profit that relies on contractors and volunteers to maintain the project.

Singularity: Deep Dive into a Modern Stealth Linux Kernel Rootkit by MatheuZSec

Two weeks in a row, I’ve read some great pieces on modern Linux kernel rootkits, so it was nice to see this one examine a rootkit that leverages ftrace-style hooking for persistence and evasion. MatheusZ breaks down the rootkit’s source code, including its hooking techniques, and highlights some differentiators between this rootkit and others in the space. The rootkit author’s attention to detail in concealing directories, for example, shows how much of a cat-and-mouse game this is.

When you hide a directory, you may not be able to see its name or contents via list commands, but you may still leak metadata revealing that a hidden directory exists. On most Linux filesystems, a directory’s link count is 2 plus its number of subdirectories, because each subdirectory’s “..” entry links back to the parent. For example, if a directory contains three subdirectories and you hide one, ls will show only two. However, the parent directory’s link count (visible via stat or ls -ld) would still reflect three subdirectories unless adjusted.

This discrepancy between the visible subdirectory count and the link count is a forensic artifact that can reveal hidden directories. This rootkit accounts for it by hooking a function so that the link count reported for backdoored directories is adjusted accordingly.
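A userland check for the link-count artifact might look like the Python sketch below. It is a hypothetical helper, not something from the post, and it assumes a Linux filesystem that follows the classic convention (ext4, XFS, tmpfs); btrfs, for instance, always reports a link count of 1 for directories. A rootkit like Singularity, which adjusts the reported link count, would defeat exactly this check, which is the cat-and-mouse point above.

```python
import os


def visible_subdir_count(path):
    """Count subdirectories that a normal directory listing can see.

    os.scandir reads entries through the same kernel interface ls relies on,
    so a rootkit that filters directory listings hides entries here too.
    """
    with os.scandir(path) as entries:
        return sum(1 for entry in entries if entry.is_dir(follow_symlinks=False))


def link_count_check(path):
    """Compare a directory's reported link count with what its visible listing implies.

    Assumes nlink = 2 + number of subdirectories ("." and the parent's entry,
    plus one ".." per child directory). Filesystems that do not follow this
    convention will produce mismatches, so treat a mismatch as a lead to
    investigate, not as proof of a rootkit.
    Returns (expected, reported, mismatch).
    """
    expected = 2 + visible_subdir_count(path)
    reported = os.lstat(path).st_nlink  # lstat so a symlinked path is not followed
    return expected, reported, expected != reported
```

On a clean ext4 directory with three subdirectories, expected and reported should both be 5. If a rootkit hid one subdirectory from listings without fixing the link count, expected would drop to 4 while reported stayed at 5.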

🔗 Open Source

This codebase serves the complete application running on rulezet.org. It looks like an open source version of detections.ai that you can host yourself. It pulls in open-source rulesets, and you can use it to manage your own rules via a community-style setup.

ESET’s long-running repository of malware IOCs is based on blog posts and investigations they’ve done over the years. It’s cool to see commits from close to a decade ago. Each subdirectory has a README describing the malware family and contains the associated IOCs.

For the last two or so weeks, KittenBusters has been publishing commits to this repository that detail the operations behind Iran’s IRGC-IO Counterintelligence division. It is split up into “episodes”, and so far, three episodes have been published. It contains sensitive documents and malware code, and it looks like they will start doxxing certain officials in upcoming episodes.

Logging Made Easy (LME) is CISA’s initiative to leverage open source tools to enable a security operations function on a budget. It uses Wazuh and Elasticsearch, and it targets smaller shops with a small security team or none at all. It’s probably very helpful for the state and local governments that CISA works with during incidents.