DEW #132 - Linux Rootkits Evolution, LLM Rule Evals, Oracle 0-day exploitation

༼ つ ಥ_ಥ ༽つ noo all my rootkits are obsolete ლ(ಠ益ಠლ)

Welcome to Issue #132 of Detection Engineering Weekly!

✍️ Musings from the life of Zack in the last week

I spent the weekend hiking in the White Mountains in New Hampshire with my family. Turns out hiking is much harder when you have to carry kids who are strapped in a backpack.

I got excited for a new season of The Amazing Race, and all of the competitors are from a separate reality show?? It’s not good.

I’m staying away from all discussion around Taylor Swift’s new album.

This week’s sponsor: Material Security

No More Babysitting the Security of Your Google Workspace

While your employees communicate via email and access sensitive files, Material quietly contains what’s lying in wait—phishing attacks in Gmail, exposed Drive files, and suspicious account activity. Agentless and API-first, it stops attacks and triages user reports with AI while running safe, automatic fixes so you don’t have to hover. Search everything in seconds, stream alerts to your SIEM, and audit with detailed access logs.

💎 Detection Engineering Gem 💎

FlipSwitch: a Novel Syscall Hooking Technique by Remco Sprooten and Ruben Groenewoud

I first cut my teeth on writing malware when I was the red team captain at my alma mater’s yearly cybersecurity competition. I took a special interest in writing malware for Linux for several reasons. It required a special combination of operating systems knowledge and awareness of the nuanced differences between kernel versions and Linux distros. It also felt harder than Windows in peculiar ways. For example, Windows is extremely good at backwards compatibility, so a piece of malware that interacts with the Kernel in all kinds of ways tends to behave consistently between versions. In Linux, by contrast, a single Kernel version update can break backwards compatibility for legitimate and malicious software alike.

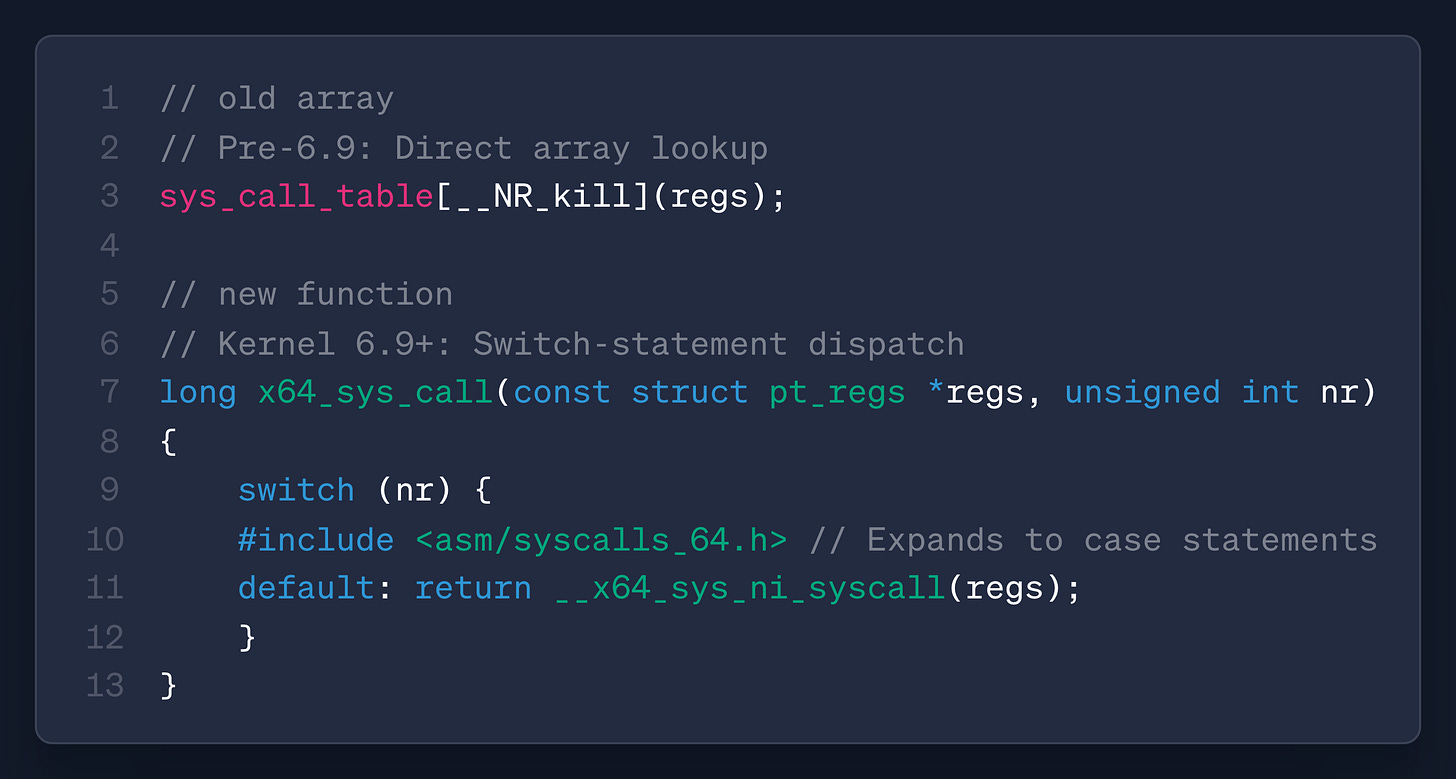

That’s what brings us to FlipSwitch. Elastic Security Researchers Sprooten and Groenewoud did a deep dive on the latest 6.9 version of the Linux Kernel and inspected how changes to an array that stores syscall addresses render a classic Kernel rootkit technique useless. The method relies on hooking addresses in the sys_call_table array to point to attacker-controlled code before trampolining back to the original syscall.

Line 10 is the change that killed rootkits like Diamorphine. This is where FlipSwitch comes in.

The Elastic team did a fantastic breakdown in their blog, so I’ll give my synopsis. The technique involves searching the running kernel’s memory for the call instructions that dispatch to the syscalls FlipSwitch wants to hook. Once the malicious Kernel module is loaded, it can leverage its privilege to scan the new x64_sys_call function for the 0xe8 call opcode, resolve the offset that follows each one until it finds the call to the specific function you want to hook, and then patch that offset.
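To make the scan-and-patch idea concrete, here is a minimal userspace sketch in Python. It is not FlipSwitch's code (the real thing is a C kernel module that also has to deal with write protection); it only models the arithmetic. On x86-64, 0xe8 is a near call followed by a signed 32-bit displacement that is relative to the address of the next instruction. The function names, the base address, and the toy byte buffer are all hypothetical.

```python
import struct

CALL_OPCODE = 0xE8  # x86-64 near call, followed by a signed 32-bit displacement
CALL_LEN = 5        # 1 opcode byte + 4 displacement bytes
MASK64 = 0xFFFFFFFFFFFFFFFF


def find_call_sites(code, base, target):
    """Return offsets in `code` where a `call rel32` resolves to `target`.

    `base` is the (hypothetical) address at which `code` is mapped.
    This is a naive byte scan, not a disassembler, so a 0xe8 byte inside
    another instruction could in principle produce a false match.
    """
    sites = []
    for off in range(len(code) - CALL_LEN + 1):
        if code[off] != CALL_OPCODE:
            continue
        (rel32,) = struct.unpack_from("<i", code, off + 1)
        # The displacement is relative to the instruction after the call.
        dest = (base + off + CALL_LEN + rel32) & MASK64
        if dest == target:
            sites.append(off)
    return sites


def patch_call(code, base, off, new_target):
    """Rewrite the displacement of the call at `off` so it lands on `new_target`."""
    rel32 = new_target - (base + off + CALL_LEN)
    struct.pack_into("<i", code, off + 1, rel32)  # raises if out of rel32 range


# Toy "dispatcher": two NOPs, one call to the original handler, then RET.
base = 0xFFFFFFFF81000000       # made-up kernel-style address
original = base + 0x1000        # stand-in for the real syscall handler
hook = base + 0x2000            # stand-in for attacker-controlled code
code = bytearray(
    b"\x90\x90\xe8" + struct.pack("<i", original - (base + 2 + CALL_LEN)) + b"\xc3"
)

print(find_call_sites(code, base, original))  # [2]
patch_call(code, base, 2, hook)
print(find_call_sites(code, base, hook))      # [2]
```

The opcode alone is not what identifies the hook point; it is the opcode plus a displacement that resolves to the one function you care about. That is why the scan recomputes the destination for every candidate rather than just counting 0xe8 bytes.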

It’s pretty elegant, and it shows how a singular protection can kill one class of techniques but open up another class to exploit.

🔬 State of the Art

Bridging the Gap: How I used LLM Agents to Translate Threat Intelligence into Sigma Detections by Giulia Consonni

I’m glad to see more research and homelab-style blogs on how to build detection engineering agentic systems. It demystifies some of the hype surrounding products in this space, and just like Splunk did with SIEM by creating a community edition, it makes it easier for people to enter our field. I immediately clicked on this post because the title really excited me, and the post didn’t disappoint!

Consonni’s project here involves building out an LLM Agent system that translates threat intelligence into detection rules. They leveraged http://crewai.com/ (which I had never heard of), a platform that helps host AI Agents, provides an SDK for writing those agents, and makes it seem easy to focus on building the system rather than worrying about architecture and scale. Consonni started with a prompt that included the whole workflow of “read report → extract TTPs → create rules,” and it did a terrible job due to the broadness of the request. They refined the process with a multi-agent setup, some more specific prompting, and switching foundational models; the resulting rules were impressive.

More than “plausible nonsense”: A rigorous eval for ADÉ, our security coding agent by Bobby Filar and Dr. Anna Bertiger

This post is an EXCELLENT read after the LLM detection rule creator post by Consonni listed above.

Determining the performance of a machine learning model is as old as the field of statistics itself. The basic premise behind performance measurement is building a predictive system, testing it against real-world data, and measuring its efficacy. Sounds familiar, like detection rules, right?

Naturally, LLMs should have the same type of evaluation criteria for implementers to trust and verify performance. I hadn’t seen a comprehensive evaluation framework for detection rules until I came across this post by Filar and Dr. Bertiger. The Sublime team built a detection evaluation framework for their LLM-backed detection engineer, dubbed ADÉ. The idea here is that the team tried to encode success metrics for new detection rules written in the Sublime DSL. These success metrics should be familiar to long-time readers of this newsletter and to those who have read my Field Manual posts.

They split evaluations into three steps: precision, robustness, and cost to deploy and run. The lovely thing about these three evaluations is that they really capture how detection engineers think about testing rules before they deploy them.

Precision measures accuracy and net-new coverage, which, according to Filar and Dr. Bertiger, is the marginal value a rule adds when running alongside existing detections against known campaigns.

The robustness step dissects the rules’ abstract syntax tree to identify and penalize lower-value detection mechanisms, such as IP matching. Think of this as penalizing the lower parts of the Pyramid of Pain.

The cost step looks at how many attempts the model took to generate a production-quality rule, the time to deployment of that rule, and the runtime cost of the rule in production.
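To get a feel for the first two evaluations, here is a rough Python sketch. It is my own illustration, not Sublime's implementation: the function names, the message IDs, and especially the Pyramid of Pain weights are assumptions made up for the example.

```python
# Hypothetical weights: lower levels of the Pyramid of Pain score lower.
PYRAMID_WEIGHTS = {
    "hash": 0.1,
    "ip": 0.2,
    "domain": 0.3,
    "artifact": 0.6,
    "tool": 0.8,
    "ttp": 1.0,
}


def precision(true_positives, false_positives):
    """Share of everything the rule flagged that was actually malicious."""
    flagged = true_positives + false_positives
    return true_positives / flagged if flagged else 0.0


def net_new_coverage(new_rule_hits, existing_hits, campaign):
    """Share of a known campaign caught by the new rule and by nothing else.

    All three arguments are sets of message IDs.
    """
    if not campaign:
        return 0.0
    marginal = (new_rule_hits & campaign) - existing_hits
    return len(marginal) / len(campaign)


def robustness(indicator_types):
    """Average weight of the indicator types a rule relies on.

    In a real system these types would come from walking the rule's AST;
    here they are passed in directly as a list of strings.
    """
    if not indicator_types:
        return 0.0
    return sum(PYRAMID_WEIGHTS[t] for t in indicator_types) / len(indicator_types)


campaign = {"m1", "m2", "m3", "m4"}
print(precision(8, 2))                                       # 0.8
print(net_new_coverage({"m1", "m2", "m3"}, {"m1"}, campaign))  # 0.5
print(robustness(["ip", "ttp"]) > robustness(["ip", "hash"]))  # True
```

The net-new number is the interesting one: a rule can have perfect precision and still add nothing if your existing detections already catch the same messages.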

They list evaluations of several rules towards the end of the post, and I’m impressed by their performance. They compare the results to human-written rules, and ADÉ appears to have performed well against humans in some detection types but underperformed in others. However, the idea here (in my opinion) isn’t to replace humans, but to augment us, and I think this framework helps achieve that.

How to Create a Hunting Hypothesis by Deniz Topaloglu

The best way to threat hunt is to challenge assumptions. In my experience, these assumptions typically fall into several buckets, including:

Rules that fail to capture threat activity

Telemetry sources contain threat activity that we haven’t accounted for

Threat intelligence informs us of something we should be aware of in the pyramid of pain

Forming a hypothesis, then, takes assumptions and tries to challenge them to uncover gaps in rules or telemetry, and in the worst case, find an incident that you’ve missed. It’s a formulaic process, but this post shows how powerful threat hunting can be when you lay out your assumptions and what you know so you can deep dive into a hypothesis.

Topaloglu starts with a piece of threat intelligence, maps out potential TTPs in MITRE ATT&CK, shows an example network diagram, and then creates a hunting plan. They lay out several scenarios and their corresponding SIEM search queries in several languages. They then continue on to post-hunt activities for aspiring hunters to follow up on, because threat hunts should provide more value than just confirming whether activity is present in a network.

The Great SIEM Bake-Off: Is Your SOC About to Get Burned? by Matt Snyder

Choosing a SIEM is like selecting a business partner. You need to ensure that you understand each other’s strengths and weaknesses and create an operating model to compensate for them. It’s great to see a blog exploring the topic of procuring a SIEM and the pain associated with switching from one deployment to another. This piece is beneficial for aspiring analysts or detection and response engineers who’ve never been through this type of exercise, because it truly feels like a mountain to climb that can put your company and productivity at risk.

Snyder points out five key areas of concern where switching costs can kill productivity: ingest, search, enrichment, rules and administration. SIEM vendors should help you understand each component during a demo. Even then, many demos showcase the best parts of the technology, so a bake-off between SIEM vendors, via proofs of concept, and Snyder’s linked Maturity Tracker, can alleviate much of the uncertainty behind these exercises.

☣️ Threat Landscape

CrowdStrike Identifies Campaign Targeting Oracle E-Business Suite via Zero-Day Vulnerability (now tracked as CVE-2025-61882) by CrowdStrike

The large vulnerability news du jour is a remote code execution in Oracle E-Business Suite tracked under CVE-2025-61882. The CrowdStrike research team made this post detailing their observations as threat actors and researchers alike conduct mass exploitation to take advantage of the vulnerability.

The exploit chain involves a series of crafted payloads to two JSP endpoints, where an unauthenticated attacker uploads a malicious XSLT file. This, in turn, creates an outbound Java request to an attacker-controlled command and control server to load a webshell on victim machines.

The remarkable aspect here is how the exploit was disseminated. Oracle made a public post with IOCs, a PoC was posted on October 3, and according to CrowdStrike, threat actors under the ShinyHunters moniker posted an exploit file to their main Telegram channel.

Red Hat Consulting breach puts over 5000 high profile enterprise customers at risk — in detail by Kevin Beaumont

Red Hat Consulting, the technology services arm of Red Hat, allegedly suffered a data breach from a threat actor group dubbed “Crimson Collective.” It’s unclear how this breach happened, but the group began posting screenshots of the pilfered victim data. Beaumont uncovered some interesting details about this threat actor group, thanks to the assistance of Brian Krebs. They seem to overlap with Scattered Spider/ShinyHunters, and one of the Telegram posts made by the group had a “Miku” signature at the end. Miku is an alleged member of Scattered Spider and was arrested last year, but is on house arrest.

The victim details were posted on the Scattered LAPSUS$ Hunters victim leak site, and they appear to contain a trove of customer data from Red Hat Consulting, including some sensitive information.

DPRK IT Workers: Inside North Korea’s Crypto Laundering Network by Chainalysis

My favorite thing about reading Chainalysis blogs is getting a glimpse into how money laundering works at a cryptocurrency scale. Unless you’re a freak of nature and read indictments or court documents with detailed notes on traditional money laundering techniques, it’s rare to see how criminal and nation-state operations do the hard work of funneling money.

So, in this blog, the Chainalysis team studied the tactics, techniques and procedures of DPRK IT Worker laundering. They have a structured approach to taking payment in stablecoins, laundering it to a “consolidation” worker, and eventually offloading the consolidated funds to fiat.

Don’t Sweat the *Fix Techniques by Tyler Bohlmann

When I first read about ClickFix, I didn’t think it would be a successful approach to infection and initial access. The premise was a bit crazy: you funnel victims to a website, socially engineer them to believe there’s a problem with their computer, and convince them to willingly copy and paste a malicious command into their terminal.

Well, I was wrong; this technique works beautifully, and according to Bohlmann, Huntress has observed a 600%+ increase in these styles of attack since their inception last year. In this post, they review the different styles of ClickFix, the attack chains, and the clever ways attackers trick users into running the malicious payloads.

🔗 Open Source

Sprooten’s FlipSwitch PoC repo is referenced in the Gem above. It does more than just demonstrate the technique; you can use this as a rootkit kernel module in the latest versions of the Linux Kernel, and it supports some fun obfuscation techniques to make it harder to find.

Threat intelligence report to Sigma rule generator. This repository is based on the research linked above by Consonni. It looks pretty easy to use: it’s a templated CrewAI application where you add knowledge files like detection rules as examples, and it looks like it uses a SQLite database for RAG components.

matt-snyder-stuff/Security-Maturity-Tracking

Simple yet effective security maturity tracking framework for a security operations program. The repository lists each capability you want to track, such as SIEM, Threat Hunting and Threat Intelligence, and you can create maturity matrices for each one and track progress. These are generally pretty good at presenting up to leadership on program development.

Open-source anti-phishing and investigation application for investigators, analysts and CERT folks. You set it up, tie it to an inbox, have users forward suspicious emails to it, and it’ll pull apart the email, perform threat intel lookups and present a report for further analysis.

A dynamic malware analysis platform where you can build malware processing backends all in Python. It comes with several backends out of the box, including a malware sandbox, an archive extractor, and a malware configuration extractor. It looks pretty easy to write your own, and you can submit it via an API or the dashboard to extend functionality.
