DEW #129 - Malicious browser extensions, npm gets pwned (again) and AI weaponizing CVEs

At least they had 2FA right?? right??????

Welcome to Issue #129 of Detection Engineering Weekly!

I’m in NYC this week, and I underpacked, so I walked over to Hudson Yards to grab some T-shirts. I picked out two from Uniqlo, and when I got to self-checkout, I looked like a confused tourist, since you just “drop” your shirts into a bucket and it automatically finds the shirt and price. It was black magic.

It’s been a busy few weeks at work with all of these supply chain-style attacks, and I’m sure it has been for a lot of you as well. But I am continuously underwhelmed that these elegant package takeovers result in cryptominers and wallet stealers. If anyone wants to turn heel with me and go on a villain arc, the first thing I’d recommend is to stay away from cryptominers.

I’ve begun to make small structural changes to the newsletter issues. I am removing italics, I changed how my From field looks on emails, and titles have a more descriptive sneak peek into the content. Don’t worry, I’m still keeping the snark and xkcd-style commentary throughout the issue, but this has already helped boost my open rates and engagement.

📣📰🌐 Interested in sponsoring the newsletter and placing your ad right here?

I’m happy to see the engagement of folks reaching out to sponsor the newsletter. I have slots filling up for the rest of the year, so if you want to run an ad and get eyeballs and clicks from practitioners, CISOs and everything in between, shoot me an e-mail and let’s chat.


💎 Detection Engineering Gem 💎

Even if many plugins are fine, the bad ones are BAD by John Tuckner

As readers have seen in last week's issue, supply chain security affects the entire open-source ecosystem, which includes numerous registry-style marketplaces. For this post, a friend of the newsletter and security researcher John Tuckner shares a more in-depth look at how browsers manage the supply chain of extensions, and how we have a long way to go before we have complete visibility and detection opportunities on malicious extensions.

Unlike npm or pip, all modern browsers employ a sandbox that includes various security features. Memory protection, file system restriction, and process isolation are just a few of the many features of the sandbox, so it's tough for exploit developers to break out of the sandbox. But, browsers need to be modern, and any modern technology can extend its functionality, so this is where extensions come in.

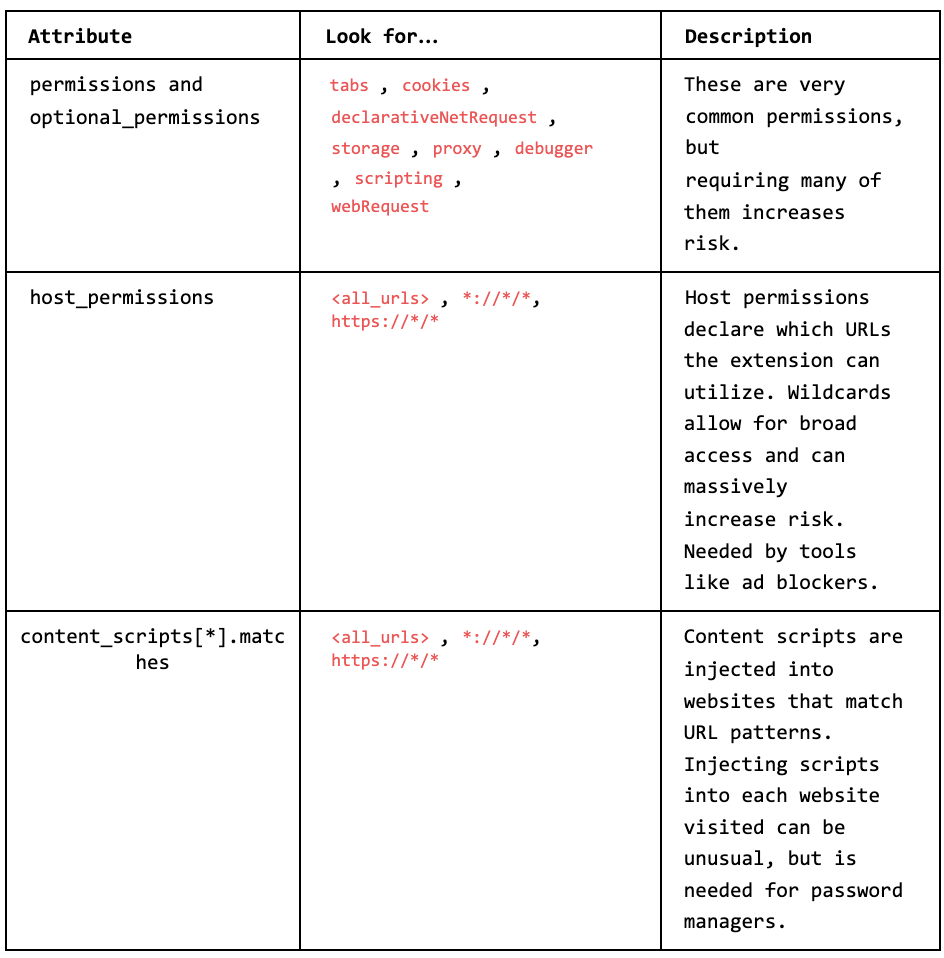

Extensions have marketplaces, and the browsers can either officially own these marketplaces or have them as third-party registries. Anyone can write an extension and publish it, and while some marketplaces have more stringent requirements, according to Tuckner, you can side-load them just like a mobile app. This opens up a significant risk, and if you aren't careful, you can install an overly permissive app that can read and write to your computer in ways you may not want an app to do.

It's great that these guardrails help prevent a full breakout from an extension to the operating system, but they don't stop someone from willingly installing a malicious one. Expecting an end-user to read and understand permission models and assess their maliciousness across any registry, whether it's for a browser, open-source software, or an IDE, is unrealistic.
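To make the permission problem a bit more concrete, here is a minimal sketch of the kind of triage a defender could automate instead of asking end-users to do it. It reads a Chrome-style `manifest.json` and flags broad permissions and host patterns. The `RISKY_PERMISSIONS` list and the `audit_manifest` helper are my own illustrative assumptions, not something from Tuckner's post, and the list is far from exhaustive.

```python
import json

# Assumption: an illustrative, non-exhaustive set of Chrome extension
# permissions that grant broad access to browsing data.
RISKY_PERMISSIONS = {
    "cookies", "webRequest", "tabs", "history",
    "nativeMessaging", "debugger", "scripting", "clipboardRead",
}
# Host patterns that match every site the user visits.
BROAD_HOSTS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def audit_manifest(manifest_text: str) -> dict:
    """Return the risky permissions and broad host patterns in a manifest."""
    manifest = json.loads(manifest_text)
    requested = set()
    # Manifest V2 mixes host patterns into "permissions"; V3 splits them
    # into "host_permissions". Optional permissions can be granted later.
    for key in ("permissions", "optional_permissions", "host_permissions"):
        requested.update(p for p in manifest.get(key, []) if isinstance(p, str))
    # Content scripts declare their own match patterns.
    for script in manifest.get("content_scripts", []):
        requested.update(script.get("matches", []))
    return {
        "risky_permissions": sorted(requested & RISKY_PERMISSIONS),
        "broad_hosts": sorted(requested & BROAD_HOSTS),
    }


if __name__ == "__main__":
    # Hypothetical extension manifest, made up for this example.
    example = json.dumps({
        "manifest_version": 3,
        "name": "Totally Fine Coupon Finder",
        "permissions": ["storage", "cookies", "scripting"],
        "host_permissions": ["<all_urls>"],
    })
    print(audit_manifest(example))
```

A flagged manifest is not proof of malice, since plenty of legitimate extensions ask for these permissions. It is only a way to prioritize which extensions deserve a closer look.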

🔬 State of the Art

Can AI weaponize new CVEs in under 15 minutes? by Efi Weiss and Nahman Khayet

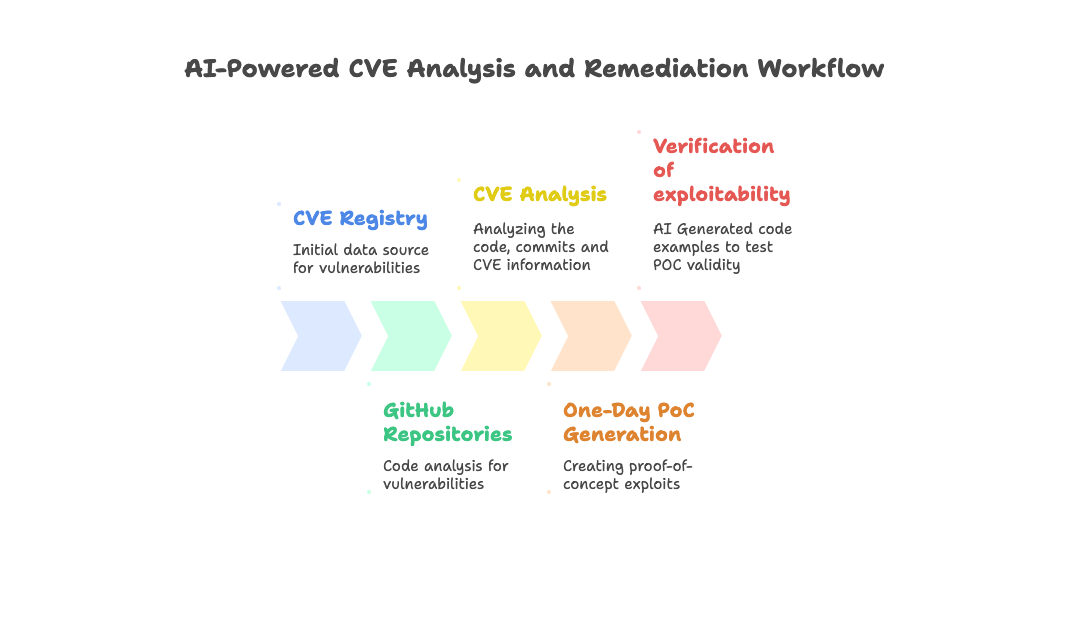

If you've ever wanted to see how to solve a security use case with agentic systems, this is an excellent post by Weiss and Khayet on how to build and deploy one. They started with a pain point that we all suffer from: given a CVE, how fast can a researcher create and publish a proof-of-concept (PoC) exploit, and should we patch if that code makes it to the wild? It's a pain when someone releases a PoC, but I think that it helps create detection opportunities to validate impact. So, whether it comes from a researcher or their LLM agent, I'm happy to take in more data. Here's their workflow:

They focused their research solely on open-source packages. They used a combination of NIST and GitHub Security Advisories (GHSA) data, and this type of structured data, alongside the patch diff, is an excellent source to feed into an agentic system to generate the PoC. They encountered some issues along the way, such as using a single, generalized agent for the full PoC lifecycle instead of multiple specialist agents. The other part I found pretty funny was that while their agent was "refining" the PoC, the LLM focused on making the code work rather than ensuring it was vulnerable.

If I had to suggest more research into this area, I'd love to see folks take the PoC environments from their GitHub and instrument them to create the correct logs to generate detection rules. The time from CVE publication to PoC to detection rule coverage would be lightning fast and help at least some of us sleep better at night.

Automation for Threat Detection Quality Assurance by Blake Hensley

So many people ask, "How many rules do you have?" and never ask, "How are your rules doing?" Jokes aside, detection quality is a topic that is near and dear to my heart, and it's not talked about enough. Hensley is breaking that barrier, and what I love about this post is that he argues that detection quality isn't always about rule formats, linting, and emulating in CI/CD pipelines. It's also about ensuring your rules perform as intended in your live environment through experiments.

Hensley structures this post with several examples of detection quality assurance tests. You have unit tests and purple teaming emulation within the mix, but there are some really unique tests here I've never considered before. For example, Hensley's "results diff backtest" compares the output of a previous rule version's results with those of the new version. All super clever, and I'm adding this to my detection backlog.
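Here is a rough sketch of how I read the "results diff backtest" idea: run the old and new versions of a rule over the same time window, then compare which results each one produced. This is not Hensley's implementation. The `results_diff` helper, the `BacktestDiff` type, and the field names are hypothetical and only there to show the shape of the comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BacktestDiff:
    only_in_old: frozenset  # results the new version no longer produces
    only_in_new: frozenset  # results introduced by the new version
    unchanged: frozenset    # results both versions agree on


def results_diff(old_results, new_results, key_fields):
    """Diff two lists of result rows (dicts), identified by key_fields."""
    def keys(rows):
        return frozenset(tuple(row.get(f) for f in key_fields) for row in rows)

    old_keys, new_keys = keys(old_results), keys(new_results)
    return BacktestDiff(
        only_in_old=old_keys - new_keys,
        only_in_new=new_keys - old_keys,
        unchanged=old_keys & new_keys,
    )


if __name__ == "__main__":
    # Made-up rows standing in for query output over the same time window.
    old = [{"host": "web-1", "user": "alice"}, {"host": "web-2", "user": "bob"}]
    new = [{"host": "web-2", "user": "bob"}, {"host": "db-1", "user": "carol"}]
    diff = results_diff(old, new, key_fields=("host", "user"))
    print("lost:", sorted(diff.only_in_old))
    print("gained:", sorted(diff.only_in_new))
```

Results that appear only in the old version are potential new false negatives, and results that appear only in the new version are either better coverage or fresh noise. Both buckets are worth a human look before the rule change ships.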

The Present and Future of Managed Detection and Response by Migjen Hakaj and Amine Besson

This post offers a thorough overview of the Managed Detection & Response (MDR) market, aiming to address and answer the question, "What's next?" for companies in this space. MDRs evolved from the MSSP space, where most of their value proposition revolved around being "alert forwarders", as Besson and Hakaj put it. The market MDR providers discovered involved taking sprawling detection toolsets, unifying them, providing expert analysis, and then presenting the key information that matters to customers.

You can probably see why I liked this post, starting at the section "…And what is my MDR doing to improve it?" The differentiator, according to Hakaj and Besson, is detection engineering. Scaling detections as more data sources are added to a technology stack becomes the moat. Adding AI on top of this will disrupt the scaling efforts, for better or for worse. This is especially interesting if MDRs hire expert analysts, while their customers hire security generalists or engineers.

So, if you do partner with an MDR provider, press them on detection coverage and adding sources, while proving to you that they can be nimble in both areas.

Building a Detection Lab That Fits in Your Laptop by Joseph Gitonga

This is an excellent home lab tutorial for folks who want to get started with detection engineering and threat hunting. Gitonga approaches this lab targeting individuals who want to break into security operations without incurring expenses on cloud-based lab environments. You may need a lab machine that can be cost-prohibitive, but you can find beefy servers on eBay or build something with parts to meet the minimum lab machine requirement here. By the end of the lab, you'll have a Splunk SIEM, Active Directory environment, and a separate Splunk response and automation server.

The Cost of a Wrong Word in Threat Intelligence by Rishika Desai

I recently had a sit-down with a senior leader in my company to discuss how I can assist them with security challenges. This person has been with my company for years, both before and after the IPO, and is now leading a massive engineering organization. Whenever I meet with folks I don't work with often, my number one rule is to ask lots of questions. So I asked: Where has security given you the most pain? He described threat intelligence in one request: he wanted the risk context for what he was building, so he could build it securely.

Risk context is a much better name for threat intelligence. It's supposed to inform; whether you are a detection engineer building rules or a CISO looking at the latest threats, you can choose to use the information or not. Desai examines this concept, but from the perspective that the information may be incorrect. A missing word, clobbered threat actor names, or overly confident language can make or break a threat intelligence report.

I love this blog because it highlights the importance of risk context, and the context can be screwed up if you don't effectively communicate in your writing. This same context applies to detection rules, whether in the documentation or your response playbooks. Accuracy and clarity matter, and that includes being clear about what you don't know as much as what you do know.

☣️ Threat Landscape

Geedge & MESA Leak: Analyzing the Great Firewall’s Largest Document Leak by Mingshi Wu

The big news this week from the People's Republic of China (PRC) is one of the largest document leaks related to the Great Firewall of China. I love leaks like this for several reasons. One, it gives insight into a culture that is vastly different from what we are used to in the West. Two, we get to see the technical implementations and architecture of a serious Internet censorship apparatus. If you set aside ethics, it's an impressive feat for a country with one of the world's largest populations.

Wu is updating this page in real-time with findings, so expect it to change as time passes. They also link net4people's GitHub issue tracker as they comb through the nearly 500 GB of data from GitLab, Confluence, and JIRA, so expect numerous findings in the coming weeks.

Inboxfuscation: Because Rules Are Meant to Be Broken by Andi Ahmeti

Permiso researcher Andi Ahmeti releases a Microsoft Exchange Inbox malicious rule creator and analyzer based on their research into threat actors abusing Inbox rules. Whenever I meet people in my day job who worry about advanced attackers using advanced techniques, I try to ground them back in situations like Ahmeti describes in this post. You may be a victim of a highly sophisticated adversary, but they would prefer to use tradecraft that is simple before resorting to their Rolodex of advanced attacks.

The most interesting aspect of this research is the exploration of Unicode obfuscation techniques and their associated detection opportunities. It reminds me a lot of malicious domain research, where using different character sets can trick a rendering application (such as an email client) into showing the lookalike characters instead of the Punycode representation, thereby confusing the victim into thinking they received a legitimate domain to click on.
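As a small illustration of why Unicode tricks defeat naive keyword matching, and what a detection-side answer can look like, here is a sketch that folds a rule keyword back toward plain text before comparing it. This is not Inboxfuscation's own logic. The `fold_obfuscation` and `inspect_keyword` helpers are hypothetical, and they only cover two classes of tricks: invisible format characters and compatibility variants such as fullwidth letters.

```python
import unicodedata


def fold_obfuscation(text: str) -> str:
    """Strip invisible format characters, then fold compatibility forms."""
    # Category "Cf" covers zero-width spaces, joiners, and similar characters.
    visible = "".join(ch for ch in text if unicodedata.category(ch) != "Cf")
    # NFKC maps fullwidth, mathematical, and other compatibility variants to
    # their plain equivalents. It does NOT map cross-script lookalikes
    # (for example, Cyrillic "а" to Latin "a").
    return unicodedata.normalize("NFKC", visible).casefold()


def inspect_keyword(keyword: str) -> dict:
    """Summarize what folding changed and what it could not resolve."""
    folded = fold_obfuscation(keyword)
    return {
        "folded": folded,
        "was_obfuscated": folded != keyword.casefold(),
        "non_ascii_after_folding": not folded.isascii(),
    }


if __name__ == "__main__":
    samples = [
        "pay\u200broll",                                 # zero-width space inside
        "\uff49\uff4e\uff56\uff4f\uff49\uff43\uff45",    # fullwidth "invoice"
        "p\u0430yroll",                                  # Cyrillic "а" lookalike
    ]
    for sample in samples:
        print(ascii(sample), inspect_keyword(sample))
```

The third sample is the interesting one: folding cannot fix a cross-script lookalike, so the sketch only flags that non-ASCII characters survived. In practice, that flag on an Inbox rule keyword is probably a detection opportunity by itself.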

Uncloaking VoidProxy: a Novel and Evasive Phishing-as-a-Service Framework | Okta Security by Houssem Eddine Bordjiba

The Okta Security Research team uncovered a new phishing-as-a-service kit dubbed VoidProxy. The campaign they uncovered started with phishing lures sent from compromised email addresses on marketing platforms. The level of indirection in this particular kit is impressive. In particular, the operators put a lot of time and energy into catching security scanners and researchers. They abuse Cloudflare's Turnstile CAPTCHA and Workers edge infrastructure to funnel potential victims into the phishing attack itself. The kit uses a standard attacker-in-the-middle workflow to capture non-phishing-resistant authentication codes for the attack.

Bordjiba gained access to the panel itself, providing a unique inside look at how these kits are constructed, distributed, and managed by threat actors.

Ongoing Supply Chain Attack Targets CrowdStrike npm Packages by Kush Pandya and Peter van der Zee

The news of the npm supply chain attack from last week continues, this time targeting CrowdStrike's npm packages. The Dune-themed attack has a rather unique attack chain. Once you install the backdoored package, it'll download TruffleHog and extract secrets on your local machine or CI/CD environment. It also backdoors GitHub Actions workflows with a file named shai-hulud-workflow.yml. The peculiar part here is that the attacker publishes a public repository named Shai-Hulud, which can be searched for across GitHub. Still, no one has (as of this post) figured out what these repositories do.

🔗 Open Source

Permiso-io-tools/Inboxfuscation

PowerShell-based Microsoft Exchange rule exploitation toolkit that I linked above under Threat Landscape. It does some really neat stuff with Unicode manipulation to defeat traditional regular expression and word-based detections.

Splunk and Active Directory repository referenced in the lab post above by Joseph Gitonga. Has a ton of out-of-the-box logging capabilities with Sysmon, PowerShell logging, and Audit policies. Also ships with some useful threat emulation tools, including Atomic Red Team, Disabled Defender, and fake users.

KQL testing repo from Blake Hensley's detection quality assurance piece under State of the Art. You provide it with a rule and your Azure credentials, and it'll perform a series of checks referenced in the blog to verify the efficacy against live data. You can adjust the query parameters or testing logic for your own detection risk tolerance.

Sneaky remap is a Linux defense evasion technique that hides shared object files from detection. These shared object files can be used for persistence, privilege escalation, and other defense evasion operations, and defenders usually rely on Linux filesystem commands to spot peculiar shared objects and stop things like LD_PRELOAD in their tracks. The theory section here explains the algorithm itself, which relies on taking file-backed memory mappings, preserving their permissions, and then moving them to anonymous memory to evade detection.
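If the technique leaves executable code sitting in anonymous memory, one hunting idea is to look for executable mappings with no backing file in `/proc/<pid>/maps`. The sketch below is my own heuristic and not part of the Sneaky remap repository. The `anonymous_exec_regions` helper is hypothetical, and JIT runtimes (browsers, Java, Node.js) will trip it too, so treat hits as leads rather than verdicts.

```python
def anonymous_exec_regions(maps_text: str) -> list:
    """Return (address_range, perms) for executable mappings with no name."""
    findings = []
    for line in maps_text.splitlines():
        # Line format: address perms offset dev inode [pathname]
        fields = line.split(maxsplit=5)
        if len(fields) < 5:
            continue
        address, perms = fields[0], fields[1]
        pathname = fields[5].strip() if len(fields) == 6 else ""
        # Skip anything with a name: file-backed mappings and named
        # regions such as [vdso] or [stack].
        if pathname:
            continue
        if "x" in perms:
            findings.append((address, perms))
    return findings


# Made-up sample in the /proc/<pid>/maps format, so the sketch runs anywhere.
SAMPLE_MAPS = """\
55d0c8a00000-55d0c8a02000 r-xp 00000000 08:01 131090 /usr/bin/cat
7f3a10000000-7f3a10021000 r-xp 00000000 00:00 0
7f3a2c000000-7f3a2c1c0000 r-xp 00028000 08:01 132345 /usr/lib/x86_64-linux-gnu/libc.so.6
7f3a2d000000-7f3a2d004000 rw-p 00000000 00:00 0
7ffd5a3f0000-7ffd5a3f2000 r-xp 00000000 00:00 0 [vdso]
"""

if __name__ == "__main__":
    for address, perms in anonymous_exec_regions(SAMPLE_MAPS):
        print(f"anonymous executable mapping: {address} ({perms})")
```

On a live Linux host you would feed it the contents of `/proc/<pid>/maps` for each process you care about, which typically requires root for processes you don't own.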

This tool utilizes the cURL library to simulate modern browsers by replicating their TLS and HTTP handshake techniques. This differs from basic techniques like setting User-Agents or emulating a browser, as it relies on manipulating packets over the wire (Layer 7 HTTP handshakes or Layer 6 for TLS), which are library- or application-specific.
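To show why swapping the User-Agent alone doesn't fool a fingerprinting defender, here is a sketch of JA3, one widely used TLS client fingerprint. JA3 is my choice of illustration and isn't named in the tool's description. It hashes five fields from the TLS ClientHello, none of which an HTTP header can change. The sketch assumes the fields are already parsed into integers and skips the GREASE-value filtering that real implementations perform. The sample values are made up.

```python
import hashlib


def ja3_string(version, ciphers, extensions, curves, point_formats):
    """Build the JA3 string: five comma-separated fields, values dash-joined."""
    fields = [[version], ciphers, extensions, curves, point_formats]
    return ",".join("-".join(str(v) for v in field) for field in fields)


def ja3_hash(version, ciphers, extensions, curves, point_formats):
    """MD5 hex digest of the JA3 string, used as the fingerprint."""
    raw = ja3_string(version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(raw.encode("ascii")).hexdigest()


if __name__ == "__main__":
    # Hypothetical ClientHello values: 771 is TLS 1.2 (0x0303).
    hello = dict(
        version=771,
        ciphers=[4865, 4866],
        extensions=[0, 11, 10],
        curves=[29, 23],
        point_formats=[0],
    )
    print(ja3_string(**hello))
    print(ja3_hash(**hello))
```

Two clients that send the same User-Agent but order their cipher suites or extensions differently get different hashes, which is the gap an impersonation tool has to close by reproducing the browser's handshake itself.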

And of course, the one time I didn't do a full read of the newsletter or send it to my "don't make stupid mistakes" GPT, I make a stupid mistake and repeat an entry.