Det. Eng. Weekly #122 - I stare at alerts like an iPad kid

If I do the silent stare long enough, maybe they'll go away

Welcome to Issue #122 of Detection Engineering Weekly!

Lately, I’ve been thinking about the concept of self-compassion and giving myself a break on certain things. It’s an interesting approach to not only being easier on yourself, but also on others. Headspace has some great meditations on this subject, but YouTube has a bunch of free meditations too.

I feel like preventing burnout with some self-compassion can help a lot of us in the daily grind of security.

Ok enough me talk, let’s get right into it.

💎 Detection Engineering Gem 💎

Scaling Netflix's threat detection pipelines without streaming by Zach Wilson

Security at Netflix in the 2010s seemed like a mystical, mad scientist place to work. I still remember using parts of Security Monkey, Scumblr and Sketchy for detection and intelligence work and being in awe of the creativity behind each tool. I was delighted to see the DataEngineer Substack author write a post on how Netflix built its threat detection pipeline in 2018.

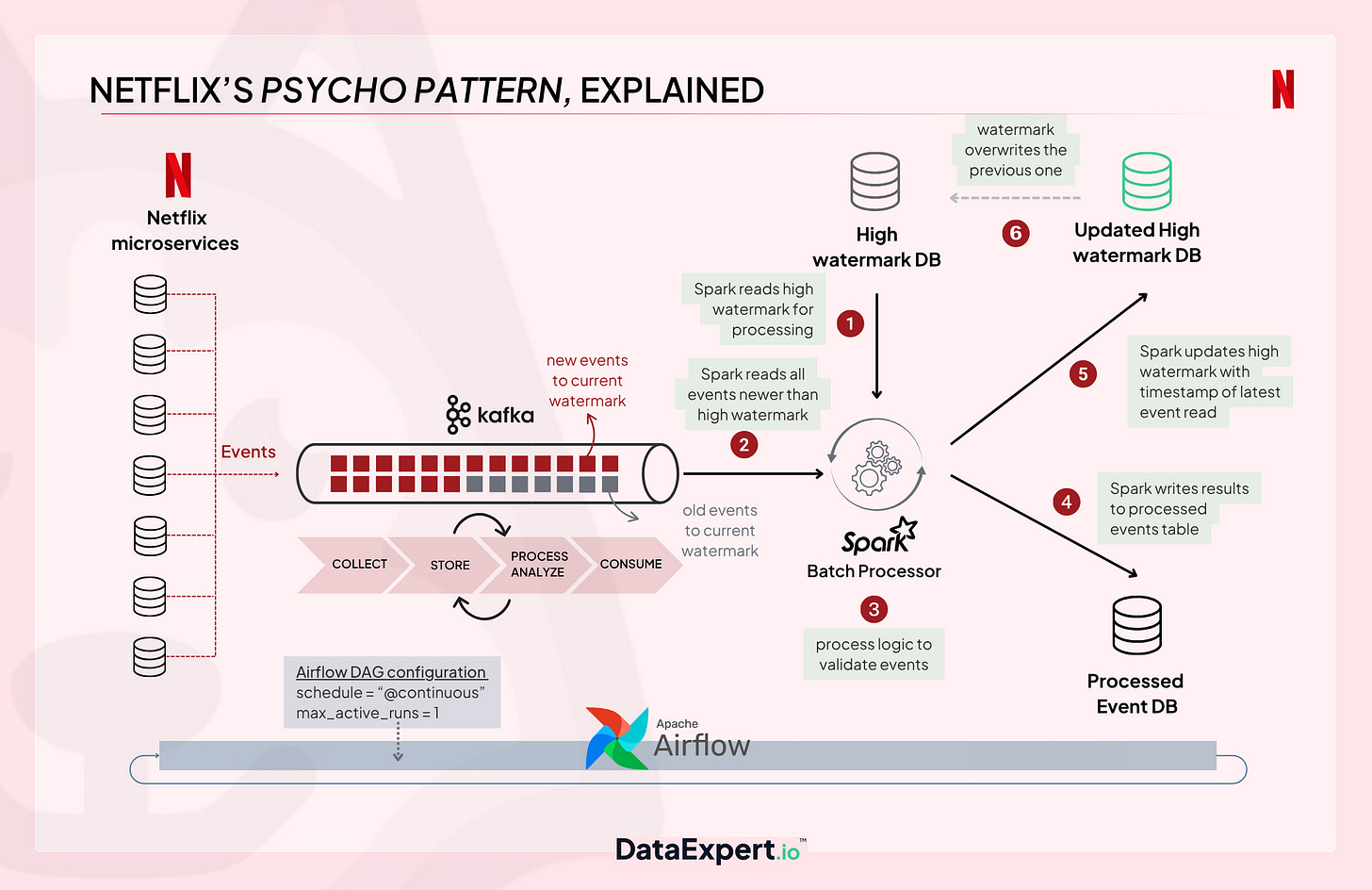

The architecture centered on reading data from SQS and Kafka, processing it using machine learning, and outputting alerts and signals into Netflix’s Security Operations. The most remarkable aspect, in my opinion, is the experimental nature of the data engineering team's approach. No SIEM, no rules, lots of machine learning and a continuously running Spark batch pipeline. They called this the Psycho Pattern.

I usually read posts like this and take lots of notes on the architecture and design so I can implement them at $DAYJOB. Amusingly, though, this pattern was aptly named: it sounded quite chaotic, and it didn’t really solve their issues.

The basic premise behind this pattern is that you maintain a message queue to read logs and telemetry from. You track your position in this queue via a watermark table, which stores the index of the highest message processed so far, and you pop off and process logs inside the Spark job until you run out of messages. Once finished, you update the high-watermark index and move on.

The problem with this approach, according to Wilson, was that the single-job architecture can cause cascading failures if the Spark job fails. For example, you read 100 new logs and begin the batch processing job. This processing times out, or runs out of memory, or loses connection to other external services. Because the watermark only moves after a successful run, the next run has to pick up those same 100 logs plus everything that arrived in the meantime, so the backlog grows with every failure.
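The watermark loop and its failure mode can be sketched in a few lines of plain Python. This is a toy illustration, not Netflix's Spark code: `run_batch`, `flaky`, the `detections` job name and the in-memory list standing in for the queue are all hypothetical names I made up for the example.

```python
from typing import Callable, Dict, List


def run_batch(
    queue: List[str],
    watermark_table: Dict[str, int],
    process: Callable[[str], None],
    job_name: str = "detections",  # hypothetical job name
) -> int:
    """Process every message past the stored watermark, then advance it."""
    start = watermark_table.get(job_name, 0)
    pending = queue[start:]
    for message in pending:
        process(message)  # any exception here aborts the whole batch
    # Only reached when the entire batch succeeded.
    watermark_table[job_name] = start + len(pending)
    return len(pending)


def flaky(message: str) -> None:
    """Stand-in for a processing step that times out partway through."""
    if message == "log-42":
        raise TimeoutError("simulated timeout")


queue = [f"log-{i}" for i in range(100)]
table: Dict[str, int] = {}

try:
    run_batch(queue, table, flaky)
except TimeoutError:
    pass  # the watermark was never written, so nothing counts as processed

# More logs arrive while the job is down.
queue.extend(f"log-{i}" for i in range(100, 150))

# The next run has to chew through the old batch and the new one.
processed = run_batch(queue, table, lambda message: None)
```

In this sketch the second run processes all 150 messages, including the 42 that had already been handled before the simulated timeout, because the watermark is all-or-nothing.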

It’s rare to read posts on what hasn’t worked, or what failed, since we see so many success stories. We have an adage in Brazilian Jiu-Jitsu, “You either win or you learn,” and I think that applies here nicely. I wouldn’t be close to where I am today without constant failure, since that breeds learning.

🔬 State of the Art

The Agentic Threat Hunter by Sydney Marrone

Threat hunting G.O.A.T. Sydney Marrone visits a topic that many security practitioners are either all-in or all-out about: agentic security. I believe that over the next few years, more of us will transition to being “team agent.” However, with few solid use cases and a significant amount of marketing out there, we need more blog posts like this one that showcase what actually works in our day-to-day operations.

Threat Hunting is a manual process by design, as it requires extensive planning and exploratory analysis. We aren’t necessarily upset when nothing useful comes out of a threat hunt, and don’t mind lots of false positives since we are looking for needles in lots of haystacks. To be honest, this is the perfect use case for LLMs and Agentic Security.

Tuning Detections isn’t Hard Unless You Make it Hard by Ryan G. Cox

I see many blogs that discuss tuning and its importance, but this is the first blog I’ve read that clearly outlines the situations where tuning is necessary and the type of tuning that goes into the practice. The best indicator of your detections working is how they are failing. Whether you generate a bunch of false positives with a “bad” rule, or you have a false negative detection from the red team because you messed up a field mapping, failure points to an area of improvement. So why not embrace it?

I love the number of situations and tuning styles Cox goes into in the post. Logic errors, poor documentation, incorrect thresholds, and not understanding your environment are all part of what makes this field interesting and challenging, so never turn down an opportunity to tune a rule!

Detection-as-Code: Building Systems, Not Just Rules by Harsh Mehta

I haven’t seen a systems thinking approach in a blog to detection-as-code before, so when I saw the title of this blog, my ears pricked up. In this post, Mehta introduces the concept of detection-as-code, but takes a step back to help readers understand that this practice is more than just keeping rules inside version control; it enables you to control the chaos of security operations.

The sample logs, code and rules make this piece an actionable read versus a theoretical one. Mehta starts with the Splunk rule, adds automation to create a saved search in Splunk, and then integrates the rule into a CI/CD platform with testing and GitHub Actions.
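To give a feel for the "testing" step, here is a minimal sketch of a rule checked against sample logs, the kind of test a CI job could run on every pull request. It is not Mehta's code and does not talk to Splunk: the `Rule` class, the `encoded_powershell` rule and the sample events are all hypothetical, and the rule logic is a plain Python predicate rather than SPL.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Event = Dict[str, str]


@dataclass
class Rule:
    """A detection expressed as a named predicate over a single event."""
    name: str
    matches: Callable[[Event], bool]


def evaluate(rule: Rule, events: List[Event]) -> List[Event]:
    """Return the events that the rule fires on."""
    return [event for event in events if rule.matches(event)]


# Hypothetical rule: PowerShell launched with an encoded command flag.
encoded_powershell = Rule(
    name="encoded_powershell",
    matches=lambda e: e.get("process") == "powershell.exe"
    and "-enc" in e.get("cmdline", "").lower(),
)

# Hypothetical sample logs that live next to the rule in version control.
SAMPLE_LOGS: List[Event] = [
    {"process": "powershell.exe", "cmdline": "powershell.exe -Enc SQBFAFgA"},
    {"process": "powershell.exe", "cmdline": "powershell.exe Get-Date"},
    {"process": "cmd.exe", "cmdline": "cmd.exe /c whoami"},
]


def test_encoded_powershell() -> None:
    """One true positive expected; the benign events must stay quiet."""
    hits = evaluate(encoded_powershell, SAMPLE_LOGS)
    assert len(hits) == 1
    assert hits[0]["cmdline"].endswith("SQBFAFgA")


test_encoded_powershell()
```

Keeping the sample logs beside the rule means a bad field mapping or a logic change shows up as a failing test before the rule ever ships.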

On Confidence by Richard Ackroyd

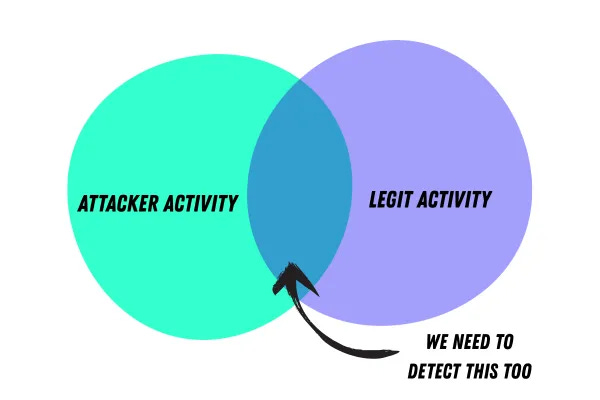

Detection Engineering operates in ambiguity since it’s essentially a labeling exercise. Given a queue of alerts with associated metadata, an analyst pops an alert off the queue and inspects it. The analyst asks the question: Is it a True or False positive? They apply a label using their best judgment, then have a certain number of actions afterward, depending on the label.

This becomes challenging due to context switching, poor documentation, and reliance on tribal knowledge. Detection Engineers generally use severity to determine the seriousness of an alert. But, in this post, Ackroyd asserts that severity is not enough and introduces the concept of Confidence. I’ve mostly seen confidence scores used in machine learning, such as a machine learning algorithm labeling a piece of malware with a confidence of 0-100 to “rate” its analysis.

Ackroyd presents some options on how we could utilize confidence in our detections, noting that confidence may be interpreted differently depending on who is triaging the alert. Take, for example, a Critical severity alert with a low confidence score. To an analyst, this could indicate the false positive-y nature of the alert, but to a threat hunter, it can suggest a weird signal worth a closer look.

They then propose a new type of alert metadata called “Alert Priority,” which captures more than just severity, and could be an interesting piece of information for analysts and hunters to review during their analyses.
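To make the idea concrete, here is one way severity and confidence could be folded into a single number. This is my own toy scoring, not Ackroyd's formula: the `SEVERITY_WEIGHT` table, the multiplication and the `alert_priority` name are all assumptions for illustration.

```python
# Hypothetical weights; a real program would tune these to its own environment.
SEVERITY_WEIGHT = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def alert_priority(severity: str, confidence: int) -> float:
    """Scale the severity weight by a 0-100 confidence score."""
    if not 0 <= confidence <= 100:
        raise ValueError("confidence must be between 0 and 100")
    return SEVERITY_WEIGHT[severity.lower()] * confidence / 100


# A noisy Critical rule versus a well-tuned High rule.
noisy_critical = alert_priority("Critical", 20)
solid_high = alert_priority("High", 90)

# Sort a small queue so the alert most worth an analyst's time comes first.
queue = sorted(
    [("Critical", 20), ("High", 90), ("Low", 100)],
    key=lambda alert: alert_priority(*alert),
    reverse=True,
)
```

With these made-up weights, the well-tuned High alert lands ahead of the noisy Critical one in the queue, which is roughly the behavior severity alone can't give you.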

Detecting ADCS Privilege Escalation by Alyssa Snow

This is a fun lab-style post on detecting privilege escalation techniques in Windows Active Directory Certificate Services (ADCS). Snow sets up a test environment, enables the correct logging configuration to detect the privilege escalation, runs the attack and shows how you can write the KQL query to find the attack. For threat emulation, Snow uses certipy, and when the environment is configured correctly and you look for the correct event IDs, you can see the alert in Sentinel on the other side of the experiment.

☣️ Threat Landscape

SharePoint ToolShell | Zero-Day Exploited in-the-Wild Targets Enterprise Servers by Simon Kenin, Jim Walter & Tom Hegel

As a follow-on to last week’s issue with the tagline “lol sharepoint”, SentinelOne researchers disclose three distinct, advanced campaigns leveraging the vulnerability to gain access to victim machines. Vulnerability exploitation of this magnitude generally comes in two waves: a slow-drip, advanced wave typically done by sophisticated actors, and a second wave done by mass scanners. It was cool to see how post-exploitation works on SharePoint, and the researchers captured several webshell-ish samples to share with the community.

Allianz Life says majority of customers' data stolen in hack by Harshita Meenaktshi (Reuters)

Insurance behemoth Allianz disclosed a customer data breach where threat actors exfiltrated data on almost all of their customers, employees and “financial professionals.” In their disclosure, Allianz said they notified the FBI, so hopefully the feds find the culprits, and maybe (just maybe) we get some better outcomes on how companies like this protect our personal data.

An important update (and apology) on our PoisonSeed blog by Expel Threat Intelligence

Expel Threat Intel released a transparency-style blog about a recent campaign they published on their website. It serves as a formal apology and retraction of some of the claims in a phishing-related post on PoisonSeed. TBH, it’s great seeing companies correct the record to showcase their analytical rigor, instead of shrugging it off and moving on.

Arizona Woman Sentenced for $17M Information Technology Worker Fraud Scheme that Generated Revenue for North Korea by U.S. Department of Justice

Christina Chapman, the DPRK asset that ran a large laptop farm for fake IT workers, was sentenced after her arrest a few months ago. Her impact was quite impressive: she helped DPRK IT workers fraudulently obtain jobs at over 300 U.S. companies and generated over $17 million in revenue to funnel back to the Motherland. They managed to steal 68 identities to perform this work, which makes it harder and harder to catch. The laptop farm pictures are pretty insane.

Scavenger Malware Distributed via eslint-config-prettier NPM Package Supply Chain Compromise by Cedric Brisson

Scavenger is a new strain of malware that has been found in backdoored NPM packages from compromised package maintainers. It has an impressive list of anti-EDR techniques once the victim installs the malicious package and downloads the second-stage malware. It’s the first malware family where I’ve seen threat researchers, like Brisson, find the phishing page leading to the compromise. NPM sets a hard 2FA requirement for maintainers of large packages, so I wonder if eslint-config-prettier did not meet that bar.

🔗 Open Source

I’ve been all-in on Cursor lately, and one of the coolest ways to configure it is via Cursor rules. These are essentially prompts loaded into a project you are working on, and they steer the Cursor models to follow the rules you set. If you can think of ways to write a prompt with instructions on how to draft or tune detection rules, this is a great spot to try.

This repo was originally opencode and moved to crush. It is an open-source Windsurf/Cursor competitor, and looks SO good. I’ll have to play with this and Claude to see how it compares. Again, write some rules or generate some logs!

vulncheck-oss/0day.today.archive

The good folks at VulnCheck cloned as much as they could off of 0day[.]today, a legendary website that held publicly available PoC exploits and shellcode. They got what they could from the Internet Archive, but it looks like 0day did a lot of work to prevent bot scraping, so much of the data was lost.

A GitHub org that holds repos for DFIR-IRIS, an open-source platform for security incident response. There are all kinds of goodies ranging from the platform itself to the specific modules you can load into DFIR-IRIS for additional enrichment.

An Active Directory enumeration and abuse tool, all written in Python. This tool was used for threat emulation in Snow’s post above in State of the Art.