DEW #137 - AI Agents For Security By Security, Free Sigma training & JA4 for beginners

The Louvre's WiFi password should've been ervouL

Welcome to Issue #137 of Detection Engineering Weekly!

✍️ Musings from the life of Zack in the last week:

I was in LA for a wedding and went to Venice Beach for the first time. It was awesome seeing pros at the skatepark, jam skaters, live music, and of course, an MF DOOM mural

Speaking of LA, there are Waymos EVERYWHERE

It started snowing here in New England, and we celebrated by running outside barefoot for as long as my family could bear it

This Week’s Sponsor: Nebulock

Trust Your Intuition. Vibe Hunt for Outcomes.

Good hunters feel suspicious activity before the alert ever hits. Vibe Hunting allows you to lean into that intuition and combine it with machine reasoning to hunt across data and telemetry without juggling tools. Nebulock’s threat hunting agents connect the dots, explain reasoning, and deliver contextual recommendations.

Hunting becomes less about process and more about bridging hypotheses with detection.

💎 Detection Engineering Gem 💎

How Google Does It: Building AI agents for cybersecurity and defense by Anton Chuvakin and Dominik Swierad

I typically avoid including vendor blogs that stay at a high level on complicated topics like security and AI. But this blog strikes a great balance in describing how they approached internal Google security engineers who were skeptical of using AI in their day-to-day work. I think any security organization looking to augment its security operations with LLMs can copy this approach, as it focuses on small, achievable wins grounded in risk reduction and reality rather than “thinking big.”

Chuvakin and Swierad split this approach up into four steps:

Hands-on learning builds trust: You wouldn’t want to purchase a SIEM without having your Detection & Response team understand how to use it, so why do the same thing with agentic systems?

Prioritize real problems, not just possibilities: Ground your agentic projects in problems you already understand well. They list two prime examples every D&R engineer could use help with: distilling large swaths of security data into insights, and quickly triaging malicious code to understand its function.

Measure, evaluate, and iterate to scale successfully: This section uses the dirty word/acronym “KPI” (cringes in business school). Instead, they gut-check success by asking two critical questions: “Did this meaningfully reduce risk?” and “What amount of repetitive tasks did this automate and free up capacity?”

Get your foundations right: This is the most nuanced section, and it carries the most value for folks to steal. When you develop agentic systems, keep each agent simple and focused on the particular task you need it to do. Agents aren’t security engineers; they are containerized experts in a small subset of tasks. Ensure they are proficient in those tasks, because what makes them powerful is how you connect them together.

The way I see this working for years to come is that agentic workflows will handle the “80%” work, such as repetitive tasks or analysis. The “20%” work that requires a ton of focus will remain the traditional expert work we know and love. This split still requires deep expertise in our field, but I worry about losing the learning that comes from doing the more boring or tedious work.

🔬 State of the Art

Detection Stream Sigma Training Playground by Kostas Tsialemis

Tsialemis, a long-time contributor to detection engineering research and a multi-time featured author in this newsletter, just published a free Sigma training playground for detection engineers. His accompanying blog post covers the platform in detail, but in short, it’s a CTF for writing rules. Cool features include interactive challenges, immediate feedback on your solutions, and the ability to write your own challenges and contribute them to the community.

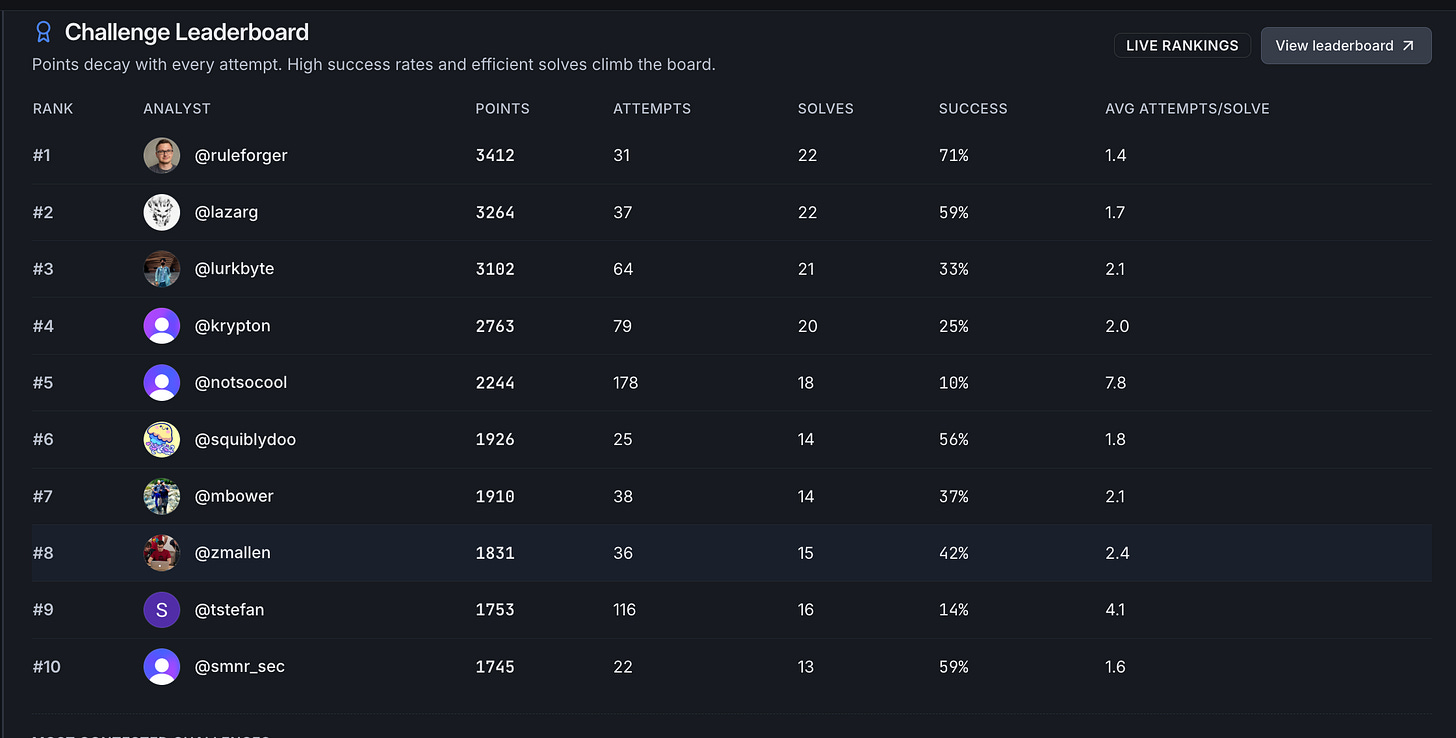

A leaderboard always motivates me, too. #8 as of 10 November!

Mistrusted Advisor: Evading Detection with Public S3 Buckets and Potential Data Exfiltration in AWS by Jason Kao

Trusted Advisor is a free service from AWS that scans customer infrastructure for security and resilience misconfigurations. One of the resources it checks is S3 buckets, whose misconfigurations have led to massive security incidents and breaches like those at Capital One and Twitch. So a 0-day bypass for a check like this lets an attacker evade defenses in your cloud accounts, and it appears that is exactly what Kao and the Fog Security team found.

The basic premise behind this attack is to set an insecure bucket policy that should trigger a Trusted Advisor alert, but that also explicitly denies three actions Trusted Advisor uses to perform the check.

So the insecure policy statement is on lines 4-10, while the bypass lives in a separate statement on lines 11-17. As it turns out, even AWS can get IAM wrong! Basically, the check failed open here and reported that nothing was wrong, when it should have failed closed and flagged that it couldn’t retrieve the telemetry it needed to make an assessment.
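Kao’s exact policy isn’t reproduced here, so as a rough hunting aid, here is a minimal Python sketch, assuming you already export bucket policies as JSON. It flags policies that both grant access to a wildcard principal and deny any `s3:GetBucket*` action, the kind of bucket-metadata read a posture check relies on. The function names and the example policy (including which three actions it denies) are hypothetical, and the sketch ignores `Condition` blocks, so treat it as a starting heuristic rather than a complete check.

```python
import json
from typing import Any

# Hypothetical heuristic, not Fog Security's or AWS's detection logic.
# Flags bucket policies that grant public access while also denying
# bucket-metadata reads (s3:GetBucket*). Condition blocks are ignored.


def _as_list(value: Any) -> list:
    """IAM policy fields can be a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def grants_public_access(stmt: dict) -> bool:
    """True for an Allow statement whose principal is the wildcard '*'."""
    principal = stmt.get("Principal")
    if isinstance(principal, dict):
        principal = principal.get("AWS")
    return stmt.get("Effect") == "Allow" and "*" in _as_list(principal)


def denies_bucket_metadata_reads(stmt: dict) -> bool:
    """True for a Deny statement covering any s3:GetBucket* action."""
    if stmt.get("Effect") != "Deny":
        return False
    actions = [str(a).lower() for a in _as_list(stmt.get("Action"))]
    return any(a.startswith("s3:getbucket") for a in actions)


def is_suspicious_bucket_policy(policy_json: str) -> bool:
    """Public Allow plus a Deny on bucket-metadata reads in the same policy."""
    statements = _as_list(json.loads(policy_json).get("Statement"))
    return any(grants_public_access(s) for s in statements) and any(
        denies_bucket_metadata_reads(s) for s in statements
    )


# Hypothetical policy; the denied actions are illustrative placeholders,
# not necessarily the three actions Trusted Advisor actually uses.
example_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Sid": "BlindTheCheck", "Effect": "Deny", "Principal": "*",
         "Action": ["s3:GetBucketPolicyStatus", "s3:GetBucketAcl",
                    "s3:GetBucketPublicAccessBlock"],
         "Resource": "arn:aws:s3:::example-bucket"},
    ],
}

print(is_suspicious_bucket_policy(json.dumps(example_policy)))  # True
```

Pairing the two conditions keeps noise down: plenty of legitimate policies grant public reads, and plenty use Deny statements, but a public bucket that also hides its own metadata is worth a look.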

The team reported the issue to AWS, which fixed it after two attempts. Fog Security also doesn’t seem happy with how AWS publicly disclosed the issue, since the disclosure referenced a non-existent action, an inaccuracy the hyperscaler later corrected.

All you need to know about JA3 & JA4 Fingerprints (and how to collect them) by Gabriel Alves

This piece is an easy-to-understand introduction to the powerful TLS fingerprinting algorithms, JA3 & JA4. With TLS everywhere, the underlying Application Layer traffic has become much harder to analyze for potential security indicators. You could set up TLS termination, but there’s a large cost associated with building that infrastructure, and decrypting and inspecting traffic also leads to compliance issues.

The JA* algorithms solve this by fingerprinting the unique characteristics of TLS handshakes. Virtually every TLS implementation has its own quirks and intricacies that make its handshake distinctive. Combined with infrastructure context, these fingerprints become a powerful way to cluster traffic and identify malware families, hosting infrastructure, or bots.

Alves provides readers with some great visuals to understand these unique fingerprints and utilizes the most powerful security tool in existence, Wireshark, to do so.
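The JA3 half of this is simple enough to sketch. Here is a minimal Python version, assuming the ClientHello fields have already been parsed (for example, read off Wireshark’s handshake dissection); raw packet parsing is out of scope. JA3 joins five fields (TLS version, cipher suites, extensions, elliptic curves, and point formats) as decimal values, dash-separated within a field and comma-separated between fields, drops GREASE values, and takes the MD5 of the result. The helper names are my own.

```python
import hashlib


def is_grease(value: int) -> bool:
    """GREASE values (RFC 8701) are 0x0a0a, 0x1a1a, ..., 0xfafa:
    both bytes are equal and each byte's low nibble is 0xa."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)


def ja3_string(version, ciphers, extensions, curves, point_formats) -> str:
    """Build the JA3 input string from already-parsed ClientHello fields,
    keeping each list in the order it appeared on the wire."""
    def field(values):
        return "-".join(str(v) for v in values if not is_grease(v))

    return ",".join([str(version), field(ciphers), field(extensions),
                     field(curves), field(point_formats)])


def ja3_hash(*fields) -> str:
    """The JA3 fingerprint is the MD5 hex digest of the JA3 string."""
    return hashlib.md5(ja3_string(*fields).encode()).hexdigest()


# Hypothetical ClientHello values, including GREASE entries that get dropped.
fields = (771, [0x1A1A, 4865, 4866], [0x2A2A, 0, 10, 11], [29, 23], [0])
print(ja3_string(*fields))  # 771,4865-4866,0-10-11,29-23,0
print(ja3_hash(*fields))
```

Because JA3 preserves wire order, clients that randomize extension order (as modern browsers do) produce unstable JA3 hashes, which is one of the motivations behind JA4’s sorted lists.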

Agentic Detection Creation: From Sigma to Splunk Rules (or any platform) by Burak Karaduman

I’m seeing more blog posts leveraging agentic workflow platforms to build detection content, and I’m all for it. At this point in our journey in detection engineering, I don’t see why you wouldn’t have agentic rule writing to assist you. Here’s why:

MITRE ATT&CK serves as a rich knowledge base of tradecraft references that we all fundamentally agree is the standard

Telemetry sources are well documented, and the startup cost of booting up an environment for testing is decreasing more and more

Threat intelligence companies and blogs help piece together attack chains that you can generalize

Sigma serves as a universal language that enforces consistent rule structure and documentation, and it has a rich library of converters for your SIEM of choice

Detection as code pipelines serve as a quality gate for human review and for testing

SIEM APIs have capabilities to ingest a candidate rule and make sure it’s valid in its native language
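The quality-gate piece of that list is the easiest place to start. Here is a minimal Python sketch of a pre-merge lint for candidate Sigma rules, assuming each rule has already been parsed from YAML into a dict (for example, with PyYAML’s `yaml.safe_load`). The specific checks are my own illustrative choices, loosely based on the Sigma rule format (required `title`, `logsource`, and `detection` with a `condition`), plus house-style checks for a description and an ATT&CK technique tag. The official Sigma tooling has its own, more thorough validators.

```python
import re

# Hypothetical detection-as-code gate; not part of any official Sigma tooling.
REQUIRED_FIELDS = ("title", "logsource", "detection")
ALLOWED_LEVELS = {"informational", "low", "medium", "high", "critical"}
ATTACK_TECHNIQUE_TAG = re.compile(r"^attack\.t\d{4}(\.\d{3})?$")


def lint_sigma_rule(rule: dict) -> list[str]:
    """Return a list of problems; an empty list means the rule passes the gate."""
    problems = [f"missing required field: {f}" for f in REQUIRED_FIELDS if f not in rule]

    detection = rule.get("detection")
    if isinstance(detection, dict) and "condition" not in detection:
        problems.append("detection block has no condition")

    level = rule.get("level")
    if level is not None and level not in ALLOWED_LEVELS:
        problems.append(f"unknown level: {level}")

    # House-style checks: documentation and ATT&CK mapping for reviewers.
    if not any(ATTACK_TECHNIQUE_TAG.match(str(t)) for t in rule.get("tags", [])):
        problems.append("no ATT&CK technique tag (attack.tNNNN)")
    if not rule.get("description"):
        problems.append("missing description")
    return problems


# Hypothetical candidate rule, e.g. as drafted by an agent.
candidate = {
    "title": "Suspicious Encoded PowerShell Command",
    "description": "Hypothetical example rule",
    "tags": ["attack.execution", "attack.t1059.001"],
    "logsource": {"product": "windows", "category": "process_creation"},
    "detection": {
        "selection": {"Image|endswith": "\\powershell.exe",
                      "CommandLine|contains": " -enc "},
        "condition": "selection",
    },
    "level": "medium",
}
print(lint_sigma_rule(candidate))  # []
```

Returning a list of problems rather than a boolean makes the output easy to post back to an agent (or a human reviewer) as concrete fix-up instructions.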

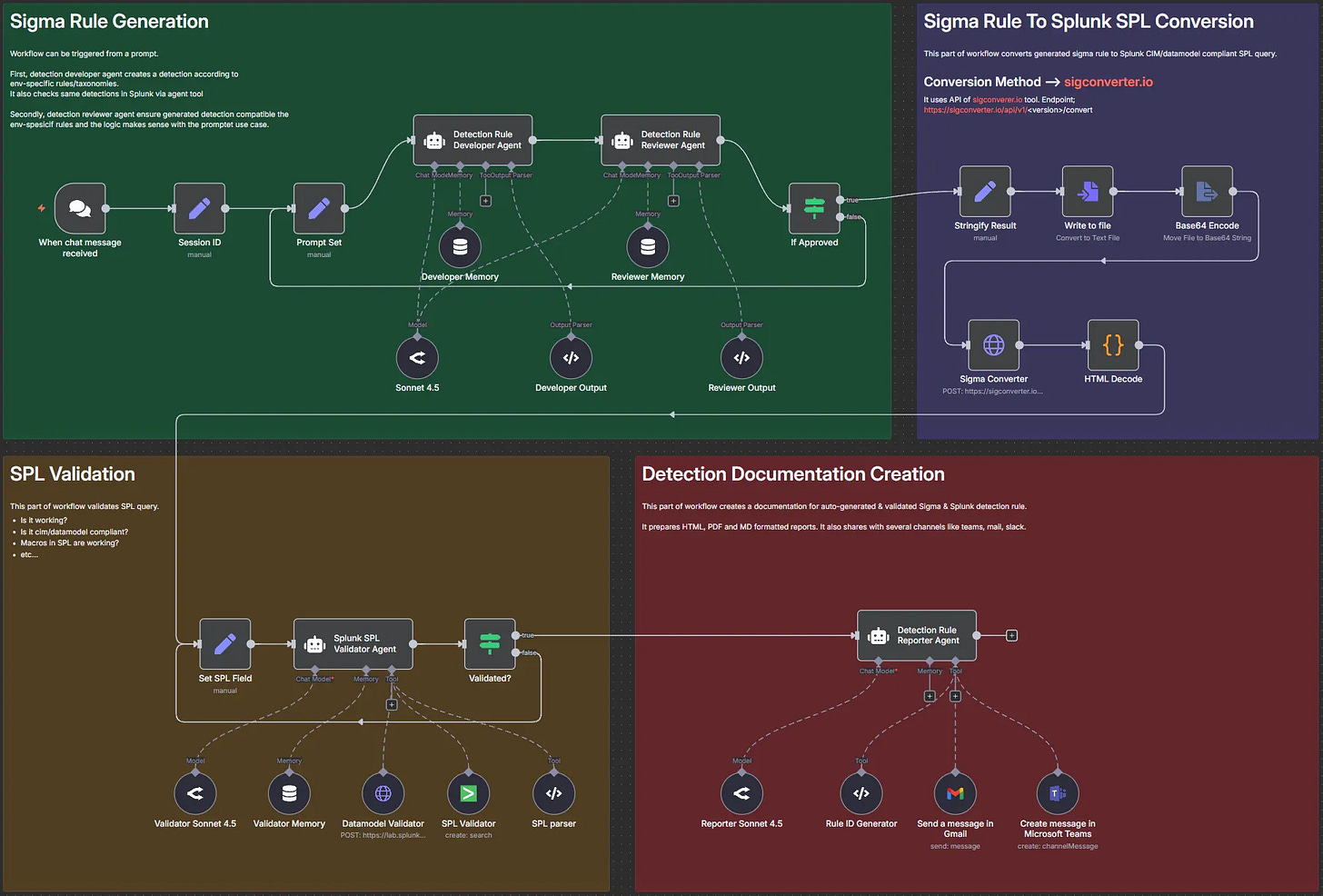

Karaduman’s approach follows the pattern I listed above, and it’s functionally sound, sticking to many of the fundamentals of the detection engineering lifecycle. The agents take an idea as input and continuously research, design, and validate candidate rules. Once the Sigma rule is created, Karaduman uses sigconverter.io to translate it into SPL, and a separate SPL validation agent makes sure it can run in production.

It’s a clever setup with several “smaller” agents each performing a single task, which looks to be the optimal design for this kind of agent-to-agent workflow. I’m impressed by the simplicity of the architecture, and Karaduman was kind enough to include the fully visualized n8n workflow for readers to experiment with.

Can you guess what the most crucial step is here? The red box, of course! It compiles every piece of documentation in the rule, validates it with Anthropic’s Claude Sonnet 4.5 model, generates a report, and messages the hypothetical detection engineer via email and Teams.
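To illustrate the “several smaller agents” shape outside of n8n, here is a minimal Python sketch of a sequential pipeline where each stage does one job and validation stages can halt the run early. Every stage is a hypothetical stand-in: in a real workflow, each would call an LLM, a Sigma-to-SPL converter, or a SIEM API, and none of these names come from Karaduman’s workflow.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Candidate:
    """State passed from stage to stage for one detection idea."""
    idea: str
    sigma: str = ""
    spl: str = ""
    report: list[str] = field(default_factory=list)


Stage = Callable[[Candidate], bool]  # a stage returns False to stop the pipeline


def research(c: Candidate) -> bool:
    c.report.append(f"research: gathered context for '{c.idea}'")
    return True


def design(c: Candidate) -> bool:
    c.sigma = f"title: {c.idea}\n<LLM-drafted rule body>"  # placeholder draft
    c.report.append("design: drafted Sigma rule")
    return True


def validate_sigma(c: Candidate) -> bool:
    ok = c.sigma.startswith("title:")  # stand-in for a real Sigma lint
    c.report.append(f"validate_sigma: {'pass' if ok else 'fail'}")
    return ok


def convert(c: Candidate) -> bool:
    c.spl = "<converted SPL query>"  # stand-in for a Sigma-to-SPL converter
    c.report.append("convert: produced SPL")
    return True


def validate_spl(c: Candidate) -> bool:
    ok = bool(c.spl)  # a real stage might submit the query to the SIEM API
    c.report.append(f"validate_spl: {'pass' if ok else 'fail'}")
    return ok


def run_pipeline(c: Candidate, stages: list[Stage]) -> bool:
    """Run stages in order and halt at the first failure. The accumulated
    report goes to the human reviewer whether or not the run passed."""
    for stage in stages:
        if not stage(c):
            return False
    return True


cand = Candidate(idea="Encoded PowerShell")
passed = run_pipeline(cand, [research, design, validate_sigma, convert, validate_spl])
print(passed)  # True
print("\n".join(cand.report))
```

Keeping each stage tiny means you can swap one out (say, a different converter) or tighten a single validation step without touching the rest of the chain.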

☣️ Threat Landscape

GTIG AI Threat Tracker: Advances in Threat Actor Usage of AI Tools by Google Threat Intelligence Group

Unlike the MIT research called out in last week’s cyberslop post, which made some bold claims about AI usage by ransomware operators, Google’s intelligence group brings the receipts on threat actor usage of LLM tools during operations.

I quite like the coining of “just-in-time” malware to describe two families they track as PROMPTFLUX and PROMPTSTEAL. Both query an LLM during execution to generate malicious code on demand, and PROMPTFLUX goes a step further by having the model rewrite and obfuscate its own code.

U.S. Nationals Indicted for BlackCat Ransomware Attacks on Healthcare Organizations by Steve Alder

Two American security professionals were indicted for allegedly hacking companies and deploying BlackCat ransomware as affiliates. This is a wild story: one of them worked at DigitalMint conducting RANSOMWARE NEGOTIATIONS on behalf of victims, and the other was an incident response manager at Sygnia. Talk about insider threat, right?

In a classic insider threat motive, the main conspirator was in debt and went into business with BlackCat to pay it down. Exploiting financial pressure is a common recruitment tactic for spy agencies, so it makes sense that it works for criminal gangs too.

Ex-L3Harris Cyber Boss Pleads Guilty to Selling Trade Secrets to Russian Firm by Kim Zetter

Is it insider threat week? It feels like insider threat week. Zetter reports on a man who was arrested and pleaded guilty to selling trade secrets to an “unnamed Russian software broker”. The accused worked for L3Harris Trenchant, a U.S.-based developer of zero-day and exploitation tools, and earned seven figures in the process.

Interview with the Chollima V by Mauro Eldritch, Ulises, and Sofia Grimaldo

This series by the Bitso Quetzal team highlights their research (and shenanigans) from live interviews with DPRK IT workers. The interesting part of this installment, and potentially a change in WageMole's TTPs, is that WageMole operators are interviewing and recruiting collaborators to sit job interviews on their behalf. There were early reports of this happening, but Grimaldo, Ulises, and Eldritch brought receipts in the form of chat logs, Zoom screenshots, and LinkedIn profiles.

LANDFALL: New Commercial-Grade Android Spyware in Exploit Chain Targeting Samsung Devices by Unit 42

LANDFALL is an Android spyware family targeting Samsung devices, discovered by Unit 42 researchers. They found it while hunting for exploit chains related to the DNG processing vulnerability that Apple disclosed earlier this year. DNG is a raw image format that both Android and iOS can process, and the vulnerabilities and their exploit chains live within that image-processing logic.

It’s pretty neat how the Unit 42 team came across this malicious file: they were hunting for DNGs to replicate the iOS exploit and found one with a ZIP archive appended to it that instead exploited a Samsung vulnerability patched earlier this year. The team pulled apart the malicious DNG, found two .so files, and mapped out the associated command and control network.
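If you want to run a similar hunt over your own file telemetry, here is a minimal Python sketch of one heuristic, assuming you have the raw file bytes: flag files that start with a TIFF header (DNG is TIFF-based) and contain a ZIP end-of-central-directory signature near the end of the file. This is my own illustrative check, not Unit 42’s methodology or signature, and it will need tuning, since image data can contain the 4-byte signature by chance.

```python
# Hypothetical hunting heuristic for "image with a ZIP appended" files.
TIFF_MAGICS = (b"II*\x00", b"MM\x00*")  # little- and big-endian TIFF headers
EOCD_SIG = b"PK\x05\x06"                # ZIP end-of-central-directory record
# The EOCD record is 22 bytes plus an optional comment of up to 65,535 bytes,
# so an appended archive's EOCD must start within this distance of EOF.
EOCD_SEARCH_WINDOW = 22 + 0xFFFF


def looks_like_dng_with_appended_zip(data: bytes) -> bool:
    """True if the bytes start like a TIFF/DNG and end like a ZIP archive."""
    if not data.startswith(TIFF_MAGICS):
        return False
    return EOCD_SIG in data[-EOCD_SEARCH_WINDOW:]


def scan_file(path: str) -> bool:
    """Read a file from disk and apply the heuristic."""
    with open(path, "rb") as fh:
        return looks_like_dng_with_appended_zip(fh.read())


# Synthetic samples: a TIFF header, filler, then a fake local header and EOCD.
sample = b"II*\x00" + b"\x00" * 64 + b"PK\x03\x04" + b"\x00" * 26 + EOCD_SIG + b"\x00" * 18
print(looks_like_dng_with_appended_zip(sample))                      # True
print(looks_like_dng_with_appended_zip(b"II*\x00" + b"\x00" * 64))   # False
```

A hit is only a lead: the next step is to carve the archive out and inspect its contents, which is where Unit 42 found the .so payloads.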

🔗 Open Source

A Bash script for sending spam to WiFi-connected printers over LAN.

😭😭😭

MAD-CAT is a chaos engineering tool that runs data-wiping and corruption attacks against databases, so detection engineers can simulate database failures and wiper-style attacks. It supports six database technologies: MongoDB, Elasticsearch, Cassandra, Redis, CouchDB, and Apache Hadoop.

JA4 TLS fingerprinting library referenced in Alves’ post above. I’ve linked JA4 before, but it’s a seriously effective tool to add to detection arsenals, especially if you can instrument it on publicly accessible servers.
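To make the JA4 format concrete, here is a simplified Python sketch of the TLS client fingerprint (`JA4_a_JA4_b_JA4_c`), written from my reading of FoxIO’s public JA4 specification and assuming ClientHello fields are already parsed. It skips edge cases such as DTLS versions and non-alphanumeric ALPN values, and the function name is mine; use the linked library for real traffic. The key difference from JA3 is that cipher and extension lists are sorted before hashing, so extension-order randomization doesn’t change the fingerprint.

```python
import hashlib

# Simplified JA4 (TLS client) sketch; TLS versions only, no DTLS handling.
TLS_VERSIONS = {0x0304: "13", 0x0303: "12", 0x0302: "11",
                0x0301: "10", 0x0300: "s3", 0x0002: "s2"}
SNI_EXT, ALPN_EXT = 0x0000, 0x0010


def is_grease(value: int) -> bool:
    """GREASE values (RFC 8701): both bytes equal, low nibble 0xa."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)


def _hash12(text: str) -> str:
    """First 12 hex chars of SHA-256, or twelve zeros for an empty list."""
    return hashlib.sha256(text.encode()).hexdigest()[:12] if text else "0" * 12


def ja4(protocol, version, ciphers, extensions,
        supported_versions=(), alpn=(), sig_algs=()) -> str:
    ciphers = [c for c in ciphers if not is_grease(c)]
    extensions = [e for e in extensions if not is_grease(e)]
    versions = [v for v in supported_versions if not is_grease(v)]

    # JA4_a: protocol, TLS version, SNI flag, counts, ALPN first/last char.
    tls_version = TLS_VERSIONS.get(max(versions) if versions else version, "00")
    sni = "d" if SNI_EXT in extensions else "i"
    first_alpn = alpn[0] if alpn else ""
    alpn_code = first_alpn[0] + first_alpn[-1] if first_alpn else "00"
    ja4_a = (f"{protocol}{tls_version}{sni}"
             f"{min(len(ciphers), 99):02d}{min(len(extensions), 99):02d}{alpn_code}")

    # JA4_b: sorted cipher suites as 4-digit hex.
    cipher_str = ",".join(sorted(f"{c:04x}" for c in ciphers))

    # JA4_c: sorted extensions minus SNI/ALPN, then signature algorithms in order.
    ext_str = ",".join(sorted(f"{e:04x}" for e in extensions
                              if e not in (SNI_EXT, ALPN_EXT)))
    sig_str = ",".join(f"{s:04x}" for s in sig_algs)
    ja4_c_input = f"{ext_str}_{sig_str}" if sig_str else ext_str

    return f"{ja4_a}_{_hash12(cipher_str)}_{_hash12(ja4_c_input)}"


# Hypothetical ClientHello values, with GREASE entries that get ignored.
fp = ja4("t", 0x0303, ciphers=[0x1A1A, 0x1301, 0x1302, 0xC02B],
         extensions=[0x2A2A, 0x0000, 0x0010, 0x002B, 0x000D],
         supported_versions=[0x3A3A, 0x0304, 0x0303],
         alpn=["h2", "http/1.1"], sig_algs=[0x0403, 0x0804])
print(fp.split("_")[0])  # t13d0304h2
```

The human-readable `JA4_a` prefix is often useful on its own for quick pivots (for example, all TLS 1.3 clients with no SNI), while the two hashes narrow things down to a specific client implementation.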

A Windows minifilter driver that blocks filesystem access to specific file paths targeted by infostealers. The hardcoded paths it protects include browser secrets, cryptocurrency wallets and keys, and chat application data.

An Event Tracing for Windows (ETW) consumer that requests stack traces to leak kernel addresses. This can help with exploit development when you need kernel base addresses to exploit a kernel vulnerability, potentially defeating KASLR.
