DEW #135 - Chaos Detection Engineering, Connecting Policy to IR playbooks & Spooky AWS Policies

spooky scary detection rules keepin' me up at night

Welcome to Issue #135 of Detection Engineering Weekly!

✍️ Musings from the life of Zack in the last week

I’m helping host the second edition of Datadog Detect tomorrow! We have an excellent lineup with folks I’ve featured several times on this newsletter. It’s fully free, fully online, and also available on-demand. We have a small capture the flag afterward to win some socks.

👉 Register Here 👈 and don’t forget to meme out in the webinar chat like last time.

We had close to 1,000 chatters, so it felt like a Twitch stream.

I’m all booked for London and got some excellent pub and restaurant recommendations. Please keep them coming :D

This Week’s Sponsor: detections.ai

Community Inspired. AI Enhanced. Better Detections.

detections.ai uses AI to transform threat intel into detection rules across any security platform. Join 9,000 detection engineers leveraging AI-powered detection engineering to stay ahead of attackers.

Our AI analyzes the latest CTI to create rules in SIGMA, SPL, YARA-L, KQL, and YARA and translates them into more languages. Community rules for PowerShell execution, lateral movement, service installations, and hundreds of threat scenarios.

Use invite code “DEW” to get started

💎 Detection Engineering Gem 💎

How to use chaos engineering in incident response by Kevin Low

Hey look, security steals SRE concepts again and it’s a beautiful thing! Jokes aside, this is a concept I’ve believed heavily in since I started working professionally with SRE organizations 10+ years ago. Chaos engineering is a practice that intentionally injects faults into a production system to test resiliency and build confidence in the face of resiliency failures. Basically, it challenges you to break something to see how fast you can react to and recover from an outage, almost like intentionally popping a tire on your car to see how well you react and how quickly you can change it.

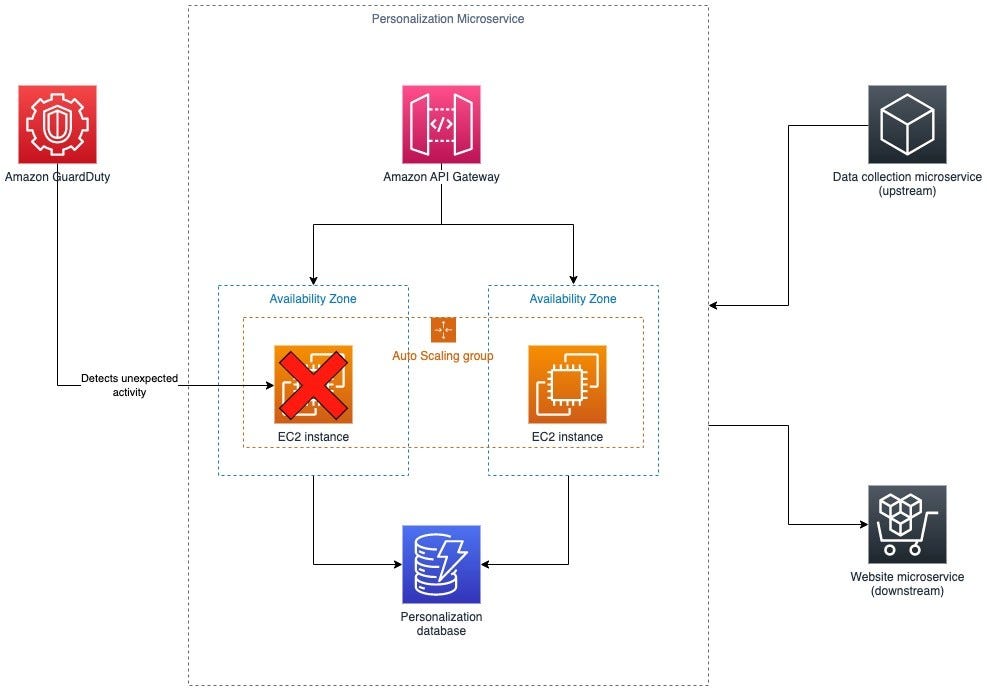

This seems applicable to security, no? That’s where Low’s post comes in to test the idea. First, Low makes a gentle introduction to the concept and then presents a test architecture and a threat model in an AWS environment to experiment with.

In this scenario, a microservice experiences some unexpected security activity and GuardDuty generates an alert. If you shut down an EC2 instance, what exactly happens? Enter Chaos Engineering!

There are five steps in a Chaos Engineering experiment: defining the steady state, generating a hypothesis, running the experiment, verifying the effects, and improving the system. This has a nice carryover for testing detections and their infrastructure in production states.

Steady State: What is our baseline for MTTR and MTTD? What is the general uptime of our log sources? What configurations are in place to prevent attack paths?

Hypothesis: When a workstation queries a known malicious domain, our SIEM will detect it within 15 minutes, notify the security team within 2 minutes, and the machine will be contained 1 minute after that.

Running the experiment: Load a benign domain inside your threat intelligence look up tables, remotely connect to a machine and perform a DNS lookup for the benign domain.

Verifying the effects: Did we generate an alert in the SIEM? Was there a Slack notification to contain the host? Did it fall within our hypothesis’ parameters?

Improving the system: The Slack alert did not defang the domain, and the containment tooling only blocked the domain and not the resolution IP.
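
To make the hypothesis and verification steps concrete, here is a minimal Python sketch that checks observed timestamps against the time budgets from the example hypothesis above. The names `Hypothesis`, `Observation`, and `verify` are hypothetical and not from Low's post; in practice the timestamps would come from your SIEM, chat tooling, and containment tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Hypothesis:
    """Time budgets for each stage (hypothetical field names)."""
    detect_within: timedelta
    notify_within: timedelta
    contain_within: timedelta


@dataclass
class Observation:
    """Timestamps collected during the experiment; None means it never happened."""
    injected_at: datetime
    alerted_at: Optional[datetime] = None
    notified_at: Optional[datetime] = None
    contained_at: Optional[datetime] = None


def verify(h: Hypothesis, o: Observation) -> list:
    """Return a list of findings; an empty list means the hypothesis held."""
    findings = []
    stages = [
        ("detection", o.injected_at, o.alerted_at, h.detect_within),
        ("notification", o.alerted_at, o.notified_at, h.notify_within),
        ("containment", o.notified_at, o.contained_at, h.contain_within),
    ]
    for name, start, end, budget in stages:
        if start is None or end is None:
            findings.append(f"{name}: never happened")
            break  # later stages are measured from this one, so stop here
        elapsed = end - start
        if elapsed > budget:
            findings.append(f"{name}: took {elapsed}, budget {budget}")
    return findings


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 12, 0, 0)  # moment the benign domain was queried
    hypothesis = Hypothesis(
        detect_within=timedelta(minutes=15),
        notify_within=timedelta(minutes=2),
        contain_within=timedelta(minutes=1),
    )
    observed = Observation(
        injected_at=t0,
        alerted_at=t0 + timedelta(minutes=9),
        notified_at=t0 + timedelta(minutes=10),
        contained_at=t0 + timedelta(minutes=14),
    )
    for finding in verify(hypothesis, observed):
        print(finding)
```

Each stage is measured from the previous one, which mirrors how the hypothesis is worded ("1 minute after that"). Anything the function returns feeds the "improving the system" step.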

I love this approach, and I’m unsure whether any companies are considering this type of fault or “adversary injection”-style testing. Breach and Attack Simulation products focus on coverage of rules, but I haven’t seen anyone think about this from a Detection & Response validation angle.

🔬 State of the Art

A Retrospective Survey of 2024/2025 Open Source Supply Chain Compromises by Filippo Valsorda

In this post, Valsorda performs a retrospective survey analysis of all open-source supply chain attacks from 2024 to 2025. At Datadog, we collect 100s to 1000s of these types of malicious packages to help defend our environment, but a supply chain compromise is more than just a malicious package. These last 3 months alone have had compromises that made mainstream news, such as Shai-Hulud and s1ngularity.

Valsorda grouped the root causes of 17 major attacks to help readers understand initial access and subsequent attack paths. Funny enough, phishing was the number one root cause of these package takeovers, and number two was a new attack path I haven’t been able to put into words: control handoff. The basic premise behind control handoffs is that it’s part social engineering, and IMHO, part insider threat. For example, the infamous xz Utils attack originated when a developer gradually added a backdoor to the library over time. The polyfill[.]io attack involved purchasing a domain that had expired and the new owner served malicious JavaScript to victims.

It’s a fascinating read as a survey blog, but it highlights how fragile the open-source software ecosystem is. It’s unfair how large companies and organizations demand feature and security work from some of these projects without pay, and, understandably, burnout from these demands has become a real security issue once attackers exploit them.

Re-Writing the Playbook — A detection-driven approach to Incident Response by Regan Carey

Merging governance, risk and compliance documents and policies across an organization is difficult. I think the most salient example of incorporating a policy into practice is mandatory 2FA. You write a policy that mandates 2FA, perhaps based on a SOC2 or ISO27001 audit, and your IT team buys physical YubiKeys and Google Workspace to ensure that all authentication requires a USB-C dongle.

This gets harder and more nebulous in the threat detection space. 2FA is clean and measurable; you can pull reports of the number of employees enrolled in 2FA and drive it to completion. But, how do you drive a Ransomware Response Playbook into completion? Is it that you have a playbook? Is it that you have EDR tooling, plus a playbook? Or is it that you have a playbook, you have EDR tooling, and you have Bob from IT who presses a button when an EDR fires?

But what about individual rules that respond to ransomware? Are they firing accurately? Is the SPECIFIC response playbook inside the rule up to date? When do you know it's out of compliance with the overall playbook? I think the answer is: you don’t and you won’t. This is where Carey begins their exercise and proposes their Incident Response Diamond concept.

Translation and mutation of data can result in loss of specificity, which is no different from a data engineering pipeline problem. Data engineering solves this through meticulous field mapping and clear documentation. I think this is what Carey’s Diamond concept proposes here. Basically, they define a handoff from non-technical playbooks to rules, but they keep a lineage of which playbooks are invoked by which rules so you know which policy each rule falls under.
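
The lineage idea can be illustrated with a small data structure. This is not Carey's Diamond model, just a sketch under my own assumptions: each rule records which playbook (and which version of it) its response steps were written against, and each playbook records the policy it implements. `Playbook`, `Rule`, and `lineage_gaps` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Playbook:
    name: str
    policy: str   # the GRC policy this playbook implements
    version: int  # bumped whenever the playbook changes


@dataclass(frozen=True)
class Rule:
    name: str
    playbook: str          # name of the playbook this rule invokes
    playbook_version: int  # playbook version the rule's response steps were written against


def lineage_gaps(rules, playbooks):
    """List rules whose playbook is missing or newer than what the rule references."""
    by_name = {p.name: p for p in playbooks}
    gaps = []
    for rule in rules:
        pb = by_name.get(rule.playbook)
        if pb is None:
            gaps.append(f"{rule.name}: no playbook named {rule.playbook!r}")
        elif rule.playbook_version < pb.version:
            gaps.append(
                f"{rule.name}: written against v{rule.playbook_version} of "
                f"{pb.name!r}, current is v{pb.version} (policy: {pb.policy})"
            )
    return gaps


if __name__ == "__main__":
    playbooks = [Playbook("ransomware-response", "Incident Response Policy", 3)]
    rules = [
        Rule("mass-file-rename", "ransomware-response", 3),
        Rule("shadow-copy-deletion", "ransomware-response", 1),
        Rule("suspicious-rdp-login", "lateral-movement-response", 1),
    ]
    for gap in lineage_gaps(rules, playbooks):
        print(gap)
```

Running a check like this in CI for your detection repo is one way to find out when a rule drifts out of step with the playbook it claims to follow.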

I think this is a great approach, but it means your security response and GRC teams need lots of alignment to pull it off. Documentation is one of the hardest parts of security, and keeping rules up to date is already hard enough.

Fantastic AWS Policies and Where to Find Them by David Kerber

The hardest thing in Computer Science is cache invalidation. The second hardest thing in Computer Science is naming things. For security, I think the hardest thing is understanding cloud identity models. The second hardest thing is also naming things.

One of the best ways in AWS to reduce the blast radius of attacks, or prevent attacks altogether, is to leverage the myriad of AWS policies that they make available to customers. But a word of caution from Kerber: the number of tools you have at your disposal here can also be your downfall. In fact, as Chester Le Bron puts it:

You now need to become a SME in the operating system called AWS and its core services, some of which (like IAM) could be considered its own OS due their complexity

So, in this post, Kerber outlines every type of AWS policy that helps manage access. There are several types: some let you Allow or Deny access, while others only Deny, and you can apply them across things like Users, Resources, Service Accounts and even GitHub Actions.
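
To show why the distinction between granting policies and limiting policies matters, here is a heavily simplified Python sketch of how a single-account request might be decided. It is a teaching model, not AWS's actual evaluation engine: it ignores conditions, principals, resource-based policies, session policies, and cross-account access, and it uses shell-style wildcards, which only approximate IAM matching. The function names and the "guardrail" grouping (policies such as SCPs or permission boundaries that limit access without granting it) are my own labels.

```python
from fnmatch import fnmatchcase


def matches(statement, action, resource):
    """True if the statement's Action and Resource patterns cover the request."""
    return any(fnmatchcase(action, p) for p in statement["Action"]) and any(
        fnmatchcase(resource, p) for p in statement["Resource"]
    )


def allows(policy, action, resource):
    """True if any Allow statement in the policy covers the request."""
    return any(s["Effect"] == "Allow" and matches(s, action, resource) for s in policy)


def decide(action, resource, identity_policies, guardrails):
    """Simplified decision; each policy is a list of statement dicts."""
    every_statement = [s for policy in identity_policies + guardrails for s in policy]
    # 1. An explicit Deny anywhere always wins.
    if any(s["Effect"] == "Deny" and matches(s, action, resource) for s in every_statement):
        return "Deny (explicit)"
    # 2. Guardrails never grant access, but each one must still allow the request.
    if not all(allows(g, action, resource) for g in guardrails):
        return "Deny (implicit)"
    # 3. Some identity policy has to actually grant the access.
    if any(allows(p, action, resource) for p in identity_policies):
        return "Allow"
    return "Deny (implicit)"


if __name__ == "__main__":
    identity = [[{"Effect": "Allow", "Action": ["s3:*"], "Resource": ["*"]}]]
    scp = [
        {"Effect": "Allow", "Action": ["*"], "Resource": ["*"]},
        {"Effect": "Deny", "Action": ["s3:DeleteBucket"], "Resource": ["*"]},
    ]
    bucket = "arn:aws:s3:::example-bucket"
    print(decide("s3:GetObject", bucket + "/key", identity, [scp]))
    print(decide("s3:DeleteBucket", bucket, identity, [scp]))
    print(decide("ec2:RunInstances", "*", identity, [scp]))
```

Even in this toy version you can see the failure mode Kerber warns about: a request can be denied by a layer you forgot existed, and the more layers you stack, the harder it is to reason about the effective permission.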

Luckily, each section is split up to help folks use this blog as a reference post in case you need to come back for a refresher. They also open-sourced a tool called iam-collect to help retrieve all of these policies locally for analysis. I’ll list the tool in the open-source section at the bottom of this week’s issue!

Introducing CheckMate for Auth0: A New Auth0 Security Tool by Shiven Ramji

CheckMate is a free Auth0 tenant configuration tool that operates as a CSPM for Auth0 deployments. They have several checks for all kinds of misconfigurations present in the Auth0 environment, and you can run them on an interval to detect drift of the environment and fix it before it becomes a problem. One of the cool parts here that is less CSPM-y from a pure security product perspective is their extensibility runtime checks. It’ll do several checks against custom Auth0 runners to find everything from hardcoded passwords to vulnerable npm packages.

☣️ Threat Landscape

UN Convention against Cybercrime opens for signature in Hanoi, Viet Nam by United Nations Office on Drugs and Crime

The United Nations hosted its “Convention against Cybercrime” in Vietnam last week. Besides sounding like a sick conference (I hope someone wore a hacker hoodie), they had 72 countries sign an international treaty that provides guidance and guardrails for nations to battle international cybercrime. The post has some interesting highlights from the treaty, including standards for electronic evidence collection, the ability to share data easily, and recognition that the dissemination of non-consensual sexual images is an offense.

Lessons from the BlackBasta Ransomware Attack on Capita by Will Thomas

Cyber threat intelligence G.O.A.T. Will Thomas dissected the 136-page ICO report on Capita Group’s breach by BlackBasta in 2023 for some juicy intelligence and lessons learned. The cool part of this is that Will found messages from the BlackBasta chat leak that line up with the timeline published in the ICO report.

It’s nice to get commentary from a CTI expert on publicly facing penalty notices and disclosures. Lessons learned are great at a high level, but digging into exact TTPs from BlackBasta and comparing them to the material failures within the security program at Capita is way more useful to the rest of the security community.

CVE-2025-59287 WSUS Unauthenticated RCE by Batuhan Er

This week, Microsoft released an out-of-band security update for its Windows Server Update Services (WSUS) product. WSUS allows Microsoft administrators to manage the installation of Windows updates in their fleet. The deserialization vulnerability results in Remote Code Execution, so Microsoft labeled CVE-2025-59287 as a 9.8.

In this vulnerability walkthrough, Er follows the vulnerable code path and ends with a PoC to exploit the vulnerability. The discovery here is that WSUS deserializes encrypted XML objects unsafely in the GetCookie() endpoint. You can send over any arbitrary object (or a specially crafted one) to get RCE.

Exploitation of Windows Server Update Services Remote Code Execution Vulnerability (CVE-2025-59287) by Chad Hudson, James Maclachlan, Jai Minton, John Hammond and Lindsey O’Donnell-Welch

As a follow-up post to Er’s above, the Huntress team found in-the-wild exploitation of CVE-2025-59287. A handful of their customers had Internet-exposed WSUS servers. When the vulnerability details and subsequent PoCs dropped, attackers leveraged the exploit against exposed servers. Most of the activity looked like initial reconnaissance, but this post goes to show how fast you have to react to emerging vulnerabilities, especially when you have misconfigurations that could have prevented exploitation.

The team also dropped a Sigma rule and IoCs for readers to hunt on.

Hugging Face and VirusTotal: Building Trust in AI Models by Bernardo Quintero

This is a ~small product update for VirusTotal’s integration into HuggingFace’s registry of AI models. I usually don’t post product updates, but both VirusTotal and HuggingFace are community-driven products. It’s nice to see the VirusTotal team commit to helping developers identify malicious models hosted on HuggingFace.

🔗 Open Source

GitHub link for the CheckMate project that was open-sourced by the Auth0 team. You can see all of their checks in code and it looks like it operates similarly to how Prowler works.

Kerber’s iam-collect repo from the story I linked in State of the Art above. Give it access to your AWS environment and it’ll rip through the IAM policies and download them to disk. It links to a separate GitHub project called iam-lens to help simulate and evaluate effective permissions.

EmergingThreats/pdf_object_hashing

PDF Object hashing is a technique similar to imphash where you compare structures of PDF documents without focusing on the content inside. imphash is a helpful technique for identifying similar binary features and symbols so you can cluster malware samples to find new ones. This follows the same philosophy so you can cluster malicious PDF documents using similar techniques.
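
To give a feel for the idea, here is a rough Python sketch of structure-only hashing. It is not the algorithm used in EmergingThreats/pdf_object_hashing; it is my own simplification that pulls the `/Type` of each object with a regex, joins those labels in order, and hashes the result. It skips compressed object streams and will be fooled by streams that happen to contain the bytes `endobj`. The name `structure_hash` is hypothetical.

```python
import hashlib
import re

# Matches "N G obj ... endobj" and captures the object body (naive on purpose).
OBJ_RE = re.compile(rb"\d+\s+\d+\s+obj\b(.*?)\bendobj\b", re.DOTALL)
# Captures the name that follows /Type, e.g. Catalog, Pages, Page.
TYPE_RE = re.compile(rb"/Type\s*/([A-Za-z0-9]+)")


def structure_hash(pdf_bytes: bytes) -> str:
    """Hash the ordered list of object types, ignoring the content inside them."""
    labels = []
    for body in OBJ_RE.findall(pdf_bytes):
        match = TYPE_RE.search(body)
        labels.append(match.group(1) if match else b"Untyped")
    return hashlib.sha256(b"|".join(labels)).hexdigest()


if __name__ == "__main__":
    lure_a = (
        b"1 0 obj\n<< /Type /Catalog /Pages 2 0 R >>\nendobj\n"
        b"2 0 obj\n<< /Type /Pages /Count 1 >>\nendobj\n"
        b"3 0 obj\n(invoice for March)\nendobj\n"
    )
    lure_b = lure_a.replace(b"invoice for March", b"invoice for April")
    # Same skeleton, different content, so the two hashes match.
    print(structure_hash(lure_a) == structure_hash(lure_b))
```

Two lures built from the same template but with different text hash to the same value, which is the property that makes clustering possible.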

I’ve been following Chainguard’s malcontent project for a while and it looks like they’ve been throwing a lot of development at it. It’s a supply-chain compromise detection system that uses a butt-ton (yes, a butt ton) of analysis techniques, including close to 15,000 YARA detections, to help detect these compromises before they make it into your build and production systems.

Machine-readable knowledge base of forensic artifact information. It has a good amount of yaml files that store metadata around specific sources and what files and directory paths you can use during forensic analysis.

Great read as always. Thanks, Zack.

Chaos Detection Engineering is necessary (and unheard of) due to an inherent problem with SOARs. I believe that SOAR made it easy for non-engineers to build code ("automations") via LC/NC (good!), but without knowing or applying the hard-won engineering solutions that help write good code, like Test-Driven Development, modular programming with functions instead of writing repetitive/hard-to-manage code, diff-style commit logs allowing precise change tracking, or pair programming. You can see this if you talk to a SOAR vendor - they emphasize how easy it is to *create* content and spend very little time talking about aiding maintenance/reliability of content.