Absolute measurement corrupts severity, absolutely

A repost/import from my other blog: a rant on alert severity calculations.

I sometimes get so excited about an alert or a finding, then immediately feel a sense of dread because of this comic. Is this a bun? Who else cares besides me?

Tell me if you’ve been in this situation before: you’re working as a SOC analyst, detection engineer, or security researcher, and you’ve just created a new rule for your SIEM. It took blood, sweat, and tears to get it exactly how you wanted it. It has lots of unit tests and links to articles and blog posts that document the TTP you are trying to capture, and it’s a high-visibility rule because management asked for it. Now comes the dreaded question: what should the severity of the rule be, and how can you logically explain why it’s set to that level?

You sit back and think. You’re a professional. A logical, creative, and driven individual. “Well, management asked for it,” you say to yourself, “especially after they read about the breach this rule helps prevent, so I can’t just mark it as an insignificant info or low. They’d question my judgment!” Alright, so it’s gotta be higher than low. That leaves medium, high, and critical.

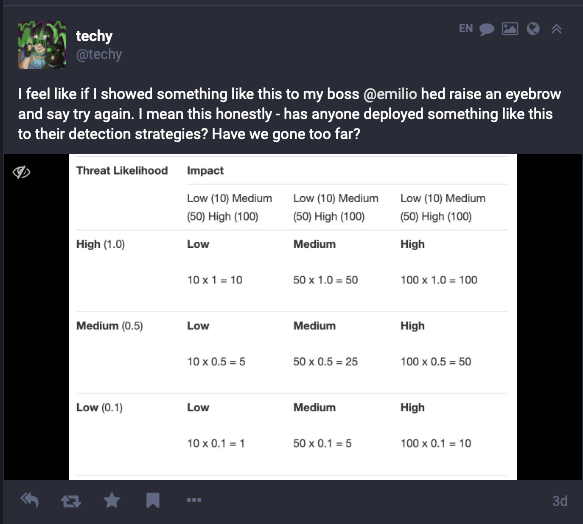

You pull up your organization’s Risk Severity Matrix document. In it, you sift through all kinds of mathematical equations to give you direction. The goal here is to apply rigor to your decision, because without math, you’d just be making this up anyway.

The document is several matrices lined up with different colors and selection parameters: likelihood of occurrence, impact if successful, countermeasures in place to mitigate damage, and whether someone should be woken up for this. It starts to feel like you are reading a different language. How the hell are YOU responsible for computing this?

After 30 minutes, your brain starts to melt, and you let out a big sigh. “Screw it,” you say. “Critical. Management wants it, my team wants it, and it just seems important.” You deploy to production, pat yourself on the back, and move on.

What’s the problem here?

I’ve been in this exact scenario many times in my career. You need to label a rule’s alerts with a severity that you hope is accurate enough. You want the severity to be right, because it would really suck to ruin somebody’s night by paging them for an alert that turns out to be a nothing burger. Or worse: the alert fires, but the severity wasn’t set high enough, so nobody gets woken up, and now the company is burned. So what gives? How did we get here? Why do we have so much power?

There are a lot of ways to measure the severity of a potential security alert, and I think we’ve taken the search for the best one a bit too far. The security industry latched onto the concept of being on call because the bad guys never sleep, so why should we? After we made THAT decision, we needed some logic to separate an alert that’s merely interesting from an alert that makes you go, “Oh, shit.”

Here’s the problem. Security is full of brilliant people. And because we have so many brilliant people, we devised clever ways to find bad things. Once we found those bad things, we let our lizard brains take us one step further and say, “Well, the only way to explain how bad this evil thing is, is to build something equally brilliant to quantify its severity.”

Look, I am not going to name and shame severity models. The only question I am asking is this: does a given model explain to my boss or my organization why someone should be interrupted, paged, or woken up? How does it help my alert explainability?

Alert severity explainability

I joined Mastodon after the crazy Twitter exodus and started thinking about ways I could write about this subject. I also had COVID. And what do you do when you are bored, isolated, and have COVID? You start ~~tweeting~~ tooting at your boss, the CISO!

I had a performance management review scheduled with Emilio the week after this toot, so I thought tagging him in this investigation would get me into his good graces 😇😇

After more and more Googling, I realized I should be careful: a lot of smart people have worked on this problem. But I also noticed a pattern. Everything I investigated focused on absolute measurement: a series of inputs goes into a formula, and the output is a severity on a scale from informational to critical.

Severity = Probability of occurrence * Threat Actor Jerk Coefficient * (Compensating Control - Cups of Coffee) - abs(Last time I took a vacation - Burnout)

Not all threat actors are jerks, but many jerks are threat actors

Aha! Mathematics saves the day yet again! But here is the problem: humans are generally bad at absolute measurement.

Exhibit A is a study in which different outsourcing companies were asked for an absolute estimate of the hours of work needed for a given set of specifications. It turns out their estimates were all over the place. Culture, bias, expertise, and the level of detail in the specifications (or lack thereof) all played a role in how firms priced a project. So what can we do instead?

Security has to learn yet again from Dev and Ops: relative measurements to the rescue!

Estimating work for software is difficult. But developers and operations folks figured this out long ago with a little thing called Agile Development. Rather than being all waterfall-y, you can get a couple of sprints under your belt and compare feature requests and bug reports with work you’ve already done!

> “So I believe that a button on our website is a 2-point story because I worked on a slider for our website 2 sprints ago, and that was a 2-pointer, and the code is relatively the same.” - An unknown enlightened developer, Initech, 1998

Bingo! Relative measurement. Humans rock.

So how does this apply to alert severity? Well, we should embrace what we’ve done before. What does this specific rule look like compared to the rest of our rule library? Similar TTPs, similar environment? Does it alert on a very specific case or a broad one? These may seem like absolute measurements, but you’d be surprised how good humans are at taking vague details like these and clustering a new rule with similar existing rules.

Once you’ve clustered a rule, assigning severity is easy: it gets the same severity as the rules in its cluster. And yeah, finger to the wind is totally fine here! No more math. Justify your PHP webshell rule as a Medium because it looks awfully similar to an ASP.NET webshell rule that is already a Medium, and bam! Deploy away.
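Humans do this clustering in their heads, but the idea can also be sketched in code. Below is a minimal sketch, assuming each rule is described by a hypothetical set of tags (ATT&CK technique IDs, environment, scope) and that "similar" means Jaccard overlap of those tags; a new rule takes the majority severity of its k most similar rated rules. The `Rule` type, the tag vocabulary, and `suggest_severity` are illustrative names, not an existing tool.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    tags: frozenset[str]          # hypothetical descriptors: TTPs, environment, scope
    severity: str | None = None   # None for a new rule that has not been rated yet


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Fraction of descriptors two rules share, from 0.0 (none) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_severity(new_rule: Rule, library: list[Rule], k: int = 3) -> str:
    """Suggest a severity by majority vote among the k most similar rated rules."""
    # Score every already-rated rule and drop the ones with no overlap at all.
    scored = [(jaccard(new_rule.tags, r.tags), r) for r in library if r.severity is not None]
    scored = [(score, r) for score, r in scored if score > 0]
    if not scored:
        raise ValueError(f"no similar rated rules for {new_rule.name!r}; rate it by hand")
    # Most similar first; ties keep library order because sorting is stable.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    votes = Counter(r.severity for _, r in scored[:k])
    return votes.most_common(1)[0][0]


# Hypothetical rule library, for illustration only.
library = [
    Rule("ASP.NET webshell", frozenset({"T1505.003", "web-server", "file-write"}), "medium"),
    Rule("JSP webshell", frozenset({"T1505.003", "web-server", "file-write", "java"}), "medium"),
    Rule("LSASS memory dump", frozenset({"T1003.001", "endpoint", "credential-access"}), "high"),
    Rule("Impossible travel login", frozenset({"T1078", "identity", "cloud"}), "low"),
]

php_rule = Rule("PHP webshell", frozenset({"T1505.003", "web-server", "file-write", "php"}))
print(suggest_severity(php_rule, library))  # medium
```

Here the PHP rule overlaps only with the two webshell rules, so both neighbors vote Medium. The output is a suggestion to argue from ("it looks like these rules"), not a score to hide behind; a human still makes the call, especially when a rule has no close neighbors.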

Alert severity can be alert urgency. Different name, similar outcomes

Now that you can cluster, what does it mean for day-to-day operations to have an informational, low, medium, high, or critical alert? This is where documentation and an SLA come into play. I’m pretty bullish on treating security as a ship in the ocean rather than an immovable fortress: you have to keep the ship afloat. So how would you classify problems within the ship, in increasing levels of severity, from something to note, to something interesting, to something catastrophic?

Sailing analogies aside, I am impressed with how the DevOps profession solved this issue years ago. My employer has a great blog post on alert urgency (not severity!) that classifies alerts as Records, Notifications, and Pages. Atlassian has a five-tier model that discusses service degradation and customer annoyance. Notice that each of these fits on one page and can be adapted quickly.
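A one-page urgency policy can be written down as a plain lookup table from severity to how (and how fast) a human hears about it. A minimal sketch, assuming a Records / Notifications / Pages split like the one described above; which severities land in which tier, and the acknowledgement SLAs, are hypothetical placeholders for whatever your team agrees on.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import timedelta
from enum import Enum


class Urgency(Enum):
    RECORD = "record"              # logged for later review; nobody is interrupted
    NOTIFICATION = "notification"  # sent to a queue or channel for working hours
    PAGE = "page"                  # wakes up whoever is on call


@dataclass(frozen=True)
class Policy:
    urgency: Urgency
    ack_within: timedelta | None   # hypothetical SLA; None means no response required


# Hypothetical mapping: the point is that it fits on one page and is easy to change.
SEVERITY_POLICY: dict[str, Policy] = {
    "informational": Policy(Urgency.RECORD, None),
    "low": Policy(Urgency.RECORD, None),
    "medium": Policy(Urgency.NOTIFICATION, timedelta(days=1)),
    "high": Policy(Urgency.NOTIFICATION, timedelta(hours=4)),
    "critical": Policy(Urgency.PAGE, timedelta(minutes=15)),
}


def route(severity: str) -> Policy:
    """Look up how an alert of the given severity should reach a human."""
    try:
        return SEVERITY_POLICY[severity.lower()]
    except KeyError:
        raise ValueError(f"unknown severity: {severity!r}") from None


policy = route("Critical")
print(policy.urgency.value, policy.ack_within)  # page 0:15:00
```

Framed this way, arguing about "critical" becomes arguing about "should this page someone at 3 a.m.?", which is a question a boss can actually evaluate.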

Conclusion

TL;DR: focus on relative measurement. There will be some upfront cost and pain, but it gets easier as time goes on. That pain is the same thing Agile teams go through when assigning points, and it quickly fades. Next, frame your severity model from a notification perspective rather than a threat perspective. You might find it achieves the same thing without all the absolute measurement that goes into quantifying threat scores.